Teaching and Research guides

Systematic reviews.


What is synthesis?


Synthesis is a stage in the systematic review process where extracted data (findings of individual studies) are combined and evaluated. The synthesis part of a systematic review will determine the outcomes of the review.

There are two commonly accepted methods of synthesis in systematic reviews:

- Quantitative data synthesis

- Qualitative data synthesis

The way the data is extracted from your studies and synthesised and presented depends on the type of data being handled.

If you have quantitative information, some of the more common tools used to summarise data include:

- grouping of similar data, i.e. presenting the results in tables

- charts, e.g. pie-charts

- graphical displays such as forest plots

If you have qualitative information, some of the more common tools used to summarise data include:

- textual descriptions, i.e. written words

- thematic or content analysis

Whatever tool/s you use, the general purpose of extracting and synthesising data is to show the outcomes and effects of various studies and identify issues with methodology and quality. This means that your synthesis might reveal a number of elements, including:

- overall level of evidence

- the degree of consistency in the findings

- what the positive effects of a drug or treatment are, and what these effects are based on

- how many studies found a relationship or association between two things

In a quantitative systematic review, data is presented statistically. Typically, this is referred to as a meta-analysis.

The usual method is to combine and evaluate data from multiple studies. This is normally done in order to draw conclusions about outcomes, effects, shortcomings of studies and/or applicability of findings.

Remember, the data you synthesise should relate to your research question and protocol (plan). In the case of quantitative analysis, the data extracted and synthesised will relate to whatever method was used to generate the research question (e.g. PICO method), and whatever quality appraisals were undertaken in the analysis stage.

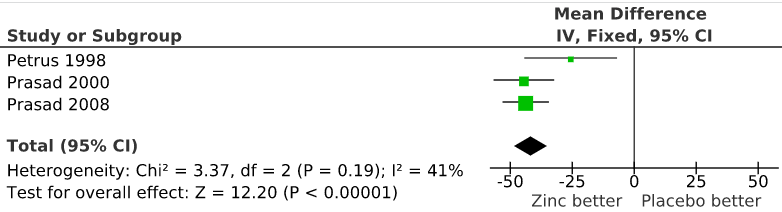

One way of accurately representing all of your data is in the form of a forest plot. A forest plot combines the results of multiple clinical trials in a single display, showing the point estimates arising from different studies of the same condition or treatment.

It comprises a graphical display and, often, a table. The graphical display shows the mean value (the point estimate) for each trial, usually with a confidence interval (the horizontal bars). Each mean is plotted relative to the vertical line of no difference.
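The layout described above can be sketched in a few lines of Python. This is a minimal text-only illustration, not a substitute for dedicated meta-analysis software: the three studies, their mean differences and their standard errors are invented, and the 95% interval uses the normal approximation (estimate ± 1.96 × standard error), which is an assumption of this sketch.

```python
# Hypothetical studies: (label, mean difference, standard error).
STUDIES = [
    ("Study A", -0.40, 0.20),
    ("Study B", 0.10, 0.15),
    ("Study C", -0.25, 0.10),
]


def confidence_interval(estimate, std_error, z=1.96):
    """Return (lower, upper) bounds of an approximate 95% interval."""
    return estimate - z * std_error, estimate + z * std_error


def crosses_no_difference(estimate, std_error):
    """True when the interval includes zero, the line of no difference."""
    lower, upper = confidence_interval(estimate, std_error)
    return lower <= 0.0 <= upper


def forest_row(label, estimate, std_error, lo=-1.0, hi=1.0, width=41):
    """Draw one study: '-' spans the interval, 'o' marks the estimate,
    '|' marks the vertical line of no difference."""
    def column(value):
        clipped = min(max(value, lo), hi)
        return round((clipped - lo) / (hi - lo) * (width - 1))

    lower, upper = confidence_interval(estimate, std_error)
    cells = [" "] * width
    for i in range(column(lower), column(upper) + 1):
        cells[i] = "-"
    cells[column(0.0)] = "|"
    cells[column(estimate)] = "o"
    return f"{label:<8} {''.join(cells)}  {estimate:+.2f} [{lower:+.2f}, {upper:+.2f}]"


for label, estimate, std_error in STUDIES:
    print(forest_row(label, estimate, std_error))
```

A study whose horizontal bar touches the `|` column has an interval that includes no difference, which is what `crosses_no_difference` checks numerically.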

- Forest Plots - Understanding a Meta-Analysis in 5 Minutes or Less (5:38 min) In this video, Dr. Maureen Dobbins, Scientific Director of the National Collaborating Centre for Methods and Tools, uses an example from social health to explain how to construct a forest plot graphic.

- How to interpret a forest plot (5:32 min) In this video, Terry Shaneyfelt, Clinician-educator at UAB School of Medicine, talks about how to interpret information contained in a typical forest plot, including table data.

- An introduction to meta-analysis (13 mins) Dr Christopher J. Carpenter introduces the concept of meta-analysis, a statistical approach to finding patterns and trends among research studies on the same topic. Meta-analysis allows the researcher to weight study results based on size, moderating variables, and other factors.

Journal articles

- Neyeloff, J. L., Fuchs, S. C., & Moreira, L. B. (2012). Meta-analyses and Forest plots using a microsoft excel spreadsheet: step-by-step guide focusing on descriptive data analysis. BMC Research Notes, 5(1), 52-57. https://doi.org/10.1186/1756-0500-5-52 Provides a step-by-step guide on how to use Excel to perform a meta-analysis and generate forest plots.

- Ried, K. (2006). Interpreting and understanding meta-analysis graphs: a practical guide. Australian Family Physician, 35(8), 635- 638. This article provides a practical guide to appraisal of meta-analysis graphs, and has been developed as part of the Primary Health Care Research Evaluation Development (PHCRED) capacity building program for training general practitioners and other primary health care professionals in research methodology.

In a qualitative systematic review, data can be presented in a number of different ways. A typical procedure in the health sciences is thematic analysis.

As explained by James Thomas and Angela Harden (2008) in an article for BMC Medical Research Methodology:

"Thematic synthesis has three stages:

- the coding of text 'line-by-line'

- the development of 'descriptive themes'

- and the generation of 'analytical themes'

While the development of descriptive themes remains 'close' to the primary studies, the analytical themes represent a stage of interpretation whereby the reviewers 'go beyond' the primary studies and generate new interpretive constructs, explanations or hypotheses" (p. 45).
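The three stages quoted above can be pictured as a simple data structure. The following Python sketch is only an illustration of how codes, descriptive themes and analytical themes relate to one another: every quotation, code and theme name is invented, and the grouping itself remains a matter of reviewer judgement that no code can automate.

```python
from collections import defaultdict

# Stage 1: line-by-line coding. Each tuple is (study, line of text, code).
# All entries are invented for illustration.
CODED_LINES = [
    ("Study 1", "I never knew who to call with questions", "unclear contact point"),
    ("Study 2", "The nurse explained every result to me", "information from staff"),
    ("Study 2", "Nobody told me what the next step was", "unclear contact point"),
    ("Study 3", "I kept my own notes on symptoms", "self-monitoring"),
]

# Stage 2: reviewers group codes into descriptive themes (hypothetical grouping).
DESCRIPTIVE_THEME_OF = {
    "unclear contact point": "Access to professionals",
    "information from staff": "Access to professionals",
    "self-monitoring": "Patient-led activity",
}

# Stage 3: reviewers propose analytical themes that interpret the descriptive ones.
ANALYTICAL_THEMES = {
    "A reliable route to information supports patient control": [
        "Access to professionals",
        "Patient-led activity",
    ],
}


def studies_by_descriptive_theme(coded_lines, theme_of):
    """Collect the studies that contribute to each descriptive theme."""
    themes = defaultdict(set)
    for study, _line, code in coded_lines:
        themes[theme_of[code]].add(study)
    return {theme: sorted(studies) for theme, studies in themes.items()}


def studies_behind(analytical_theme, analytical_themes, by_descriptive):
    """Trace an analytical theme back to the primary studies supporting it."""
    studies = set()
    for descriptive in analytical_themes[analytical_theme]:
        studies.update(by_descriptive.get(descriptive, []))
    return sorted(studies)


by_descriptive = studies_by_descriptive_theme(CODED_LINES, DESCRIPTIVE_THEME_OF)
for theme in ANALYTICAL_THEMES:
    print(theme, "<-", studies_behind(theme, ANALYTICAL_THEMES, by_descriptive))
```

Keeping the link from each analytical theme back to its contributing studies makes it easier to show how far an interpretation goes beyond the primary data.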

A good example of how to conduct a thematic analysis in a systematic review is the following journal article by Jørgensen et al. (2018) on cancer patients. In it, the authors go through the process of:

(a) identifying and coding information about the selected studies' methodologies and findings on patient care

(b) organising these codes into subheadings and descriptive categories

(c) developing these categories into analytical themes

Jørgensen, C. R., Thomsen, T. G., Ross, L., Dietz, S. M., Therkildsen, S., Groenvold, M., Rasmussen, C. L., & Johnsen, A. T. (2018). What facilitates “patient empowerment” in cancer patients during follow-up: A qualitative systematic review of the literature. Qualitative Health Research, 28(2), 292-304. https://doi.org/10.1177/1049732317721477

Thomas, J., & Harden, A. (2008). Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Medical Research Methodology, 8(1), 45-54. https://doi.org/10.1186/1471-2288-8-45


- Last Updated: Dec 18, 2024 5:08 PM

- URL: https://rmit.libguides.com/systematicreviews

Library Services

UCL LIBRARY SERVICES


Synthesis and systematic maps


Types of synthesis


Synthesis is the process of combining the findings of research studies. A synthesis is also the product and output of the combined studies. This output may be a written narrative, a table, or graphical plots, including statistical meta-analysis. The process of combining studies and the way the output is reported varies according to the research question of the review.

In primary research there are many research questions and many different methods to address them. The same is true of systematic reviews. Two common and different types of review are those asking about the evidence of impact (effectiveness) of an intervention and those asking about ways of understanding a social phenomenon.

If a systematic review question is about the effectiveness of an intervention, then the included studies are likely to be experimental studies that test whether an intervention is effective or not. These studies report evidence of the relative effect of an intervention compared to control conditions.

A synthesis of these types of studies aggregates the findings of the studies together. This produces an overall measure of effect of the intervention (after taking into account the sample sizes of the studies). This is a type of quantitative synthesis that is testing a hypothesis (that an intervention is effective) and the review methods are described in advance (using a deductive a priori paradigm).
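One common way to aggregate study findings into an overall measure of effect is inverse-variance weighting, in which larger (more precise) studies count for more. The sketch below assumes that approach and a fixed-effect model; the guide itself does not name a specific method, and the effect sizes and standard errors are invented for illustration.

```python
import math


def pooled_effect(effects, std_errors):
    """Fixed-effect (inverse-variance) pooled estimate and its standard error."""
    # A smaller standard error (typically a larger sample) gives a larger weight.
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    estimate = sum(w * e for w, e in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)


# Hypothetical effect sizes and standard errors from three studies.
effects = [0.30, 0.10, 0.25]
std_errors = [0.20, 0.10, 0.05]

estimate, se = pooled_effect(effects, std_errors)
lower, upper = estimate - 1.96 * se, estimate + 1.96 * se
print(f"pooled effect = {estimate:.3f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

The pooled estimate sits closest to the third study because that study has the smallest standard error and therefore the largest weight.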

- Ongoing developments in meta-analytic and quantitative synthesis methods: Broadening the types of research questions that can be addressed O'Mara-Eves, A. and Thomas, J. (2016). This paper discusses different types of quantitative synthesis in education research.

If a systematic review question is about ways of understanding a social phenomenon, the review iteratively analyses the findings of studies to develop overarching concepts, theories or themes. The included studies are likely to provide theories, concepts or insights about a phenomenon. This might, for example, be studies trying to explain why patients do not always take the medicines provided to them by doctors.

A synthesis of these types of studies is an arrangement or configuration of the concepts from individual studies. It provides overall ‘meta’ concepts to help understand the phenomenon under study. This type of qualitative or conceptual synthesis is more exploratory and some of the detailed methods may develop during the process of the review (using an inductive iterative paradigm).

- Methods for the synthesis of qualitative research: a critical review Barnett-Page and Thomas, (2009). This paper summarises some of the different approaches to qualitative synthesis.

There are also multi-component reviews that ask broad questions with sub-questions using different review methods.

- Teenage pregnancy and social disadvantage: systematic review integrating controlled trials and qualitative studies. Harden et al (2009). An example of a review that combines two types of synthesis. It develops: 1) a statistical meta-analysis of controlled trials on interventions for early parenthood; and 2) a thematic synthesis of qualitative studies of young people's views of early parenthood.

Systematic evidence maps

Systematic evidence maps are a product that describes the nature of research in an area. This is in contrast to a synthesis, which uses research findings to make a statement about an evidence base. A 'systematic map' can both explain what has been studied and also indicate what has not been studied and where there are gaps in the research (gap maps). They can be useful to compare trends and differences across sets of studies.

Systematic maps can be a standalone finished product of research, without a synthesis, or may also be a component of a systematic review that will synthesise studies.

A systematic map can help to plan a synthesis. It may be that the map shows that the studies to be synthesised are very different from each other, and it may be more appropriate to use a subset of the studies. Where a subset of studies is used in the synthesis, the review question and the boundaries of the review will need to be narrowed in order to provide a rigorous approach for selecting the sub-set of studies from the map. The studies in the map that are not synthesised can help with interpreting the synthesis and drawing conclusions. Please note that, confusingly, the term 'scoping review' is sometimes used by people to describe systematic evidence maps and at other times to refer to reviews that are quick, selective scopes of the nature and size of literature in an area.

A systematic map may be published in different formats, such as a written report or database. Increasingly, maps are published as databases with interactive visualisations to enable the user to investigate and visualise different parts of the map. Living systematic maps are regularly updated so the evidence stays current.
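The idea of a map that shows both what has been studied and where the gaps are can be sketched as a simple cross-tabulation. The Python below is a minimal illustration under assumed data: the study identifiers, intervention names and outcome names are all hypothetical, and a real map would normally code studies on many more dimensions.

```python
from collections import Counter
from itertools import product

# Hypothetical coded studies: (study id, intervention, outcome).
STUDIES = [
    ("S1", "Training", "Employment"),
    ("S2", "Training", "Income"),
    ("S3", "Childcare", "Employment"),
    ("S4", "Training", "Employment"),
]


def evidence_map(studies):
    """Count studies in each (intervention, outcome) cell of the map."""
    return Counter((intervention, outcome) for _id, intervention, outcome in studies)


def gaps(studies, interventions, outcomes):
    """List the cells of the framework that contain no studies (a 'gap map')."""
    counts = evidence_map(studies)
    return [cell for cell in product(interventions, outcomes) if counts[cell] == 0]


interventions = ["Training", "Childcare", "Cash transfer"]
outcomes = ["Employment", "Income"]

for cell, count in sorted(evidence_map(STUDIES).items()):
    print(cell, count)
print("Gaps:", gaps(STUDIES, interventions, outcomes))
```

Note that the framework of interventions and outcomes is defined separately from the studies, so that categories with no evidence at all still appear as gaps.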

Some examples of different maps are shown here:

- Women in Wage Labour: An evidence map of what works to increase female wage labour market participation in LMICs Example of a systematic evidence map from the Africa Centre for Evidence.

- Acceptability and uptake of vaccines: Rapid map of systematic reviews Example of a map of systematic reviews.

- COVID-19: a living systematic map of the evidence Example of a living map of health research on COVID-19.

Meta-analysis

- What is a meta-analysis? Helpful resource from the University of Nottingham.

- MetaLight: software for teaching and learning meta-analysis Software tool that can help in learning about meta-analysis.

- KTDRR Research Evidence Training: An Overview of Effect Sizes and Meta-analysis Webcast video (56 mins). Overview of effect sizes and meta-analysis.


- Last Updated: Dec 18, 2024 2:33 PM

- URL: https://library-guides.ucl.ac.uk/systematic-reviews

A Guide to Evidence Synthesis: 11. Synthesize, Map, or Describe the Results


Synthesizing, Mapping, or Describing the Results


In the data synthesis section, you need to present the main findings of your evidence synthesis. As an evidence synthesis summarizes existing research, there are a number of ways in which you can synthesize results from your included studies.

If the studies you have included in your evidence synthesis are sufficiently similar, or in other words homogeneous, you can synthesize the data from these studies using a process called “meta-analysis”. As the name suggests, a meta-analysis uses a statistical approach to bring together results from multiple studies. There are many advantages to undertaking a meta-analysis. Cornell researchers can consult with the Cornell University Statistical Consulting Unit or attend a meta-analysis workshop for more information on this quantitative analysis.

If the studies you have included in your evidence synthesis are not similar (e.g. you have included different research designs due to diversity in the evidence base), then a meta-analysis is not possible. In this instance, you can synthesize the data from these studies using a process called “narrative or descriptive synthesis”.
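Whether studies are similar enough to pool is partly a judgement about designs, populations and outcomes, but the statistical side of that question is often examined with Cochran's Q and the I² statistic. The sketch below computes both under a fixed-effect, inverse-variance assumption; these statistics are not named in the guide itself, and the numbers are invented for illustration.

```python
def heterogeneity(effects, std_errors):
    """Return Cochran's Q and the I-squared statistic (as a percentage)."""
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    # Q is the weighted sum of squared deviations from the pooled estimate.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    # I-squared: share of total variation beyond what chance alone would produce.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i_squared


# Hypothetical sets of studies, each with a standard error of 0.1.
similar = heterogeneity([0.20, 0.22, 0.21], [0.1, 0.1, 0.1])
dissimilar = heterogeneity([-0.50, 0.10, 0.80], [0.1, 0.1, 0.1])

print(f"similar studies:    Q = {similar[0]:.2f}, I^2 = {similar[1]:.1f}%")
print(f"dissimilar studies: Q = {dissimilar[0]:.2f}, I^2 = {dissimilar[1]:.1f}%")
```

A larger I² suggests more variation between study results than chance alone would explain, which is one signal that a narrative or descriptive synthesis may be more appropriate than pooling.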

A word of caution here – while the process underpinning meta-analysis is well established and standardized, the process underpinning narrative or descriptive synthesis is subjective and there is no one standard process for undertaking this.

In recent times, evidence syntheses of qualitative research have been gaining popularity. Data synthesis in these studies may be termed “meta-synthesis”. As with narrative or descriptive synthesis, there are a myriad of approaches to meta-synthesis.

(The above content courtesy of University of South Australia Library.)

Regardless of whether an evidence synthesis presents qualitative or quantitative information, reporting out using the PRISMA flow diagram is recommended. The PRISMA website and its many adaptations can be very helpful in understanding components of systematic reviews, meta-analyses and related evidence synthesis methods.

Librarians can help write the methods section of your review for publication, to ensure clarity and transparency of the search process. However, we encourage evidence synthesis teams to engage statisticians to carry out their data syntheses.


- Last Updated: Dec 12, 2024 10:31 AM

- URL: https://guides.library.cornell.edu/evidence-synthesis


Systematic Reviews

7) Synthesis and Summary

Once you have selected the most reliable and relevant studies, you will need to combine all the findings of the individual studies using textual or statistical methods. The purpose of the synthesis is to answer your research question.

Synthesis involves pooling the extracted data from the included studies and summarizing the findings based on the overall strength of the evidence and consistency of observed effects.

Depending on your review type and objectives, forms of synthesis may include one or more of the following:

- Meta-analysis

- Qualitative

- Integrative

The synthesis method should be identified in your protocol.

Summary of Findings/Interpreting Results

After completing the steps of the review process, you can interpret the findings from the data synthesis, draw conclusions, recommend areas for further research, and write up your review.


- Last Updated: Nov 25, 2024 1:57 PM

- URL: https://hslguides.med.nyu.edu/systematicreviews


Synthesising the data

Synthesis is a stage in the systematic review process where extracted data, that is the findings of individual studies, are combined and evaluated.

The general purpose of extracting and synthesising data is to show the outcomes and effects of various studies, and to identify issues with methodology and quality. This means that your synthesis might reveal several elements, including:

- overall level of evidence

- the degree of consistency in the findings

- what the positive effects of a drug or treatment are, and what these effects are based on

- how many studies found a relationship or association between two components, e.g. the impact of disability-assistance animals on the psychological health of workplaces

There are two commonly accepted methods of synthesis in systematic reviews:

- Qualitative data synthesis

- Quantitative data synthesis (i.e. meta-analysis)

The way the data is extracted from your studies, then synthesised and presented, depends on the type of data being handled.

In a qualitative systematic review, data can be presented in a number of different ways. A typical procedure in the health sciences is thematic analysis.

Thematic synthesis has three stages:

- the coding of text ‘line-by-line’

- the development of ‘descriptive themes’

- and the generation of ‘analytical themes’

If you have qualitative information, some of the more common tools used to summarise data include:

- textual descriptions, i.e. written words

- thematic or content analysis

Example qualitative systematic review

A good example of how to conduct a thematic analysis in a systematic review is the following journal article on cancer patients. In it, the authors go through the process of:

- identifying and coding information about the selected studies’ methodologies and findings on patient care

- organising these codes into subheadings and descriptive categories

- developing these categories into analytical themes

What Facilitates “Patient Empowerment” in Cancer Patients During Follow-Up: A Qualitative Systematic Review of the Literature

Quantitative data synthesis

In a quantitative systematic review, data is presented statistically. Typically, this is referred to as a meta-analysis.

The usual method is to combine and evaluate data from multiple studies. This is normally done in order to draw conclusions about outcomes, effects, shortcomings of studies and/or applicability of findings.

Remember, the data you synthesise should relate to your research question and protocol (plan). In the case of quantitative analysis, the data extracted and synthesised will relate to whatever method was used to generate the research question (e.g. PICO method), and whatever quality appraisals were undertaken in the analysis stage.

If you have quantitative information, some of the more common tools used to summarise data include:

- grouping of similar data, i.e. presenting the results in tables

- charts, e.g. pie-charts

- graphical displays, e.g. forest plots

Example of a quantitative systematic review

A quantitative systematic review that pools the results of its included studies statistically is usually referred to as a meta-analysis.

Effectiveness of Acupuncturing at the Sphenopalatine Ganglion Acupoint Alone for Treatment of Allergic Rhinitis: A Systematic Review and Meta-Analysis

About meta-analyses

A systematic review may sometimes include a meta-analysis, although it is not a requirement of a systematic review. A meta-analysis, by contrast, is normally carried out as part of a systematic review.

A meta-analysis is a statistical analysis that combines data from previous studies to calculate an overall result.

One way of accurately representing all the data is in the form of a forest plot. A forest plot combines the results of multiple studies in a single display, showing the point estimates arising from different studies of the same condition or treatment.

It comprises a graphical display and, often, a table. The graphical display shows the mean value (the point estimate) for each study, usually with a confidence interval (the horizontal bars). Each mean is plotted relative to the vertical line of no difference.

The following is an example of the graphical representation of a forest plot.

“File:The effect of zinc acetate lozenges on the duration of the common cold.svg” by Harri Hemilä is licensed under CC BY 3.0

Watch the following short video where a social health example is used to explain how to construct a forest plot graphic.


Forest Plots – Understanding a Meta-Analysis in 5 Minutes or Less (5:38 min) by The NCCMT ( YouTube )


Research and Writing Skills for Academic and Graduate Researchers Copyright © 2022 by RMIT University is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License , except where otherwise noted.


Good review practice: a researcher guide to systematic review methodology in the sciences of food and health


Data synthesis and summary


Data synthesis includes synthesising the findings of primary studies and when possible or appropriate some forms of statistical analysis of numerical data. Synthesis methods vary depending on the nature of the evidence (e.g., quantitative, qualitative, or mixed), the aim of the review and the study types and designs. Reviewers have to decide and preselect a method of analysis based on the review question at the protocol development stage.

Synthesis Methods

Narrative summary: a summary of the review results when meta-analysis is not possible. Narrative summaries describe the results of the review, but some can take a more interpretive approach in summarising the results. [8] These are known as "evidence statements" and can include the results of quality appraisal and weighting processes and provide the ratings of the studies.

Meta-analysis: a quantitative synthesis of the results from included studies using statistical analysis methods that are extensions of those used in primary studies. [9] Meta-analysis can provide a more precise estimate of the outcomes by measuring and accounting for the uncertainty of outcomes from individual studies by means of statistical methods. However, it is not always feasible to conduct statistical analyses, for reasons including inadequate data, heterogeneous data, poor quality of included studies and the level of complexity. [10]
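As a hedged illustration of why pooling can give a more precise estimate, consider the widely used inverse-variance (fixed-effect) approach. It is one of several possible methods and is an assumption of this sketch rather than something this guide prescribes. The symbols $\hat{\theta}_i$ (the estimate from study $i$), $SE_i$ (its standard error) and $k$ (the number of studies) are introduced here only for illustration.

$$
w_i = \frac{1}{SE_i^{2}}, \qquad
\hat{\theta} = \frac{\sum_{i=1}^{k} w_i \hat{\theta}_i}{\sum_{i=1}^{k} w_i}, \qquad
SE(\hat{\theta}) = \frac{1}{\sqrt{\sum_{i=1}^{k} w_i}}
$$

Because every weight is positive, $\sum_{i} w_i \ge w_j$ for each study $j$, so $SE(\hat{\theta}) \le SE_j$: under this model the pooled estimate is never less precise than the most precise single study. For example, two studies that each have $SE_i = 0.2$ give $w_i = 25$ and $SE(\hat{\theta}) = 1/\sqrt{50} \approx 0.14$.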

Qualitative Data Synthesis (QDS): a method of identifying common themes across qualitative studies to create a greater degree of conceptual development than narrative reviews. The key concepts are identified through a process that begins with the interpretations of the primary findings as reported to researchers, which the researchers then interpret into their own views of the meaning (second-order interpretation), and which reviewers finally interpret into explanations and hypotheses. [11]

Mixed methods synthesis: is an advanced method of data synthesis developed by EPPI-Centre to better understand the meanings of quantitative studies by conducting a parallel review of user evaluations to traditional systematic reviews and combining the findings of the syntheses to identify and provide clear directions in practice. [11]


- Last Updated: May 17, 2024 6:08 PM

- URL: https://ifis.libguides.com/systematic_reviews


Establishing confidence in the output of qualitative research synthesis: the ConQual approach

Zachary Munn, Kylie Porritt, Craig Lockwood, Edoardo Aromataris, Alan Pearson


Received 2013 Dec 3; Accepted 2014 Sep 10; Collection date 2014.

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly credited. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

The importance of findings derived from syntheses of qualitative research has been increasingly acknowledged. Findings that arise from qualitative syntheses inform questions of practice and policy in their own right and are commonly used to complement findings from quantitative research syntheses. The GRADE approach has been widely adopted by international organisations to rate the quality and confidence of the findings of quantitative systematic reviews. To date, there has been no widely accepted corresponding approach to assist health care professionals and policy makers in establishing confidence in the synthesised findings of qualitative systematic reviews.

A methodological group was formed to develop a process to assess the confidence in synthesised qualitative research findings and to develop a Summary of Findings table for meta-aggregative qualitative systematic reviews.

Dependability and credibility are two elements considered by the methodological group to influence the confidence of qualitative synthesised findings. A set of critical appraisal questions are proposed to establish dependability, whilst credibility can be ranked according to the goodness of fit between the author’s interpretation and the original data. By following the processes outlined in this article, an overall ranking can be assigned to rate the confidence of synthesised qualitative findings, a system we have labelled ConQual.

Conclusions

The development and use of the ConQual approach will assist users of qualitative systematic reviews to establish confidence in the evidence produced in these types of reviews and can serve as a practical tool to assist in decision making.

Keywords: Qualitative systematic reviews, Confidence, Credibility, Summary of findings, Meta-aggregation

Across the health professions, the impetus to practice evidence-based healthcare has increased exponentially since the term ‘Evidence-Based Medicine’ was first coined in 1992 [ 1 ]. Evidence-based practice has been defined as ‘…the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients,…’ [ 2 ]. Rigorously conducted systematic reviews of the evidence are viewed as the pillar on which evidence-based healthcare rests and uniquely provide this foundation role by presenting health professionals with a comprehensive synthesis of the extant literature on a certain healthcare topic [ 2 – 4 ]. Evidence-based organisations such as the Cochrane Collaboration and the Joanna Briggs Institute (both established in the 1990s) were formed to develop methodologies and standards for the conduct of systematic reviews to inform decision making in healthcare [ 5 – 8 ]. Historically, systematic reviews have predominantly been undertaken to address questions regarding the effectiveness of interventions used in healthcare and therefore have required the analysis and synthesis of quantitative evidence. However, qualitative systematic reviews that bring together the findings of multiple, original qualitative studies, also have an important role in evidence-based healthcare. Qualitative systematic reviews can address questions to inform healthcare professionals about issues that cannot be answered with quantitative research and data [ 6 , 8 , 9 ].

Findings derived from qualitative research are increasingly acknowledged as important not only to accompany and support quantitative research findings to inform questions of practice and policy, but also to answer questions in their own right [ 10 ]. Qualitative systematic reviews aim to maximise the understanding of a wide range of healthcare issues that cannot be measured quantitatively; for example, they can inform understandings of how individuals and communities perceive health, manage their health and make decisions related to health service usage. Furthermore, syntheses of qualitative research can increase our understandings of the culture of communities, explore how service users experience illness and the health system, and evaluate components and activities of health services such as health promotion and community development.

Despite the increasing recognition of the importance of qualitative research to inform decision making in healthcare, historically the systematic review of qualitative research (or qualitative evidence/research synthesis) has been a highly contested topic. The debate regarding whether or not qualitative research can and should be synthesised has largely sided in support of synthesis. Despite this, there is still no international consensus on how to approach the combination of the findings of qualitative studies. This is evidenced by the numerous methodologies now available to incorporate and synthesise findings from qualitative research, [ 10 ] including for example meta-aggregation, meta-ethnography, realist synthesis, qualitative research synthesis and grounded theory, amongst others [ 5 , 8 , 11 , 12 ].

Meta-aggregation has been established as a methodology for qualitative research synthesis for over a decade and was initially developed by a group led by Pearson in the early 2000s [ 6 , 7 , 13 ]. An underlying premise adopted by this meta-aggregative development group was that systematic reviews should be conducted in the same fashion regardless of the type of evidence that the question posed demands. The well-established steps in the systematic review process were then tailored to qualitative evidence to develop meta-aggregation as a method of synthesis following the same principles of systematic reviews of effectiveness, whilst being sensitive to the contextual nature of qualitative research and its traditions. The meta-aggregative method has been explicitly aligned with the philosophy of pragmatism in order to deliver readily usable synthesised findings to inform decision making at the clinical or policy level [ 10 ]. As a result, the meta-aggregative approach to qualitative synthesis is particularly suited for reviewers attempting to answer a specific question about healthcare practice or summarising a range of views regarding interventions or health issues [ 12 ]. This is in contrast to other recognised approaches to qualitative synthesis, such as meta-ethnography, which aim to develop explanatory theories or models [ 12 ]. There are now numerous examples of meta-aggregative systematic reviews available along with detailed guidance on how to conduct this type of systematic review [ 6 – 8 , 14 , 15 ].

There have been many methodological developments aimed at improving the conduct and reporting of systematic reviews since they were first introduced. Recently, multiple international organisations that conduct systematic reviews have endorsed the recommendations from the GRADE (Grading of Recommendations Assessment, Development and Evaluation) working group. The GRADE working group has developed a systematic process to establish and present the confidence in the synthesised results of quantitative research through considering issues related to study design, risk of bias, publication bias, inconsistency, indirectness, imprecision of evidence, effect sizes, dose–response relationships and confounders of findings [ 16 ]. The outcome of this approach is a GRADE score, labelled as High, Moderate, Low or Very Low, which represents the level of confidence in the synthesised findings. This score is then applied to the major results of a quantitative systematic review. Key findings and important supporting information are presented in a ‘Summary of Findings’ table (or evidence profile). The ‘Summary of Findings’ table has been shown to improve the understanding and the accessibility of results of systematic reviews [ 17 – 19 ].

The GRADE approach has been widely adopted by international organisations in the conduct of quantitative systematic reviews. However, to date, there has been no widely accepted approach to assist health care professionals and policy makers in establishing confidence in the synthesised findings of qualitative systematic reviews. In light of these developments to the quantitative systematic review process, at the beginning of 2013 a methodological group was formed to consider the meta-aggregative review process specifically with the directive to detail means of establishing confidence in the findings of qualitative systematic reviews and presentation of a Summary of Findings table. The results of these discussions and the newly proposed methodology for meta-aggregative systematic reviews are presented here for consideration and promotion of further debate.

The aim of the methodological group was twofold. Firstly, to investigate whether a system to assess the confidence in synthesised qualitative research findings using meta-aggregation could be established. Secondly, to determine and develop explicit guidance for a Summary of Findings table of qualitative systematic reviews undertaken using a meta-aggregative approach.

The working group established comprised researchers from the Joanna Briggs Institute in Adelaide, Australia, all with experience in conducting meta-aggregative reviews. Two of the authors of this paper were involved in the development of meta-aggregation as a method for qualitative research synthesis (AP and KP). Two of the authors have also been involved with the Cochrane Qualitative Research Methods group (AP and CL).

The working group met monthly to discuss, define and determine what confidence means in terms of synthesised qualitative findings, to create a format for a ‘Summary of Findings’ table including the degree of confidence for a qualitative synthesised finding and to test and refine the newly developed methods. Consensus was reached through discussion and testing. A Delphi-like process was initiated to further refine the tool. In August 2013 the newly proposed methodology was presented to the international members of the Scientific Committee of the Joanna Briggs Institute for further consideration, discussion and ultimately, approval. Following this, it was ratified at an Institute board meeting. In October 2013 the methodology was presented in two workshops during the Joanna Briggs Institute Convention. These workshops allowed international colleagues an opportunity to provide critique and feedback on the process that had been devised. In addition, the methodology was presented to the Joanna Briggs Institute Committee of Directors, comprising over 90 international experts in research synthesis from over 20 countries for further discussion and feedback. Many of the attendees were well-versed in qualitative research synthesis and particularly the meta-aggregative approach, and were therefore seen as the ideal audience to provide feedback and critique on the methodological development of the tool. A cyclic process of feedback and review was used at all stages of the development process. The proposed methodology has been labelled ‘ConQual’. With this process now completed the group believes the methodology requires publishing for further feedback and critique from the international community of reviewers.

Results and discussion

Meta-aggregation is a pragmatic approach to synthesis and therefore is ‘interested in how practical and useful the findings are’ [ 14 ] (p.1030). One way to improve the usefulness of the findings of a qualitative systematic review is to undertake a process to establish the confidence (defined as the belief, or trust, that a person can place in the results of the research) of these findings. Establishing confidence is of particular interest when conducting meta-aggregative synthesis as the output of this type of synthesis is ideally ‘lines of action’, which can be considered on an individual and a community level [ 10 ]. Furthermore, being explicit regarding the believability and trustworthiness of findings should be viewed as essential information for policymakers and others when considering any research findings to inform decisions in healthcare.

The working group began by considering the factors that increase or decrease the confidence in the synthesised findings of a qualitative synthesis. In the GRADE approach for quantitative research, these factors are the risk of bias, publication bias, inconsistency, indirectness, imprecision of evidence, effect size, dose–response relationships and confounders of findings [ 16 ].

After extensive debate it was agreed that there were two main elements that increased the confidence in the findings: their dependability and credibility as originally defined by Guba and Lincoln [ 20 ]. The group’s view of ‘confidence’ is similar (but not identical) to Guba and Lincoln’s ‘truth value’ of the findings of a particular inquiry [ 20 ]. The group defined ‘confidence’ as the belief, or trust, that a person can place in the results of research. Although Guba and Lincoln [ 20 ] mention other concepts (such as transferability and confirmability), it was the view of the group that these did not explicitly align with their notion of ‘confidence (i.e. truth value)’, and were more aligned to the concepts of ‘applicability’ and ‘neutrality’. The meta-aggregative approach currently incorporates methods to assess dependability and credibility and therefore there was an added practicality when deciding upon these two elements. It is worth noting, however, that in the appraisal of the dependability of the research, issues of confirmability are also addressed and these are discussed further below.

The concepts of ‘dependability’ and ‘credibility’ are analogous to the ideas of ‘reliability’ and ‘internal validity’ in quantitative research. Credibility evaluates whether there is a ‘fit’ between the author’s interpretation and the original source data [ 21 ]. The concept of dependability is aligned with that of reliability in the rationalist paradigm [ 20 ], and ‘implies trackable variability, that is, variability that can be ascribed to identified sources’ [ 22 ]. Dependability can be established if the research process is logical (i.e. are the methods suitable to answer the research question, and are they in line with the chosen methodology), traceable and clearly documented.

Determining dependability and credibility was the next challenge. The critical appraisal of qualitative research in the meta-aggregative review process was viewed as a way to assist in assessing dependability. Debate and differences of opinion continue to exist regarding the virtue of critical appraisal, or evaluation of the methodological quality of qualitative studies [ 6 , 23 , 24 ]. Amongst the many different methodologies that exist for the synthesis of qualitative findings, some demand critical appraisal whilst others do not [ 6 , 25 , 26 ].

In the meta-aggregative approach outlined by the Joanna Briggs Institute, critical appraisal is regarded as a pivotal part of the qualitative systematic review process. Critical appraisal can inform reviewers on which studies to include and can establish the quality and congruency of findings in included studies that may be used to inform healthcare practice [ 8 ]. In meta-aggregation the resultant synthesis is directly linked to all included studies. Therefore, the critical appraisal of primary studies and their subsequent inclusion or exclusion directly impacts the quality of the meta-synthesis [ 14 ].

In the meta-aggregative approach all studies included in the review are subject to a process of critical appraisal using the JBI- Qualitative Assessment and Review Instrument (JBI-QARI). This tool has been evaluated with two other critical appraisal tools for qualitative research in a comparative analysis with the authors concluding that the JBI-QARI tool was the most coherent [ 27 ].

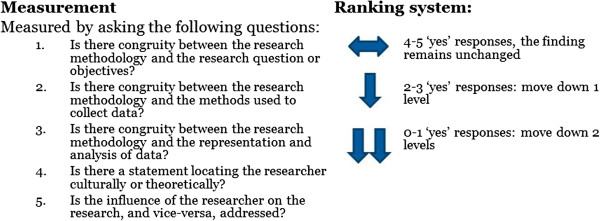

Five questions of this checklist [ 27 ] were viewed as specifically relating to the concept of dependability. These are:

Is there congruity between the research methodology and the research question or objectives?

Is there congruity between the research methodology and the methods used to collect data?

Is there congruity between the research methodology and the representation and analysis of data?

Is there a statement locating the researcher culturally or theoretically?

Is the influence of the researcher on the research, and vice-versa, addressed?

It is proposed that dependability of the qualitative research study can be established by assessing the studies in the review using the above criteria. While it is not explicitly stated that ‘confirmability’ is being assessed, in addition to credibility and dependability, these five questions also address issues of confirmability, clearly encompassing ‘reasons for formulating the study in a particular way, and implicit assumptions, biases, or prejudices’ [ 20 ] (p. 248).

The next challenge was to determine an appropriate way to establish credibility. Unlike the focus of critical appraisal commonly performed as part of the systematic review process, when assessing credibility of the findings, the focus was not on the full research undertaking, but shifted to the results of the authors’ interpretive analysis, more commonly referred to as ‘findings’ in the literature [ 9 , 28 ]. Although various definitions exist for what a finding is in qualitative research, in meta-aggregation, findings are defined as a verbatim extract of the author’s analytic interpretation accompanied by a participant voice, fieldwork observations or other data. The credibility of the finding can be established by assessing the congruency between the author’s interpretation and the supporting data. Each finding extracted from a research report can therefore be evaluated with a level of credibility based on the following ranking scale:

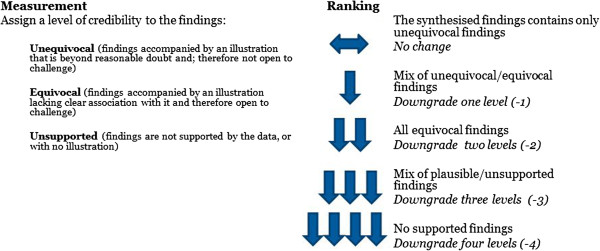

Unequivocal (findings accompanied by an illustration that is beyond reasonable doubt and therefore not open to challenge).

Equivocal (findings accompanied by an illustration lacking clear association with it and therefore open to challenge).

Unsupported (findings are not supported by the data).

By following these steps a system to establish the dependability and credibility of an individual finding is possible. However, this approach does not provide an overall ranking for the final synthesised finding. The group then returned to the principles of GRADE to determine how an overall ranking might be addressed. Within GRADE, studies are given a pre-ranking of high (for randomised controlled trials) or low (for observational studies). It was the view of the group that distinguishing between different qualitative study designs, for example, a phenomenological study or an ethnographic study, via a hierarchy was not appropriate for qualitative studies; therefore, it was decided that all qualitative research studies start with a ranking of ‘High’ on a scale of High, Moderate, Low and Very Low.

This ranking system then allows the findings of individual studies to be downgraded based on their dependability and credibility. Downgrading for dependability may occur when the five criteria for dependability are not met across the included studies (Figure 1 ). Where four to five of the responses to these questions are yes for an individual finding, then the finding will remain at its current level. If two to three of these responses are yes, it will move down one level (i.e. from High to Moderate). If zero to one of these responses are yes, it will move down two levels (from High to Low, or Moderate to Very Low). The synthesised finding may then be downgraded based on the aggregate level of dependability from across the included findings. For example, if the majority of individual findings have a ‘low’ level of dependability, this designation should then apply to the resultant synthesised finding.

Figure 1. Ranking for dependability. This figure represents how a score for dependability is developed during the ConQual process, and is based on the response to 5 critical appraisal questions.
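The dependability rule described above can be sketched in code. This is a minimal illustration rather than part of the published ConQual method: the function names are hypothetical, and the way per-finding results are aggregated (the most common downgrade, with ties resolved towards the larger downgrade) is an assumption, since the text only states that the majority designation should apply.

```python
from collections import Counter


def dependability_downgrade(yes_count: int) -> int:
    """Return how many levels a finding moves down, given the number of
    'yes' answers to the five dependability questions."""
    if not 0 <= yes_count <= 5:
        raise ValueError("yes_count must be between 0 and 5")
    if yes_count >= 4:   # four to five 'yes': stays at its current level
        return 0
    if yes_count >= 2:   # two to three 'yes': down one level
        return 1
    return 2             # zero to one 'yes': down two levels


def aggregate_dependability_downgrade(yes_counts: list[int]) -> int:
    """Assumed aggregation rule (not specified in detail by the text):
    use the most common per-finding downgrade; on a tie, use the larger one."""
    if not yes_counts:
        raise ValueError("at least one finding is required")
    tally = Counter(dependability_downgrade(n) for n in yes_counts)
    highest = max(tally.values())
    return max(d for d, count in tally.items() if count == highest)
```

For instance, three findings with 5, 3 and 3 'yes' answers would give an aggregate downgrade of one level under these assumptions.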

Downgrading for credibility may occur when not all the findings included in a synthesised finding are considered unequivocal (Figure 2 ). For a mix of unequivocal/equivocal findings, the synthesised finding can be downgraded one level (-1). For equivocal findings, it can be downgraded two levels (-2). For equivocal/unsupported findings, it can be downgraded three levels (-3), and for unsupported findings, it can be downgraded four levels (-4).

Figure 2. Ranking for credibility. This figure represents how a score for credibility is developed during the ConQual process, and is based on the congruency of the author’s interpretation and the supporting data.
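The credibility rule can be expressed in the same way. The sketch below is illustrative only: the `Credibility` enum and the function name are hypothetical, and combinations that the text does not cover (for example unequivocal findings mixed with unsupported ones) raise an error rather than guess at a value.

```python
from enum import Enum


class Credibility(Enum):
    UNEQUIVOCAL = "unequivocal"
    EQUIVOCAL = "equivocal"
    UNSUPPORTED = "unsupported"


def credibility_downgrade(findings: list[Credibility]) -> int:
    """Return the number of levels to downgrade a synthesised finding,
    based on the mix of credibility levels among its findings."""
    kinds = set(findings)
    if not kinds:
        raise ValueError("at least one finding is required")
    U, E, N = Credibility.UNEQUIVOCAL, Credibility.EQUIVOCAL, Credibility.UNSUPPORTED
    if kinds == {U}:
        return 0  # all unequivocal: no downgrade
    if kinds == {U, E}:
        return 1  # mix of unequivocal and equivocal
    if kinds == {E}:
        return 2  # all equivocal
    if kinds == {E, N}:
        return 3  # mix of equivocal and unsupported
    if kinds == {N}:
        return 4  # all unsupported
    # Mixes such as unequivocal + unsupported are not described in the text.
    raise ValueError("combination not covered by the described rule")
```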

The proposed system would then give an overall score of High, Moderate, Low or Very Low. This ranking can be considered a rating of confidence in the qualitative synthesised finding, which we have called ‘ConQual’.
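As a rough illustration of how the two downgrades might combine into one rating, the following sketch starts every synthesised finding at High and moves it down the four-level scale. Two points are assumptions rather than statements from the text: that the dependability and credibility downgrades are simply added together, and that the result cannot fall below Very Low. The function name is hypothetical.

```python
LEVELS = ["High", "Moderate", "Low", "Very Low"]


def conqual_score(dependability_down: int, credibility_down: int) -> str:
    """Combine two downgrade counts into a rating on the four-level scale.

    Assumptions (not stated in the text): downgrades are additive, and the
    rating is floored at 'Very Low'.
    """
    if dependability_down < 0 or credibility_down < 0:
        raise ValueError("downgrades must be non-negative")
    # All qualitative studies start at 'High' (index 0).
    index = min(dependability_down + credibility_down, len(LEVELS) - 1)
    return LEVELS[index]


print(conqual_score(1, 1))  # prints "Low" under these assumptions
```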

The second aim of the working party was to consider the use of a Summary of Findings table. It was agreed the Summary of Findings table would incorporate the key findings of the review along with the ConQual score. The Summary of Findings table includes the major elements of the review and details how the ConQual score is developed (Table 1 ). The aim of the group was to create a format for the Summary of Findings table that aligned with the structure utilised by GRADE for effectiveness reviews, while presenting all of the necessary information that a reader or policy maker would find useful. Therefore, included in the table is the title, population, phenomena of interest and context for the specific review. Each synthesised finding from the review is then presented along with the type of research informing it, a score for dependability, credibility and the overall ConQual score. The type of research column (i.e. qualitative) has been included to stress to users who are more familiar with quantitative research that this is coming from a different source. Additionally, there may be scope to apply this method to synthesised findings of other types of research, including text and opinion and discourse analysis. The Summary of Findings table has been developed to clearly convey the key findings to a reader of the review in a tabular format, with the aim of improving the accessibility and usefulness of the systematic review. This system has been trialled by the working party with a number of systematic reviews, with one example illustrated below in Table 1 .

Table 1. ConQual summary of findings example

* Downgraded one level due to common dependability issues across the included primary studies (the majority of studies had no statement locating the researcher and no acknowledgement of their influence on the research).

** Downgraded one level due to a mix of unequivocal and equivocal findings.

There is a tool similar to ConQual currently in development called CerQual. The focus of this tool is to establish how much certainty (or confidence) to place in findings from qualitative evidence syntheses [ 29 – 31 ]. Certainty is described as ‘how likely it is that the review finding happened in the contexts of the included studies and could happen elsewhere’ [ 30 ]. The elements that contribute to the overall degree of certainty are the methodological quality of the study (in one review protocol this is determined by the Critical Appraisal Skills Programme quality assessment tool) [ 30 ] and the plausibility (or coherence, established when authors are able to ‘identify a clear pattern across the data contributed by each of the individual studies’) [ 31 ] of the review finding. The methodological quality of qualitative studies is linked to their dependability (as mentioned in Glenton’s protocol), which is similar to the ConQual approach. The two tools (ConQual and CerQual) share similar characteristics in the following ways: they both aim to provide a qualitative equivalent to the GRADE approach, they both propose a final ranking, and they both assess methodological quality or dependability. However, the main point of difference is that ConQual focuses on the credibility of the findings whereas CerQual focuses on the plausibility (or coherence) [ 32 ] of the findings. Due to this difference it is reasonable to suggest that both tools can be considered during the conduct of qualitative research synthesis, with ConQual particularly suited to meta-aggregative reviews. At the time of development and reporting of the ConQual system, we were not aware of any testing being conducted using the CerQual approach for meta-aggregative reviews or the ConQual approach for other types of qualitative research synthesis. In these early stages of development it is difficult to provide concrete guidance on when to choose either the ConQual or CerQual approach. Over time it may emerge more clearly when one tool should be used in preference to the other based on their relative merits. Those researchers conducting qualitative research syntheses should carefully consider which tool will best suit their purpose.

As is to be expected with new methodologies there are some limitations to the ConQual approach. The methods detailed within this paper were developed specifically for qualitative synthesis using meta-aggregation. In principle, other qualitative research synthesis methodologies could adopt this approach (perhaps with some slight modifications), as a ConQual ranking can be generated with any approach where findings are identifiable and the credibility of findings and dependability of research are able to be assessed. As with any new methodological developments there will almost certainly be opposing views. Potential criticism of the ConQual approach may exist around the use of an ordinal scale for ranking the confidence of qualitative research. However, we argue that this approach is not only appropriate, but above all practical. The process is a further step towards assisting policymakers and healthcare professionals in incorporating the evidence into healthcare related policy and decisions. The movement towards developing and establishing confidence in the synthesised findings of qualitative systematic reviews is a relatively new concept. It is hoped that this paper will stimulate discussion thereby improving and continuing development within this area.

The explicit aim of meta-aggregative reviews is to ensure that the final synthesised finding can be used as a basis to make recommendations for healthcare practice or inform policy. It is believed that the development and use of the ConQual approach will enable users of qualitative systematic reviews to establish confidence in the evidence produced in these types of reviews and serve as a practical tool to assist in decision making.

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

ZM, KP, CL and EA were all members of the working group developing this methodology. ZM convened the working group. AP provided input and conceptual advice throughout the development of the methodology. All authors read and approved the final manuscript.

Contributor Information

Zachary Munn, Kylie Porritt, Craig Lockwood, Edoardo Aromataris, Alan Pearson.

- 1. Guyatt G, Cairns J, Churchill D, Cook D, Haynes B, Hirsh J, Irvine J, Levine M, Levine M, Nishikawa J, Sackett D, Brill-Edwards P, Gerstein H, Gibson J, Jaeschke R, Kerigan A, Neville A, Panju A, Detsky A, Enkin M, Frid P, Gerrity M, Laupacis A, Lawrence V, Menard J, Moyer V, Mulrow C, Links P, Oxman A, Sinclair J, Tugwell P. Evidence-based medicine. A new approach to teaching the practice of medicine. JAMA. 1992;268:2420–2425. doi: 10.1001/jama.1992.03490170092032.

- 2. Sackett D, Rosenberg W, Gray J, Haynes R, Richardson W. Evidence based medicine: what it is and what it isn't. BMJ. 1996;312:71–72. doi: 10.1136/bmj.312.7023.71.

- 3. Pearson A, Wiechula R, Court A, Lockwood C. The JBI model of evidence-based healthcare. Int J Evid Base Healthc. 2005;3:207–215. doi: 10.1111/j.1479-6988.2005.00026.x.

- 4. Marshall G, Sykes AE. Systematic reviews: a guide for radiographers and other health care professionals. Radiography. 2010.

- 5. Noyes J, Popay J, Pearson A, Hannes K, Booth A. Qualitative research and Cochrane reviews. In: Higgins J, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions. Version 5.1.0 [updated March 2011]. 2011.

- 6. Pearson A. Balancing the evidence: incorporating the synthesis of qualitative data into systematic reviews. JBI Reports. 2004;2:45–64. doi: 10.1111/j.1479-6988.2004.00008.x.

- 7. Pearson A, Jordan Z, Munn Z. Translational science and evidence-based healthcare: a clarification and reconceptualization of how knowledge is generated and used in healthcare. Nurs Res Pract. 2012;2012:792519. doi: 10.1155/2012/792519.

- 8. The Joanna Briggs Institute. Joanna Briggs Institute Reviewers’ Manual: 2011 edition. Adelaide: The Joanna Briggs Institute; 2011.

- 9. Sandelowski M, Leeman J. Writing usable qualitative health research findings. Qual Health Res. 2012;22:1404–1413. doi: 10.1177/1049732312450368.

- 10. Hannes K, Lockwood C. Pragmatism as the philosophical foundation for the Joanna Briggs meta-aggregative approach to qualitative evidence synthesis. J Adv Nurs. 2011;67:1632–1642. doi: 10.1111/j.1365-2648.2011.05636.x.

- 11. Thorne S, Jensen L, Kearney MH, Noblit G, Sandelowski M. Qualitative metasynthesis: reflections on methodological orientation and ideological agenda. Qual Health Res. 2004;14:1342–1365. doi: 10.1177/1049732304269888.

- 12. Noyes J, Lewin S. Supplemental Guidance on Selecting a Method of Qualitative Evidence Synthesis, and Integrating Qualitative Evidence with Cochrane Intervention Reviews. In: Noyes J, Booth A, Hannes K, Harden A, Harries J, Lewin S, Lockwood C, editors. Supplementary Guidance for Inclusion of Qualitative Research in Cochrane Systematic Reviews of Interventions. 2011.

- 13. Munn Z, Kavanagh S, Lockwood C, Pearson A, Wood F. The development of an evidence based resource for burns care. Burns. 2013;39:577–582. doi: 10.1016/j.burns.2012.11.005.

- 14. Korhonen A, Hakulinen-Viitanen T, Jylhä V, Holopainen A. Meta-synthesis and evidence-based health care – a method for systematic review. Scand J Caring Sci. 2012;27(4):1027–1034. doi: 10.1111/scs.12003.

- 15. Munn Z, Jordan Z. The patient experience of high technology medical imaging: a systematic review of the qualitative evidence. Radiography. 2011;17:323–331. doi: 10.1016/j.radi.2011.06.004.

- 16. Goldet G, Howick J. Understanding GRADE: an introduction. J Evid Based Med. 2013;6:50–54. doi: 10.1111/jebm.12018.

- 17. Langendam MW, Akl EA, Dahm P, Glasziou P, Guyatt G, Schunemann HJ. Assessing and presenting summaries of evidence in Cochrane Reviews. Syst Rev. 2013;2:81. doi: 10.1186/2046-4053-2-81.

- 18. Rosenbaum SE, Glenton C, Oxman AD. Summary-of-findings tables in Cochrane reviews improved understanding and rapid retrieval of key information. J Clin Epidemiol. 2010;63:620–626. doi: 10.1016/j.jclinepi.2009.12.014.

- 19. Treweek S, Oxman AD, Alderson P, Bossuyt PM, Brandt L, Brozek J, Davoli M, Flottorp S, Harbour R, Hill S, Liberati A, Liira H, Schünemann HJ, Rosenbaum S, Thornton J, Vandvik PO, Alonso-Coello P, DECIDE Consortium. Developing and Evaluating Communication Strategies to Support Informed Decisions and Practice Based on Evidence (DECIDE): protocol and preliminary results. Implement Sci. 2013;8:6. doi: 10.1186/1748-5908-8-6.

- 20. Guba EG, Lincoln YS. Epistemological and methodological bases of naturalistic inquiry. Educ Commun Technol. 1982;30:233–252.

- 21. Tobin GA, Begley CM. Methodological rigour within a qualitative framework. J Adv Nurs. 2004;48:388–396. doi: 10.1111/j.1365-2648.2004.03207.x.

- 22. Krefting L. Rigor in qualitative research: the assessment of trustworthiness. Am J Occup Ther. 1991;45:214–222. doi: 10.5014/ajot.45.3.214.

- 23. Creswell JW. Qualitative Inquiry and Research Design: Choosing Among Five Approaches. 2nd ed. Thousand Oaks: Sage Publications, Inc; 2007.

- 24. Murphy F, Yielder J. Establishing rigour in qualitative radiography research. Radiography. 2010;16:62–67. doi: 10.1016/j.radi.2009.07.003.

- 25. Tong A, Flemming K, McInnes E, Oliver S, Craig J. Enhancing transparency in reporting the synthesis of qualitative research: ENTREQ. BMC Med Res Methodol. 2012;12:181. doi: 10.1186/1471-2288-12-181.

- 26. Finfgeld DL. Metasynthesis: The State of the Art—So Far. Qual Health Res. 2003;13:893–904. doi: 10.1177/1049732303253462.

- 27. Hannes K, Lockwood C, Pearson A. A comparative analysis of three online appraisal instruments' ability to assess validity in qualitative research. Qual Health Res. 2010;20:1736–1743. doi: 10.1177/1049732310378656.

- 28. Sandelowski M, Barroso J. Finding the findings in qualitative studies. J Nurs Scholarsh. 2002;34:213–219. doi: 10.1111/j.1547-5069.2002.00213.x.

- 29. Lewin S, Glenton C, Noyes J, Hendry M, Rashidian A. CerQual Approach: Assessing How Much Certainty to Place in Findings from Qualitative Evidence Syntheses. Quebec, Canada: 21st Cochrane Colloquium; 2013.

- 30. Glenton C, Colvin C, Carlsen B, Swartz A, Lewin S, Noyes J, Rashidian A. Barriers and facilitators to the implementation of lay health worker programmes to improve access to maternal and child health: qualitative evidence synthesis. Cochrane Database Syst Rev. 2013;2:CD010414. doi: 10.1002/14651858.CD010414.pub2.

- 31. Glenton C, Colvin CJ, Carlsen B, Swartz A, Lewin S, Noyes J, Rashidian A. Barriers and facilitators to the implementation of lay health worker programmes to improve access to maternal and child health: qualitative evidence synthesis. Cochrane Database Syst Rev. 2013;10:CD010414. doi: 10.1002/14651858.CD010414.pub2.

- 32. Glenton C, Lewin S, Carlsen B, Colvin C, Munthe-Kaas H, Noyes J, Rashidian A. Assessing the Certainty of Findings from Qualitative Evidence Syntheses: The CerQual Approach. Rome, Italy: Draft for discussion In GRADE Working Group Meeting; 2013.

Pre-publication history

- The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/14/108/prepub


Literature Reviews

- 5. Synthesize your findings


How to synthesize

Approaches to synthesis.


In the synthesis step of a literature review, researchers analyze and integrate information from selected sources to identify patterns and themes. This involves critically evaluating findings, recognizing commonalities, and constructing a cohesive narrative that contributes to the understanding of the research topic.

Here are some examples of how to approach synthesizing the literature:

💡 By themes or concepts

🕘 Historically or chronologically

📊 By methodology

These organizational approaches can also be used when writing your review. It can be beneficial to begin organizing your references by these approaches in your citation manager by using folders, groups, or collections.

Create a synthesis matrix

A synthesis matrix allows you to visually organize your literature.

Topic: ______________________________________________

Topic: Chemical exposure to workers in nail salons
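A synthesis matrix can also be kept as a small data structure rather than a hand-drawn table. The following is a minimal sketch, assuming one row per theme and one column per source; that layout, the source and theme labels, the notes, and the `build_matrix` and `to_text` helpers are illustrative assumptions rather than the guide's own template.

```python
# Placeholder sources, themes, and notes; replace with your own reading notes.
sources = ["Source A", "Source B"]
themes = ["Theme 1", "Theme 2"]
notes = {
    ("Source A", "Theme 1"): "note on A / theme 1",
    ("Source B", "Theme 2"): "note on B / theme 2",
}


def build_matrix(notes, sources, themes):
    """Return one row per theme: the theme label followed by one cell per source.

    A cell is an empty string when a source says nothing about that theme,
    which makes gaps in the literature easy to spot.
    """
    return [
        [theme] + [notes.get((source, theme), "") for source in sources]
        for theme in themes
    ]


def to_text(matrix, sources):
    """Render the matrix as pipe-separated lines with a header row."""
    header = ["Theme"] + sources
    return "\n".join(" | ".join(row) for row in [header] + matrix)


matrix = build_matrix(notes, sources, themes)
print(to_text(matrix, sources))
```

Reading across a row shows how different sources treat the same theme; reading down a column summarizes what a single source contributes.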


- Last Updated: Dec 5, 2024 9:30 AM

- URL: https://guides.library.duke.edu/litreviews


Remember, the data you synthesise should relate to your research question and protocol (plan). In the case of quantitative analysis, the data extracted and synthesised will relate to whatever method was used to generate the research question (e.g. PICO method), and whatever quality appraisals were undertaken in the analysis stage.

This is in contrast to a synthesis that uses research findings to make a statement about an evidence base. A 'systematic map' can both explain what has been studied and also indicate what has not been studied and where there are gaps in the research (gap maps). Systematic maps can be useful for comparing trends and differences across sets of studies.

Dec 12, 2024 · As an evidence synthesis summarizes existing research, there are a number of ways in which you can synthesize results from your included studies. If the studies you have included in your evidence synthesis are sufficiently similar, or in other words homogeneous, you can synthesize the data from these studies using a process called “meta ...
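For studies that are similar enough to pool, one common approach is fixed-effect inverse-variance weighting, in which each study's effect estimate is weighted by the inverse of its squared standard error. The sketch below is an illustration under that assumption only; the effect sizes and standard errors are made-up numbers, the function name is hypothetical, and a real review would normally use dedicated meta-analysis software and also assess heterogeneity.

```python
import math


def pooled_fixed_effect(effects, standard_errors):
    """Pool effect estimates with fixed-effect inverse-variance weights.

    Each study gets weight 1 / SE^2, so more precise studies count for more.
    Returns the pooled estimate and its standard error.
    """
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Made-up effect estimates and standard errors for two hypothetical studies.
effects = [0.2, 0.4]
standard_errors = [0.1, 0.1]

pooled, pooled_se = pooled_fixed_effect(effects, standard_errors)

# Approximate 95% confidence interval using the normal approximation.
ci_low = pooled - 1.96 * pooled_se
ci_high = pooled + 1.96 * pooled_se
print(round(pooled, 3), round(ci_low, 3), round(ci_high, 3))
```

Because both hypothetical studies have the same standard error, they receive equal weight and the pooled estimate is simply their average; with unequal standard errors the more precise study would pull the estimate toward its own value.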

Nov 25, 2024 · Synthesis involves pooling the extracted data from the included studies and summarizing the findings based on the overall strength of the evidence and consistency of observed effects. Depending on your review type and objectives, forms of synthesis may include one or more of the following:

May 17, 2024 · Data synthesis includes synthesising the findings of primary studies and when possible or appropriate some forms of statistical analysis of numerical data. Synthesis methods vary depending on the nature of the evidence (e.g., quantitative, qualitative, or mixed), the aim of the review and the study types and designs.

Increasingly it is accepted that decisions should not be based on the findings from single primary studies but rather informed by actionable messages derived from synthesised evidence based on systematic reviews. 3 4 5 Over the past decade there has been substantial public funding of synthesised evidence and guidance to support healthcare ...

Oct 11, 2017 · A meta-study is a specific research approach in which the theory, methods and findings of qualitative research are analysed and synthesised to develop new ways of thinking about a topic. A meta-synthesis uses interpretive methods to synthesise the findings from primary studies, which can often vary in important respects (such as populations or ...

The type of research column (i.e. qualitative) has been included to stress to users who are more familiar with quantitative research that this is coming from a different source. Additionally, there may be scope to apply this method to synthesised findings of other types of research, including text and opinion and discourse analysis.
