SpeechRecognition 3.12.0

pip install SpeechRecognition

Released: Dec 8, 2024

Library for performing speech recognition, with support for several engines and APIs, online and offline.


- License: BSD License (BSD)

- Author: Anthony Zhang (Uberi)

- Tags: speech, recognition, voice, sphinx, google, wit, bing, api, houndify, ibm, snowboy

- Requires: Python >=3.9

- Provides-Extra: dev , audio , pocketsphinx , whisper-local , openai , groq , assemblyai

Classifiers

- 5 - Production/Stable

- OSI Approved :: BSD License

- MacOS :: MacOS X

- Microsoft :: Windows

- POSIX :: Linux

- Python :: 3

- Python :: 3.9

- Python :: 3.10

- Python :: 3.11

- Python :: 3.12

- Multimedia :: Sound/Audio :: Speech

- Software Development :: Libraries :: Python Modules

Project description

UPDATE 2022-02-09: Hey everyone! This project started as a tech demo, but these days it needs more time than I have to keep up with all the PRs and issues. Therefore, I’d like to put out an open invite for collaborators: just reach out at me@anthonyz.ca if you’re interested!

Speech recognition engine/API support:

Quickstart: pip install SpeechRecognition . See the “Installing” section for more details.

To quickly try it out, run python -m speech_recognition after installing.

Project links:

Library Reference

The library reference documents every publicly accessible object in the library. This document is also included under reference/library-reference.rst .

See Notes on using PocketSphinx for information about installing languages, compiling PocketSphinx, and building language packs from online resources. This document is also included under reference/pocketsphinx.rst .

You have to install Vosk models to use Vosk. Here are the available models. Place them in the models folder of your project, like “your-project-folder/models/your-vosk-model”.

See the examples/ directory in the repository root for usage examples:
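A minimal sketch of the usual file-based workflow, assuming SpeechRecognition is installed and an internet connection is available (the Google Web Speech recognizer is online-only). The function name `transcribe_file` is hypothetical; the library is imported inside the function so that the module still loads when it is missing.

```python
def transcribe_file(path):
    """Transcribe an audio file with the Google Web Speech recognizer.

    Returns the transcript, or None if the speech was unintelligible.
    """
    # Imported lazily so this module can be loaded even without the library.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    # AudioFile accepts WAV, AIFF/AIFF-C and FLAC files.
    with sr.AudioFile(path) as source:
        audio = recognizer.record(source)  # read the entire file into AudioData
    try:
        return recognizer.recognize_google(audio)
    except sr.UnknownValueError:
        # The recognizer could not make sense of the audio.
        return None
```

For example, `transcribe_file("speech.wav")` returns the recognized text for a hypothetical file `speech.wav`, or None if nothing intelligible was found. Network or quota problems surface as `sr.RequestError`.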

First, make sure you have all the requirements listed in the “Requirements” section.

The easiest way to install this is using pip install SpeechRecognition .

Otherwise, download the source distribution from PyPI , and extract the archive.

In the folder, run python setup.py install .

Requirements

To use all of the functionality of the library, you should have:

The following requirements are optional, but can improve or extend functionality in some situations:

The following sections go over the details of each requirement.

The first software requirement is Python 3.9+ . This is required to use the library.

PyAudio (for microphone users)

PyAudio is required if and only if you want to use microphone input ( Microphone ). PyAudio version 0.2.11+ is required, as earlier versions have known memory management bugs when recording from microphones in certain situations.

If not installed, everything in the library will still work, except attempting to instantiate a Microphone object will raise an AttributeError .
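To detect this situation up front rather than when `Microphone()` fails, one option is to check whether the `pyaudio` module can be found, using only the standard library. The helper below is a hypothetical illustration, not part of SpeechRecognition; it only checks that PyAudio is installed, not that an input device is present.

```python
import importlib.util


def pyaudio_available():
    """Return True if PyAudio is installed, without actually importing it."""
    # find_spec() returns None when no module named "pyaudio" can be found.
    return importlib.util.find_spec("pyaudio") is not None
```

Code that accepts both live and file input could fall back to `sr.AudioFile` when this returns False.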

The installation instructions on the PyAudio website are quite good - for convenience, they are summarized below:

PyAudio wheel packages for common 64-bit Python versions on Windows and Linux are included for convenience, under the third-party/ directory in the repository root. To install, simply run pip install wheel followed by pip install ./third-party/WHEEL_FILENAME (replace pip with pip3 if using Python 3) in the repository root directory .

PocketSphinx-Python (for Sphinx users)

PocketSphinx-Python is required if and only if you want to use the Sphinx recognizer ( recognizer_instance.recognize_sphinx ).

PocketSphinx-Python wheel packages for 64-bit Python 3.4 and 3.5 on Windows are included for convenience, under the third-party/ directory. To install, simply run pip install wheel followed by pip install ./third-party/WHEEL_FILENAME (replace pip with pip3 if using Python 3) in the SpeechRecognition folder.

On Linux and other POSIX systems (such as OS X), run pip install SpeechRecognition[pocketsphinx] . Follow the instructions under “Building PocketSphinx-Python from source” in Notes on using PocketSphinx for installation instructions.

Note that the versions available in most package repositories are outdated and will not work with the bundled language data. Using the bundled wheel packages or building from source is recommended.
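Once PocketSphinx-Python is installed, recognition runs entirely offline. A minimal sketch, assuming `audio` is an `AudioData` object (for example from `Recognizer.record` or `Recognizer.listen`) and the bundled US English language data; the function name is hypothetical:

```python
def recognize_offline(audio, language="en-US"):
    """Recognize an AudioData object locally with CMU PocketSphinx.

    Returns the transcript, or None if nothing intelligible was heard.
    """
    # Imported lazily: requires SpeechRecognition[pocketsphinx].
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    try:
        # No network access is needed. Languages other than the bundled
        # US English data require installing extra language packs.
        return recognizer.recognize_sphinx(audio, language=language)
    except sr.UnknownValueError:
        return None
```

If PocketSphinx or the requested language data is missing, we expect the call to fail with `sr.RequestError`; check the library reference for the exact behavior of your version.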

Vosk (for Vosk users)

Vosk API is required if and only if you want to use Vosk recognizer ( recognizer_instance.recognize_vosk ).

You can install it with python3 -m pip install vosk .

You also have to install Vosk Models:

Here are the models available for download. Place them in the models folder of your project, like “your-project-folder/models/your-vosk-model”.
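If you follow the folder layout above, a small standard-library helper can locate the model directory before you load it. `find_vosk_model` is hypothetical: it simply picks the first subdirectory of `models/`, and how the model path is then handed to Vosk or to `recognize_vosk` may depend on your version of the library.

```python
from pathlib import Path


def find_vosk_model(project_dir="."):
    """Return the first model directory under <project_dir>/models, or None."""
    models_dir = Path(project_dir) / "models"
    if not models_dir.is_dir():
        return None
    # Sort so the choice is deterministic when several models are present.
    candidates = sorted(p for p in models_dir.iterdir() if p.is_dir())
    return candidates[0] if candidates else None
```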

Google Cloud Speech Library for Python (for Google Cloud Speech API users)

Google Cloud Speech library for Python is required if and only if you want to use the Google Cloud Speech API ( recognizer_instance.recognize_google_cloud ).

If not installed, everything in the library will still work, except calling recognizer_instance.recognize_google_cloud will raise a RequestError.

According to the official installation instructions , the recommended way to install this is using Pip : execute pip install google-cloud-speech (replace pip with pip3 if using Python 3).
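A sketch of calling the Cloud recognizer defensively, so that a missing `google-cloud-speech` installation is reported instead of crashing the program. It assumes `audio` is an `AudioData` object and that credentials are picked up from the environment (for example, Google Application Default Credentials); the credentials parameter accepted by `recognize_google_cloud` may differ between releases, so check the library reference for yours. The function name is hypothetical.

```python
def recognize_cloud(audio):
    """Recognize AudioData with Google Cloud Speech, or return None on failure."""
    # Imported lazily so this module loads even without SpeechRecognition.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    try:
        # No credentials argument: assumes they come from the environment.
        return recognizer.recognize_google_cloud(audio)
    except sr.UnknownValueError:
        return None  # the service returned no usable transcript
    except sr.RequestError as exc:
        # Raised, for example, when google-cloud-speech is not installed.
        print(f"Google Cloud Speech is unavailable: {exc}")
        return None
```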

FLAC (for some systems)

A FLAC encoder is required to encode the audio data to send to the API. If using Windows (x86 or x86-64), OS X (Intel Macs only, OS X 10.6 or higher), or Linux (x86 or x86-64), this is already bundled with this library - you do not need to install anything .

Otherwise, ensure that you have the flac command line tool, which is often available through the system package manager. For example, this would usually be sudo apt-get install flac on Debian-derivatives, or brew install flac on OS X with Homebrew.
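A quick, standard-library way to see whether a system `flac` binary is on your `PATH`, which matters only when the bundled encoder does not cover your platform. The helper and its messages are illustrative, not part of the library; the install commands are the ones suggested above.

```python
import platform
import shutil


def flac_hint():
    """Return a short, human-readable hint about the system FLAC encoder."""
    path = shutil.which("flac")  # None if no executable named flac is on PATH
    if path:
        return f"system flac found at {path}"
    if platform.system() == "Darwin":
        return "flac not on PATH; try: brew install flac"
    return "flac not on PATH; try your package manager, e.g. sudo apt-get install flac"
```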

Whisper (for Whisper users)

Whisper is required if and only if you want to use Whisper ( recognizer_instance.recognize_whisper ).

You can install it with python3 -m pip install SpeechRecognition[whisper-local] .
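A minimal sketch of local Whisper transcription, assuming `audio` is an `AudioData` object and that `recognize_whisper` accepts a `model` keyword naming the Whisper model size (our assumption; see the library reference). The first call may download the model weights. The function name is hypothetical.

```python
def recognize_local_whisper(audio, model="base"):
    """Transcribe AudioData on this machine with a local Whisper model."""
    # Requires: python3 -m pip install SpeechRecognition[whisper-local]
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    # Smaller models (e.g. "tiny", "base") are faster; larger ones are
    # usually more accurate but need more memory and time.
    return recognizer.recognize_whisper(audio, model=model)
```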

OpenAI Whisper API (for OpenAI Whisper API users)

The library openai is required if and only if you want to use the OpenAI Whisper API ( recognizer_instance.recognize_openai ).

You can install it with python3 -m pip install SpeechRecognition[openai] .

Please set the environment variable OPENAI_API_KEY before calling recognizer_instance.recognize_openai .

Groq Whisper API (for Groq Whisper API users)

The library groq is required if and only if you want to use the Groq Whisper API ( recognizer_instance.recognize_groq ).

You can install it with python3 -m pip install SpeechRecognition[groq] .

Please set the environment variable GROQ_API_KEY before calling recognizer_instance.recognize_groq .
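Both hosted Whisper recognizers read their key from the environment, so it can help to check for it before sending any audio. A sketch under these assumptions: `audio` is an `AudioData` object, and `missing_api_key` and `recognize_hosted_whisper` are hypothetical helpers built around the variable names given above.

```python
import os

# Environment variables named in the OpenAI and Groq sections above.
API_KEY_VARS = {"openai": "OPENAI_API_KEY", "groq": "GROQ_API_KEY"}


def missing_api_key(service, environ=None):
    """Return the name of the unset API-key variable for `service`, or None."""
    environ = os.environ if environ is None else environ
    var = API_KEY_VARS[service]
    return None if environ.get(var) else var


def recognize_hosted_whisper(audio, service="openai"):
    """Send AudioData to the OpenAI or Groq Whisper API via SpeechRecognition."""
    import speech_recognition as sr  # imported lazily

    missing = missing_api_key(service)
    if missing:
        raise RuntimeError(f"set {missing} before calling recognize_{service}")
    recognizer = sr.Recognizer()
    if service == "openai":
        return recognizer.recognize_openai(audio)
    return recognizer.recognize_groq(audio)
```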

Troubleshooting

The recognizer tries to recognize speech even when I’m not speaking, or after I’m done speaking.

Try increasing the recognizer_instance.energy_threshold property. This roughly sets how loud the audio must be before the recognizer treats it as speech and starts recognizing. Higher values make it less sensitive, which is useful if you are in a loud room.

This value depends entirely on your microphone or audio data. There is no one-size-fits-all value, but good values typically range from 50 to 4000.
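To get a feel for what the threshold is compared against, the sketch below computes the root-mean-square (RMS) amplitude of a chunk of signed 16-bit little-endian PCM audio and compares it with a threshold. Treating RMS as the "energy" is our assumption about how the recognizer measures loudness, 300 is only an illustrative default, and the function names are hypothetical.

```python
import array
import math
import sys


def rms_energy(pcm_bytes):
    """Root-mean-square amplitude of signed 16-bit little-endian PCM audio."""
    samples = array.array("h")  # signed 16-bit integers
    samples.frombytes(pcm_bytes)
    if sys.byteorder == "big":
        samples.byteswap()  # the input is little-endian
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def is_speech(pcm_bytes, energy_threshold=300):
    """True if a chunk is loud enough to count as speech under this threshold."""
    return rms_energy(pcm_bytes) > energy_threshold
```

Printing `rms_energy` for a few chunks recorded in your room (for example, `audio.get_raw_data(convert_width=2)` from an `AudioData` object) gives a starting point for choosing a value.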

Also, check your microphone volume settings. If it is too sensitive, the microphone may be picking up a lot of ambient noise. If it is too insensitive, the microphone may be rejecting speech as just noise.

The recognizer can’t recognize speech right after it starts listening for the first time.

The recognizer_instance.energy_threshold property is probably set to a value that is too high to start off with, and is then adjusted lower automatically by dynamic energy threshold adjustment. Until it reaches a good level, the energy threshold is so high that speech is just considered ambient noise.

The solution is to decrease this threshold, or call recognizer_instance.adjust_for_ambient_noise beforehand, which will set the threshold to a good value automatically.
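The following stand-alone model (not the library's implementation) illustrates why calibration helps: starting from a deliberately high threshold, an exponentially damped average pulls it toward a multiple of the measured ambient energy. The function name, the 0.15 damping factor per second, the 1.5 ratio, and the 50 ms buffer length are assumptions chosen for illustration.

```python
def calibrate_threshold(ambient_energies, threshold=4000.0,
                        seconds_per_buffer=0.05, damping=0.15, ratio=1.5):
    """Illustrative model of ambient-noise calibration.

    Each buffer's energy moves the threshold toward `ratio` times that
    energy, using an exponentially damped average.
    """
    # Scale the damping per buffer so the adaptation speed per second
    # does not depend on the buffer length.
    per_buffer = damping ** seconds_per_buffer
    for energy in ambient_energies:
        target = energy * ratio
        threshold = threshold * per_buffer + target * (1 - per_buffer)
    return threshold
```

With 20 buffers (one second) of constant ambient energy 100, the modeled threshold drops from 4000 to 150 + 3850 × 0.15 = 727.5. In real code, call `recognizer_instance.adjust_for_ambient_noise(source, duration=1)` once inside the `with Microphone() as source:` block, before `listen`.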

The recognizer doesn’t understand my particular language/dialect.

Try setting the recognition language to your language/dialect. To do this, see the documentation for recognizer_instance.recognize_sphinx , recognizer_instance.recognize_google , recognizer_instance.recognize_wit , recognizer_instance.recognize_bing , recognizer_instance.recognize_api , recognizer_instance.recognize_houndify , and recognizer_instance.recognize_ibm .

For example, if your language/dialect is British English, it is better to use "en-GB" as the language rather than "en-US" .
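A minimal sketch, assuming `audio` is an `AudioData` object; the language tag is passed through the `language` parameter, whose default for `recognize_google` is "en-US". The function name is hypothetical.

```python
def recognize_with_language(audio, language="en-GB"):
    """Recognize AudioData with the Google Web Speech API for a given locale."""
    import speech_recognition as sr  # imported lazily

    recognizer = sr.Recognizer()
    # "en-GB" requests British English instead of the default "en-US".
    return recognizer.recognize_google(audio, language=language)
```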

The recognizer hangs on recognizer_instance.listen ; specifically, when it’s calling Microphone.MicrophoneStream.read .

This usually happens when you’re using a Raspberry Pi board, which doesn’t have audio input capabilities by itself. This causes the default microphone used by PyAudio to simply block when we try to read it. If you happen to be using a Raspberry Pi, you’ll need a USB sound card (or USB microphone).

Once you do this, change all instances of Microphone() to Microphone(device_index=MICROPHONE_INDEX) , where MICROPHONE_INDEX is the hardware-specific index of the microphone.

To figure out what the value of MICROPHONE_INDEX should be, run the following code:
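A sketch of such code, using the static method `Microphone.list_microphone_names()`, whose list positions correspond to device indices. The helper names `list_microphones` and `find_device_index` are hypothetical; the second one picks a device by a substring of its name.

```python
def list_microphones():
    """Print every audio device PyAudio reports, with its device_index."""
    import speech_recognition as sr  # Microphone support requires PyAudio

    names = sr.Microphone.list_microphone_names()
    for index, name in enumerate(names):
        print(f"{index}: {name}")
    return names


def find_device_index(names, keyword):
    """Return the index of the first device whose name contains `keyword`."""
    for index, name in enumerate(names):
        if keyword.lower() in name.lower():
            return index
    return None
```

For example, `sr.Microphone(device_index=find_device_index(list_microphones(), "Snowball"))`; if the lookup returns None, `Microphone` falls back to the default device.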

This will print out something like the following:

Now, to use the Snowball microphone, you would change Microphone() to Microphone(device_index=3) .

Calling Microphone() gives the error IOError: No Default Input Device Available .

As the error says, the program doesn’t know which microphone to use.

To proceed, either use Microphone(device_index=MICROPHONE_INDEX, ...) instead of Microphone(...) , or set a default microphone in your OS. You can obtain possible values of MICROPHONE_INDEX using the code in the troubleshooting entry right above this one.

The program doesn’t run when compiled with PyInstaller .

As of PyInstaller version 3.0, SpeechRecognition is supported out of the box. If you’re getting weird issues when compiling your program using PyInstaller, simply update PyInstaller.

You can easily do this by running pip install --upgrade pyinstaller .

On Ubuntu/Debian, I get annoying output in the terminal saying things like “bt_audio_service_open: […] Connection refused” and various others.

The “bt_audio_service_open” error means that you have a Bluetooth audio device, but since it is not currently connected, we can’t actually use it. If you’re not using a Bluetooth microphone, this can be safely ignored. If you are, and audio isn’t working, double-check that your microphone is actually connected. There does not seem to be a simple way to disable these messages.

For errors of the form “ALSA lib […] Unknown PCM”, see this StackOverflow answer . Basically, to get rid of an error of the form “Unknown PCM cards.pcm.rear”, simply comment out pcm.rear cards.pcm.rear in /usr/share/alsa/alsa.conf , ~/.asoundrc , and /etc/asound.conf .

For “jack server is not running or cannot be started” or “connect(2) call to /dev/shm/jack-1000/default/jack_0 failed (err=No such file or directory)” or “attempt to connect to server failed”, these are caused by ALSA trying to connect to JACK, and can be safely ignored. I’m not aware of any simple way to turn those messages off at this time, besides entirely disabling printing while starting the microphone .
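One way to disable printing while starting the microphone is sketched below. ALSA and JACK print from C code straight to file descriptor 2, so replacing `sys.stderr` is not enough; this context manager temporarily points descriptor 2 at `os.devnull`. It is a general POSIX technique rather than part of SpeechRecognition, the name is hypothetical, and it also hides any genuine error messages printed inside the block.

```python
import contextlib
import os
import sys


@contextlib.contextmanager
def suppress_native_stderr():
    """Temporarily redirect file descriptor 2 (stderr) to os.devnull."""
    sys.stderr.flush()  # write out anything Python has already buffered
    saved_fd = os.dup(2)  # keep a copy of the real stderr
    devnull_fd = os.open(os.devnull, os.O_WRONLY)
    try:
        os.dup2(devnull_fd, 2)
        yield
    finally:
        os.dup2(saved_fd, 2)  # restore the original stderr
        os.close(devnull_fd)
        os.close(saved_fd)
```

Wrap the code that creates and opens the microphone, for example `with suppress_native_stderr(): mic = sr.Microphone()`.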

On OS X, I get a ChildProcessError saying that it couldn’t find the system FLAC converter, even though it’s installed.

Installing FLAC for OS X directly from the source code will not work, since it doesn’t correctly add the executables to the search path.

Installing FLAC using Homebrew ensures that the search path is correctly updated. First, ensure you have Homebrew, then run brew install flac to install the necessary files.

To hack on this library, first make sure you have all the requirements listed in the “Requirements” section.

To install/reinstall the library locally, run python -m pip install -e .[dev] in the project root directory .

Before a release, the version number is bumped in README.rst and speech_recognition/__init__.py . Version tags are then created using git config gpg.program gpg2 && git config user.signingkey DB45F6C431DE7C2DCD99FF7904882258A4063489 && git tag -s VERSION_GOES_HERE -m "Version VERSION_GOES_HERE" .

Releases are done by running make-release.sh VERSION_GOES_HERE to build the Python source packages, sign them, and upload them to PyPI.

To run all the tests:

To run static analysis:

To ensure RST is well-formed:

Testing is also done automatically by GitHub Actions, upon every push.

FLAC Executables

The included flac-win32 executable is the official FLAC 1.3.2 32-bit Windows binary .

The included flac-linux-x86 and flac-linux-x86_64 executables are built from the FLAC 1.3.2 source code with Manylinux to ensure that it’s compatible with a wide variety of distributions.

The built FLAC executables should be bit-for-bit reproducible. To rebuild them, run the following inside the project directory on a Debian-like system:

The included flac-mac executable is extracted from xACT 2.39 , which is a frontend for FLAC 1.3.2 that conveniently includes binaries for all of its encoders. Specifically, it is a copy of xACT 2.39/xACT.app/Contents/Resources/flac in xACT2.39.zip .

Please report bugs and suggestions at the issue tracker !

How to cite this library (APA style):

Zhang, A. (2017). Speech Recognition (Version 3.11) [Software]. Available from https://github.com/Uberi/speech_recognition#readme .

How to cite this library (Chicago style):

Zhang, Anthony. 2017. Speech Recognition (version 3.11).

Also check out the Python Baidu Yuyin API , which is based on an older version of this project, and adds support for Baidu Yuyin . Note that Baidu Yuyin is only available inside China.

Copyright 2014-2017 Anthony Zhang (Uberi) . The source code for this library is available online at GitHub .

SpeechRecognition is made available under the 3-clause BSD license. See LICENSE.txt in the project’s root directory for more information.

For convenience, all the official distributions of SpeechRecognition already include a copy of the necessary copyright notices and licenses. In your project, you can simply say that licensing information for SpeechRecognition can be found within the SpeechRecognition README, and make sure SpeechRecognition is visible to users if they wish to see it .

SpeechRecognition distributes source code, binaries, and language files from CMU Sphinx . These files are BSD-licensed and redistributable as long as copyright notices are correctly retained. See speech_recognition/pocketsphinx-data/*/LICENSE*.txt and third-party/LICENSE-Sphinx.txt for license details for individual parts.

SpeechRecognition distributes source code and binaries from PyAudio . These files are MIT-licensed and redistributable as long as copyright notices are correctly retained. See third-party/LICENSE-PyAudio.txt for license details.

SpeechRecognition distributes binaries from FLAC - speech_recognition/flac-win32.exe , speech_recognition/flac-linux-x86 , and speech_recognition/flac-mac . These files are GPLv2-licensed and redistributable, as long as the terms of the GPL are satisfied. The FLAC binaries are an aggregate of separate programs , so these GPL restrictions do not apply to the library or your programs that use the library, only to FLAC itself. See LICENSE-FLAC.txt for license details.

Project details

Release history

Dec 8, 2024

Oct 20, 2024

May 5, 2024

Mar 30, 2024

Mar 28, 2024

Dec 6, 2023

Mar 13, 2023

Dec 4, 2022

Dec 5, 2017

Jun 27, 2017

Apr 13, 2017

Mar 11, 2017

Jan 7, 2017

Nov 21, 2016

May 22, 2016

May 11, 2016

May 10, 2016

Apr 9, 2016

Apr 4, 2016

Apr 3, 2016

Mar 5, 2016

Mar 4, 2016

Feb 26, 2016

Feb 20, 2016

Feb 19, 2016

Feb 4, 2016

Nov 5, 2015

Nov 2, 2015

Sep 2, 2015

Sep 1, 2015

Aug 30, 2015

Aug 24, 2015

Jul 26, 2015

Jul 12, 2015

Jul 3, 2015

May 20, 2015

Apr 24, 2015

Apr 14, 2015

Apr 7, 2015

Apr 5, 2015

Apr 4, 2015

Mar 31, 2015

Dec 10, 2014

Nov 17, 2014

Sep 11, 2014

Sep 6, 2014

Aug 25, 2014

Jul 6, 2014

Jun 10, 2014

Jun 9, 2014

May 29, 2014

Apr 23, 2014

Download files


Source Distribution

Uploaded Dec 8, 2024 Source

Built Distribution

Uploaded Dec 8, 2024 Python 3

File details

Details for the file speechrecognition-3.12.0.tar.gz .

File metadata

- Download URL: speechrecognition-3.12.0.tar.gz

- Upload date: Dec 8, 2024

- Size: 32.9 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.0.1 CPython/3.12.1


Details for the file SpeechRecognition-3.12.0-py3-none-any.whl .

- Download URL: SpeechRecognition-3.12.0-py3-none-any.whl

- Size: 32.8 MB

- Tags: Python 3


Python Client for Cloud Speech (version 2.29.0, latest)

Cloud Speech : enables easy integration of Google speech recognition technologies into developer applications. Send audio and receive a text transcription from the Speech-to-Text API service.


Quick Start

In order to use this library, you first need to go through the following steps:

Select or create a Cloud Platform project.

Enable billing for your project.

Enable the Cloud Speech API.

Set up authentication.

Installation

Install this library in a virtual environment using venv . venv is a tool that creates isolated Python environments. These isolated environments can have separate versions of Python packages, which allows you to isolate one project’s dependencies from the dependencies of other projects.

With venv , it’s possible to install this library without needing system install permissions, and without clashing with the installed system dependencies.

Code samples and snippets

Code samples and snippets live in the samples/ folder.
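A minimal sketch of synchronous recognition with this client library, assuming the v1 `speech` module, a project with the API enabled and authentication set up, and a short audio file in Cloud Storage (synchronous recognition is intended for audio of about a minute or less). `transcribe_gcs` and `best_transcripts` are hypothetical names.

```python
def transcribe_gcs(uri, language_code="en-US"):
    """Synchronously transcribe a short audio file stored in Cloud Storage."""
    # Requires `pip install google-cloud-speech` and configured authentication.
    from google.cloud import speech

    client = speech.SpeechClient()
    # Encoding and sample rate are omitted, assuming a FLAC or WAV file
    # whose header lets the service infer them.
    config = speech.RecognitionConfig(language_code=language_code)
    audio = speech.RecognitionAudio(uri=uri)
    response = client.recognize(config=config, audio=audio)
    return best_transcripts(response)


def best_transcripts(response):
    """Return the transcript of the top alternative for each result."""
    return [
        result.alternatives[0].transcript
        for result in response.results
        if result.alternatives
    ]
```

For example, `transcribe_gcs("gs://your-bucket/your-file.flac")`, where the bucket and file are placeholders.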

Supported Python Versions

Our client libraries are compatible with all current active and maintenance versions of Python.

Python >= 3.7

Unsupported Python Versions

Python <= 3.6

If you are using an end-of-life version of Python, we recommend that you update as soon as possible to an actively supported version.

Read the Client Library Documentation for Cloud Speech to see other available methods on the client.

Read the Cloud Speech Product documentation to learn more about the product and see How-to Guides.

View this README to see the full list of Cloud APIs that we cover.


Last updated 2024-12-19 UTC.


Speech Recognition Module Python

Speech recognition, a field at the intersection of linguistics, computer science, and electrical engineering, aims at designing systems capable of recognizing and translating spoken language into text. Python, known for its simplicity and robust libraries, offers several modules to tackle speech recognition tasks effectively. In this article, we'll explore the essence of speech recognition in Python, including an overview of its key libraries, how they can be implemented, and their practical applications.

Key Python Libraries for Speech Recognition

- SpeechRecognition : One of the most popular Python libraries for recognizing speech. It provides support for several engines and APIs, such as Google Web Speech API, Microsoft Bing Voice Recognition, and IBM Speech to Text. It's known for its ease of use and flexibility, making it a great starting point for beginners and experienced developers alike.

- PyAudio : Essential for audio input and output in Python, PyAudio provides Python bindings for PortAudio, the cross-platform audio I/O library. It's often used alongside SpeechRecognition to capture microphone input for real-time speech recognition.

- DeepSpeech : Developed by Mozilla, DeepSpeech is an open-source, deep learning-based speech recognition system based on Baidu's Deep Speech research. It's suitable for developers looking to implement more sophisticated speech recognition features with the power of deep learning.

Implementing Speech Recognition with Python

A basic implementation using the SpeechRecognition library involves several steps:

- Audio Capture : Capturing audio from the microphone using PyAudio.

- Audio Processing : Converting the audio signal into data that the SpeechRecognition library can work with.

- Recognition : Calling the recognize_google() method (or another available recognition method) on the SpeechRecognition library to convert the audio data into text.

Here's a simple example:
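A minimal sketch of those three steps, assuming SpeechRecognition and PyAudio are installed, a default microphone is available, and the machine is online; the function name and the 5-second timeout are illustrative choices:

```python
def listen_and_transcribe(timeout=5):
    """Capture one phrase from the default microphone and return its text."""
    # Imported lazily; Microphone additionally requires PyAudio.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    # 1. Audio capture: open the default input device via PyAudio.
    with sr.Microphone() as source:
        # Calibrate the energy threshold against one second of background noise.
        recognizer.adjust_for_ambient_noise(source, duration=1)
        # 2. Audio processing: listen() returns an AudioData object.
        audio = recognizer.listen(source, timeout=timeout)
    # 3. Recognition: send the audio to the Google Web Speech API.
    try:
        return recognizer.recognize_google(audio)
    except sr.UnknownValueError:
        return None  # the speech was unintelligible
```

If nobody starts speaking within `timeout` seconds, `listen` raises `sr.WaitTimeoutError`; network problems raise `sr.RequestError`.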

Practical Applications

Speech recognition has a wide range of applications:

- Voice-activated Assistants: Creating personal assistants like Siri or Alexa.

- Accessibility Tools: Helping individuals with disabilities interact with technology.

- Home Automation: Enabling voice control over smart home devices.

- Transcription Services: Automatically transcribing meetings, lectures, and interviews.

Challenges and Considerations

While implementing speech recognition, developers might face challenges such as background noise interference, accents, and dialects. It's crucial to consider these factors and test the application under various conditions. Furthermore, privacy and ethical considerations must be addressed, especially when handling sensitive audio data.

Speech recognition in Python offers a powerful way to build applications that can interact with users in natural language. With the help of libraries like SpeechRecognition, PyAudio, and DeepSpeech, developers can create a range of applications from simple voice commands to complex conversational interfaces. Despite the challenges, the potential for innovative applications is vast, making speech recognition an exciting area of development in Python.

FAQ on Speech Recognition Module in Python

What is the Speech Recognition module in Python?

The Speech Recognition module, often referred to as SpeechRecognition, is a library that allows Python developers to convert spoken language into text by utilizing various speech recognition engines and APIs. It supports multiple services like Google Web Speech API, Microsoft Bing Voice Recognition, IBM Speech to Text, and others.

How can I install the Speech Recognition module?

You can install the Speech Recognition module by running the following command in your terminal or command prompt: pip install SpeechRecognition. For capturing audio from the microphone, you might also need to install PyAudio. On most systems, this can be done via pip: pip install PyAudio.

Do I need an internet connection to use the Speech Recognition module?

Yes, for most of the supported APIs like Google Web Speech, Microsoft Bing Voice Recognition, and IBM Speech to Text, an active internet connection is required. However, if you use the CMU Sphinx engine, you do not need an internet connection as it operates offline.


Using the Speech-to-Text API with Python

1. Overview

The Speech-to-Text API enables developers to convert audio to text in over 125 languages and variants, by applying powerful neural network models in an easy to use API.

In this tutorial, you will focus on using the Speech-to-Text API with Python.

What you'll learn

- How to set up your environment

- How to transcribe audio files in English

- How to transcribe audio files with word timestamps

- How to transcribe audio files in different languages
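The last two objectives both come down to the request configuration. A sketch, assuming the v1 Python client: `language_code` selects the language, `enable_word_time_offsets` requests word timings, and each returned word's `start_time` and `end_time` are assumed to behave like `datetime.timedelta` objects. Both function names are hypothetical.

```python
def word_timing_config(language_code="en-US"):
    """Build a RecognitionConfig that requests per-word start and end times."""
    from google.cloud import speech  # pip install google-cloud-speech

    return speech.RecognitionConfig(
        language_code=language_code,  # e.g. "fr-FR" for French audio
        enable_word_time_offsets=True,
    )


def format_words(words):
    """Format WordInfo-like objects as 'start-end word' strings."""
    return [
        f"{w.start_time.total_seconds():.2f}s-{w.end_time.total_seconds():.2f}s {w.word}"
        for w in words
    ]
```

The words would come from `result.alternatives[0].words` for each result in the recognition response.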

What you'll need

- A Google Cloud project

- A browser, such as Chrome or Firefox

- Familiarity using Python

2. Setup and requirements

Self-paced environment setup

- Sign in to the Google Cloud Console and create a new project or reuse an existing one. If you don't already have a Gmail or Google Workspace account, you must create one.

- The Project name is the display name for this project's participants. It is a character string not used by Google APIs. You can always update it.

- The Project ID is unique across all Google Cloud projects and is immutable (cannot be changed after it has been set). The Cloud Console auto-generates a unique string; usually you don't care what it is. In most codelabs, you'll need to reference your Project ID (typically identified as PROJECT_ID ). If you don't like the generated ID, you might generate another random one. Alternatively, you can try your own, and see if it's available. It can't be changed after this step and remains for the duration of the project.

- For your information, there is a third value, a Project Number , which some APIs use. Learn more about all three of these values in the documentation .

- Next, you'll need to enable billing in the Cloud Console to use Cloud resources/APIs. Running through this codelab won't cost much, if anything at all. To shut down resources to avoid incurring billing beyond this tutorial, you can delete the resources you created or delete the project. New Google Cloud users are eligible for the $300 USD Free Trial program.

Start Cloud Shell

While Google Cloud can be operated remotely from your laptop, in this codelab you will be using Cloud Shell, a command line environment running in the Cloud.

Activate Cloud Shell

If this is your first time starting Cloud Shell, you're presented with an intermediate screen describing what it is. If you were presented with an intermediate screen, click Continue.

It should only take a few moments to provision and connect to Cloud Shell.

This virtual machine is loaded with all the development tools needed. It offers a persistent 5 GB home directory and runs in Google Cloud, greatly enhancing network performance and authentication. Much, if not all, of your work in this codelab can be done with a browser.

Once connected to Cloud Shell, you should see that you are authenticated and that the project is set to your project ID.

- Run the following command in Cloud Shell to confirm that you are authenticated: `gcloud auth list`

- Run the following command in Cloud Shell to confirm that the gcloud command knows about your project: `gcloud config list project`

If it is not, you can set it with this command: `gcloud config set project <PROJECT_ID>`

3. Environment setup

Before you can begin using the Speech-to-Text API, run the following command in Cloud Shell to enable the API: `gcloud services enable speech.googleapis.com`

Once the operation finishes successfully, you can use the Speech-to-Text API!

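Navigate to your home directory: `cd ~`

Create a Python virtual environment to isolate the dependencies: `python3 -m venv venv-speech`

Activate the virtual environment: `source venv-speech/bin/activate`

Install IPython and the Speech-to-Text API client library: `pip install ipython google-cloud-speech`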

Now, you're ready to use the Speech-to-Text API client library!

In the next steps, you'll use an interactive Python interpreter called IPython , which you installed in the previous step. Start a session by running ipython in Cloud Shell:

You're ready to make your first request...

4. Transcribe audio files

In this section, you will transcribe an English audio file.

Copy the following code into your IPython session:

Take a moment to study the code and see how it uses the `recognize` client library method to transcribe an audio file. The `config` parameter indicates how to process the request, and the `audio` parameter specifies the audio data to be recognized.
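A minimal sketch of such a request, assuming the google-cloud-speech client library installed above and credentials available in Cloud Shell. The helper names `format_result` and `transcribe_uri` are hypothetical, and the Cloud Storage URI is whatever audio you want to transcribe.

```python
def format_result(result) -> str:
    """Summarize one recognition result: its best transcript and that transcript's confidence."""
    best = result.alternatives[0]  # alternatives are ordered, most likely first
    return f"transcript: {best.transcript} (confidence: {best.confidence:.0%})"


def transcribe_uri(uri: str, language_code: str = "en") -> list[str]:
    """Send a synchronous recognize request for audio stored in Cloud Storage."""
    # Imported here so format_result can be used without the client library installed
    from google.cloud import speech  # pip install google-cloud-speech

    client = speech.SpeechClient()  # uses Application Default Credentials (available in Cloud Shell)
    config = speech.RecognitionConfig(language_code=language_code)  # how to process the audio
    audio = speech.RecognitionAudio(uri=uri)  # where the audio data lives
    response = client.recognize(config=config, audio=audio)
    return [format_result(result) for result in response.results]
```

For example, `transcribe_uri("gs://your-bucket/your-audio.flac")` (a placeholder path) would return one summary line per recognized result. Keeping the formatting separate from the API call means it can be checked without calling the service.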

Send a request:

You should see the following output:

Update the configuration to enable automatic punctuation and send a new request:

In this step, you were able to transcribe an audio file in English, using different parameters, and print out the result. You can read more about transcribing audio files.

5. Get word timestamps

Speech-to-Text can detect time offsets (timestamps) for the transcribed audio. Time offsets show the beginning and end of each spoken word in the supplied audio. A time offset value represents the amount of time that has elapsed from the beginning of the audio, in increments of 100ms.
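As a worked example of the 100 ms granularity, the sketch below converts an offset into whole 100 ms steps and into seconds. It assumes offsets are handled as `datetime.timedelta` values (an assumption about how the Python client exposes them); `to_steps` and `format_offset` are hypothetical helpers.

```python
from datetime import timedelta

STEP = timedelta(milliseconds=100)  # offset granularity stated above


def to_steps(offset: timedelta) -> int:
    """Whole 100 ms steps elapsed between the start of the audio and `offset`."""
    return offset // STEP  # timedelta // timedelta yields an int


def format_offset(offset: timedelta) -> str:
    """Render an offset as seconds with one decimal place, e.g. '1.3s'."""
    return f"{offset.total_seconds():.1f}s"
```

For instance, a word that starts 1.3 seconds into the audio is 13 steps (1,300 ms) from the beginning.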

To transcribe an audio file with word timestamps, update your code by copying the following into your IPython session:

Take a moment to study the code and see how it transcribes an audio file with word timestamps. The `enable_word_time_offsets` parameter tells the API to return the time offsets for each word (see the doc for more details).
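A minimal sketch of the timestamp request, assuming google-cloud-speech and that each word's `start_time` and `end_time` behave like `datetime.timedelta` objects. The function names are hypothetical.

```python
def word_timings(result) -> list[tuple[str, float, float]]:
    """(word, start_seconds, end_seconds) for each word of the best alternative."""
    best = result.alternatives[0]
    return [
        (w.word, w.start_time.total_seconds(), w.end_time.total_seconds())
        for w in best.words  # populated only when enable_word_time_offsets=True
    ]


def transcribe_with_timestamps(uri: str, language_code: str = "en"):
    """Recognize audio in Cloud Storage and return per-word timings for each result."""
    from google.cloud import speech  # pip install google-cloud-speech

    client = speech.SpeechClient()
    config = speech.RecognitionConfig(
        language_code=language_code,
        enable_automatic_punctuation=True,
        enable_word_time_offsets=True,  # ask the API for per-word start/end offsets
    )
    audio = speech.RecognitionAudio(uri=uri)
    response = client.recognize(config=config, audio=audio)
    return [word_timings(result) for result in response.results]
```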

In this step, you were able to transcribe an audio file in English with word timestamps and print the result. Read more about getting word timestamps.

6. Transcribe different languages

The Speech-to-Text API recognizes more than 125 languages and variants! You can find a list of supported languages here.

In this section, you will transcribe a French audio file.

To transcribe the French audio file, update your code by copying the following into your IPython session:
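Switching languages should only require a different `language_code` in the recognition config. The sketch below assumes the same client library as before; `recognition_config_kwargs` and `transcribe_in_language` are hypothetical names, and the URI of the French audio is up to you.

```python
def recognition_config_kwargs(language_code: str, punctuation: bool = True) -> dict:
    """Keyword arguments for speech.RecognitionConfig; only language_code changes per language."""
    return {
        "language_code": language_code,  # BCP-47 tag, e.g. "en", "fr", "fr-FR"
        "enable_automatic_punctuation": punctuation,
    }


def transcribe_in_language(uri: str, language_code: str) -> list[str]:
    """Return the most likely transcript of each result for audio in the given language."""
    from google.cloud import speech  # pip install google-cloud-speech

    client = speech.SpeechClient()
    config = speech.RecognitionConfig(**recognition_config_kwargs(language_code))
    audio = speech.RecognitionAudio(uri=uri)
    response = client.recognize(config=config, audio=audio)
    return [result.alternatives[0].transcript for result in response.results]
```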

In this step, you were able to transcribe a French audio file and print the result. You can read more about the supported languages.

7. Congratulations!

You learned how to use the Speech-to-Text API using Python to perform different kinds of transcription on audio files!

To clean up your development environment, from Cloud Shell:

- If you're still in your IPython session, go back to the shell: exit

- Stop using the Python virtual environment: deactivate

- Delete your virtual environment folder: cd ~ ; rm -rf ./venv-speech

To delete your Google Cloud project, from Cloud Shell:

- Retrieve your current project ID: PROJECT_ID=$(gcloud config get-value core/project)

- Make sure this is the project you want to delete: echo $PROJECT_ID

- Delete the project: gcloud projects delete $PROJECT_ID

- Test the demo in your browser: https://cloud.google.com/speech-to-text

- Speech-to-Text documentation: https://cloud.google.com/speech-to-text/docs

- Python on Google Cloud: https://cloud.google.com/python

- Cloud Client Libraries for Python: https://github.com/googleapis/google-cloud-python

This work is licensed under a Creative Commons Attribution 2.0 Generic License.

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License , and code samples are licensed under the Apache 2.0 License . For details, see the Google Developers Site Policies . Java is a registered trademark of Oracle and/or its affiliates.

Creating a Voice Assistant with Python and Google Speech Recognition: A Step-by-Step Guide

- Step 1: Setting Up Your Environment

- Step 2: Understanding the Basics of Speech Recognition

- Step 3: Adding Text-to-Speech Capabilities

- Step 4: Combining Speech Recognition and Text-to-Speech

- Step 5: Handling Offline Recognition (Optional)

- Step 6: Putting It All Together

- Flowchart for the Voice Assistant

Creating a voice assistant is a fascinating project that combines natural language processing, machine learning, and a bit of magic to make your computer understand and respond to your voice commands. In this article, we’ll dive into the world of speech recognition using Python and Google’s powerful Speech Recognition API. Buckle up, because we’re about to embark on a journey to create your very own voice assistant!

Step 1: Setting Up Your Environment

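Before we start coding, we need to set up our environment. You’ll need Python 3 installed on your machine, along with the libraries used in the steps below: SpeechRecognition, PyAudio (for microphone access), pyttsx3 and, optionally, Vosk. You can install them with pip: pip install SpeechRecognition PyAudio pyttsx3 vosk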

If you’re using a virtual environment, make sure to activate it first. This will help keep your dependencies organized and avoid any potential conflicts with other projects.
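A small sketch for verifying the environment, assuming the later steps rely on SpeechRecognition, PyAudio, pyttsx3 and, optionally, Vosk. It only checks that each module can be found; the dictionaries and function names are hypothetical.

```python
import importlib.util

# import name -> pip package name (assumed set of packages for this guide)
REQUIRED = {
    "speech_recognition": "SpeechRecognition",
    "pyaudio": "PyAudio",
    "pyttsx3": "pyttsx3",
}
OPTIONAL = {"vosk": "vosk"}  # only needed for offline recognition


def missing_packages(modules: dict) -> list[str]:
    """pip names of the modules that cannot be found in the active environment."""
    return [pip_name for module, pip_name in modules.items()
            if importlib.util.find_spec(module) is None]


def check_environment() -> None:
    """Print install hints for anything that is missing."""
    missing = missing_packages(REQUIRED)
    if missing:
        print("Install with: pip install " + " ".join(missing))
    else:
        print("All required packages are available.")
    optional = missing_packages(OPTIONAL)
    if optional:
        print("Optional (offline recognition): pip install " + " ".join(optional))
```

Run `check_environment()` inside your activated virtual environment.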

Step 2: Understanding the Basics of Speech Recognition

Speech recognition is the process of converting spoken words into text. Google’s Speech Recognition API is one of the most accurate and widely used APIs for this purpose. Here’s a simple example to get you started:

This code snippet initializes a Recognizer object, listens to the microphone, and then uses Google’s API to convert the spoken words into text.
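A minimal sketch of a snippet like the one described, using the SpeechRecognition package's `Recognizer`, `Microphone` and `recognize_google()` calls. The function name `listen_once`, the 0.5-second noise calibration and returning `None` for unintelligible speech are assumptions. The import sits inside the function so the module can be loaded on machines without PyAudio.

```python
def listen_once(device_index=None, language="en-US"):
    """Capture one phrase from a microphone and return Google's transcript, or None."""
    import speech_recognition as sr  # pip install SpeechRecognition PyAudio

    recognizer = sr.Recognizer()
    with sr.Microphone(device_index=device_index) as source:
        # Sample background noise briefly so the energy threshold fits the room
        recognizer.adjust_for_ambient_noise(source, duration=0.5)
        print("Say something...")
        audio = recognizer.listen(source)
    try:
        return recognizer.recognize_google(audio, language=language)
    except sr.UnknownValueError:  # the speech could not be understood
        return None
    except sr.RequestError as exc:  # e.g. no network connection
        raise RuntimeError(f"Google Speech Recognition request failed: {exc}") from exc
```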

Step 3: Adding Text-to-Speech Capabilities

To make our voice assistant more interactive, we need to add text-to-speech capabilities. The pyttsx3 library is perfect for this job. Here’s how you can integrate it:
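A minimal sketch of that integration, assuming pyttsx3's `init()`, `setProperty()`, `say()` and `runAndWait()` calls. `make_speaker` is a hypothetical helper that wraps an engine in a `speak(text)` function; the `engine` parameter exists only so a different engine object can be passed in.

```python
def make_speaker(rate=150, volume=0.9, engine=None):
    """Return a speak(text) function backed by a pyttsx3 engine."""
    if engine is None:
        import pyttsx3  # pip install pyttsx3
        engine = pyttsx3.init()
    engine.setProperty("rate", rate)      # speaking rate in words per minute
    engine.setProperty("volume", volume)  # 0.0 (silent) to 1.0 (full)

    def speak(text: str) -> None:
        engine.say(text)      # queue the utterance
        engine.runAndWait()   # block until the queue has been spoken

    return speak
```

Usage: `speak = make_speaker()` followed by `speak("Hello, world!")`.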

Step 4: Combining Speech Recognition and Text-to-Speech

Now that we have both speech recognition and text-to-speech working, let’s combine them to create a simple voice assistant. Here’s a more comprehensive example:
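One way to combine the two pieces is a listen, respond, speak loop. In this sketch the commands ("hello", "time", "stop") are illustrative placeholders, and `run_assistant` takes plain functions for listening and speaking rather than calling SpeechRecognition and pyttsx3 directly, so the loop logic stays independent of the audio libraries.

```python
from datetime import datetime


def respond(command: str, now=None) -> str:
    """Map a recognized phrase to a reply (the commands are illustrative placeholders)."""
    command = command.lower()
    if "hello" in command:
        return "Hello! How can I help you?"
    if "time" in command:
        now = now or datetime.now()
        return f"It is {now:%H:%M}."
    if command.strip() in {"stop", "exit", "goodbye"}:
        return "Goodbye!"
    return f"You said: {command}"


def run_assistant(listen, speak) -> None:
    """Loop: listen -> respond -> speak, until the user says stop/exit/goodbye."""
    while True:
        heard = listen()
        if heard is None:  # nothing intelligible was captured
            speak("Sorry, I did not catch that.")
            continue
        reply = respond(heard)
        speak(reply)
        if reply == "Goodbye!":
            break
```

Pass any function that returns recognized text (or `None` when nothing was understood) as `listen`, and any function that speaks a string as `speak`.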

Step 5: Handling Offline Recognition (Optional)

If you want your voice assistant to work offline, you can use the Vosk library. Here’s how you can integrate offline recognition:
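A minimal sketch of offline transcription with Vosk, assuming the vosk package's `Model` and `KaldiRecognizer` classes, a downloaded model folder (for example `models/your-vosk-model`) and a 16-bit mono PCM WAV file. The function names and the 4000-frame chunk size are assumptions.

```python
import json
import wave


def vosk_text(result_json: str) -> str:
    """Pull the transcript out of a Vosk result string such as '{"text": "hello"}'."""
    return json.loads(result_json).get("text", "")


def transcribe_wav_offline(wav_path: str, model_path: str) -> str:
    """Transcribe a 16-bit mono PCM WAV file with a local Vosk model (no network)."""
    from vosk import KaldiRecognizer, Model  # pip install vosk; model downloaded separately

    model = Model(model_path)
    with wave.open(wav_path, "rb") as wf:
        recognizer = KaldiRecognizer(model, wf.getframerate())
        pieces = []
        while True:
            data = wf.readframes(4000)
            if not data:
                break
            if recognizer.AcceptWaveform(data):  # true once an utterance is finalized
                pieces.append(vosk_text(recognizer.Result()))
        pieces.append(vosk_text(recognizer.FinalResult()))  # flush the last utterance
    return " ".join(p for p in pieces if p)
```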

Step 6: Putting It All Together

Here’s the complete code for a voice assistant that uses both online and offline speech recognition:
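As a sketch of the online/offline combination, assuming the online recognizer signals network trouble with an exception, the helper below tries the online backend first and falls back to the offline one. `recognize_with_fallback` is a hypothetical name; with SpeechRecognition you would likely pass `(sr.RequestError,)` as `network_errors`, since `recognize_google()` raises `RequestError` when the service cannot be reached.

```python
def recognize_with_fallback(audio, online, offline, network_errors=(OSError,)):
    """Try the online recognizer first; fall back to the offline one on network errors.

    online/offline are callables that take the audio and return text. Which
    exceptions mean "network unavailable" depends on the online backend.
    Returns (text, "online" or "offline").
    """
    try:
        return online(audio), "online"
    except network_errors:
        return offline(audio), "offline"
```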

Flowchart for the Voice Assistant

Here’s a flowchart to help visualize the process:

Conclusion

Creating a voice assistant with Python and Google Speech Recognition is a fun and rewarding project. With these steps, you’ve taken the first leap into the world of natural language processing and speech recognition. Remember, practice makes perfect, so don’t be afraid to experiment and add more features to your voice assistant. Happy coding!

Speech Recognition Using Google Speech API and Python

Introduction

Speech Recognition

Speech recognition is a part of Natural Language Processing, which is a subfield of Artificial Intelligence. Put simply, speech recognition is the ability of computer software to identify words and phrases in spoken language and convert them to human-readable text. It is used in applications such as voice assistants, home automation, voice-based chatbots, and voice-interactive robots.

There are several APIs (Application Programming Interfaces) for recognizing speech, some free and some paid. These include:

- Google Speech Recognition

- Google Cloud Speech API

- Microsoft Bing Voice Recognition

- Houndify API

- IBM Speech To Text

- Snowboy Hotword Detection

We will be using Google Speech Recognition here, as it doesn't require an API key. This tutorial introduces how to use the Google Speech Recognition library in Python with an external microphone such as the ReSpeaker USB 4-Mic Array from Seeed Studio. An external microphone is not mandatory, though; a laptop's built-in microphone works as well.

Step 1: ReSpeaker USB 4-Mic Array

The ReSpeaker USB Mic is a quad-microphone device designed for AI and voice applications, developed by Seeed Studio. It has four high-performance, built-in omnidirectional microphones designed to pick up your voice from anywhere in the room, and 12 programmable RGB LED indicators. The ReSpeaker USB Mic supports Linux, macOS, and Windows. Details can be found on Seeed Studio's website.

The ReSpeaker USB Mic comes in a nice package containing the following items:

- A user guide

- ReSpeaker USB Mic Array

- Micro USB to USB Cable

So we're ready to get started.

Step 2: Install Required Libraries

For this tutorial, I’ll assume you are using Python 3.x.

Let's install the libraries, starting with SpeechRecognition: pip3 install SpeechRecognition

For macOS, first install PortAudio with Homebrew (brew install portaudio), and then install PyAudio with pip3: pip3 install pyaudio

For Linux, you can install PyAudio with apt: sudo apt-get install python3-pyaudio

For Windows, you can install PyAudio with pip: pip install pyaudio

Create a new Python file named get_index.py and paste the code snippet below into it.

Run it with the following command: python3 get_index.py

In my case, the command printed the following output:

In the code snippet below, change device_index to the index of the device you want to use.

I chose device index 1 because the ReSpeaker 4-Mic Array will be the main audio source.
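A sketch of what get_index.py might contain, assuming SpeechRecognition's `Microphone.list_microphone_names()` (which needs PyAudio). `find_device_index` is a hypothetical helper that picks a device by name; the exact name your ReSpeaker reports may differ from the example in the usage note.

```python
def find_device_index(names, keyword):
    """Index of the first device whose name contains keyword (case-insensitive), else None."""
    keyword = keyword.lower()
    for index, name in enumerate(names):
        if keyword in name.lower():
            return index
    return None


def list_microphones():
    """Print every audio device with its index and return the list of names."""
    import speech_recognition as sr  # pip install SpeechRecognition PyAudio

    names = sr.Microphone.list_microphone_names()
    for index, name in enumerate(names):
        print(f"{index}: {name}")
    return names
```

For example, `sr.Microphone(device_index=find_device_index(list_microphones(), "ReSpeaker"))` would select the array, and falls back to the default microphone when the helper returns `None`.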

Step 3: Text-to-speech in Python With Pyttsx3 Library

There are several libraries for converting text to speech in Python. One of them is pyttsx3, which in my opinion is the best available text-to-speech package. It works on Windows, macOS, and Linux; see the official documentation for details.

Install the package with pip: pip install pyttsx3

On Windows, you will also need the pypiwin32 package, which pyttsx3 uses to access the native Windows speech API.

Convert text to speech with a Python script. Below is the code snippet for text to speech using pyttsx3:

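```python
import pyttsx3

engine = pyttsx3.init()
engine.setProperty('rate', 150)    # speaking rate in words per minute
engine.setProperty('volume', 0.9)  # volume from 0.0 to 1.0
engine.say("Hello, world!")        # queue the text to be spoken
engine.runAndWait()                # block until the queued text has been spoken
```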

Step 4: Putting It All Together: Building Speech Recognition With Python Using Google Speech Recognition API and Pyttsx3 Library

The code below recognizes human speech using Google Speech Recognition and converts the recognized text into speech using the pyttsx3 library.

It prints the recognized text in the terminal and also speaks it aloud.

I hope you now have a better understanding of how speech recognition works in general and, most importantly, how to implement it using the Google Speech Recognition API with Python.

If you have any questions or feedback, leave a comment below. Stay tuned!

Python Speech Recognition With Google Speech

In this article I want to show you an example of Python speech recognition with Google Speech. SpeechRecognition is a library for performing speech recognition, with support for several engines and APIs, online and offline.


What is Google Speech?

The SpeechRecognition library provides a simple and easy-to-use Python interface to different speech recognition engines and APIs, including Google Speech Recognition. You can use it in your Python programs to transcribe spoken audio into text, and its Google Speech Recognition backend lets you leverage Google's speech recognition capabilities in your applications.

This is a brief overview of what you can do with the SpeechRecognition library:

- Audio Input: Capture audio input from different sources such as microphones, audio files, or online streams.

- Speech Recognition: Recognize and transcribe spoken audio into text, either in real time or from recorded audio files.

- Multiple Recognition Engines: Choose among several recognition engines, including Google Speech Recognition and CMU Sphinx, to find the one that best fits your needs.

- Language Support: Recognize speech in multiple languages and dialects, depending on the capabilities of the underlying recognition engine.

- Simple Interface: A straightforward API for speech recognition tasks makes it easy to integrate into your Python applications.

Speech recognition engine/API support

- CMU Sphinx (works offline)

- Google Speech Recognition

- Google Cloud Speech API

- Microsoft Bing Voice Recognition

- Houndify API

- IBM Speech to Text

- Snowboy Hotword Detection (works offline)

How to Install Google Speech?

You can install the SpeechRecognition library with pip: pip install SpeechRecognition

You also need to install PyAudio (pip install pyaudio) if you want to use microphone input.

Requirements

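- Python 3.9+ (required by current releases such as SpeechRecognition 3.12.0; much older releases also supported Python 2.6, 2.7, and 3.3+)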

- PyAudio 0.2.11+ (required only if you need to use microphone input, Microphone )

- PocketSphinx (required only if you need to use the Sphinx recognizer, recognizer_instance.recognize_sphinx )

- Google API Client Library for Python (required only if you need to use the Google Cloud Speech API, recognizer_instance.recognize_google_cloud )

- FLAC encoder (required only if the system is not x86-based Windows/Linux/OS X)

So now this is the complete code for Python Speech Recognition With Google Speech

This Python code utilizes the speech_recognition library to capture audio input from the default microphone, transcribe it into text using Google Speech Recognition, and save both the audio and the recognized text. It handles errors that may occur during the speech recognition process and provides instructions to install the necessary pyaudio module if it’s missing.
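A minimal sketch of the capture, transcribe and save flow described above, assuming SpeechRecognition's `Microphone`, `listen()`, `recognize_google()` and `AudioData.get_wav_data()`. The function names, the output file naming (recording.wav and recording.txt) and the PyAudio check via `importlib` are assumptions, not the article's code.

```python
import importlib.util
from pathlib import Path


def save_outputs(wav_bytes: bytes, text: str, basename: str = "recording") -> tuple[Path, Path]:
    """Write the captured audio (WAV bytes) and its transcript next to each other."""
    wav_path, txt_path = Path(f"{basename}.wav"), Path(f"{basename}.txt")
    wav_path.write_bytes(wav_bytes)
    txt_path.write_text(text, encoding="utf-8")
    return wav_path, txt_path


def record_and_save(basename: str = "recording") -> str:
    """Record one phrase, transcribe it with Google, and save both audio and text."""
    if importlib.util.find_spec("pyaudio") is None:
        raise RuntimeError("PyAudio is required for microphone input: pip install pyaudio")
    import speech_recognition as sr  # pip install SpeechRecognition

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:  # default system microphone
        print("Say something...")
        audio = recognizer.listen(source)
    try:
        text = recognizer.recognize_google(audio)
    except sr.UnknownValueError:
        text = ""  # speech was not understood; the audio is still saved
    except sr.RequestError as exc:
        raise RuntimeError(f"Could not reach Google Speech Recognition: {exc}") from exc
    save_outputs(audio.get_wav_data(), text, basename)  # get_wav_data() returns WAV bytes
    return text
```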

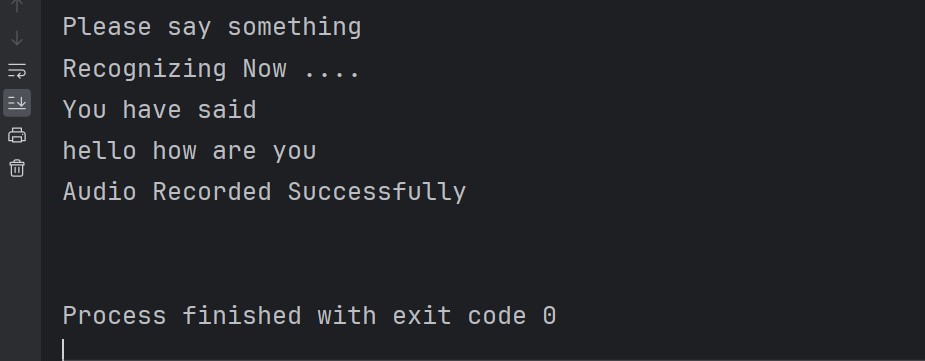

Run the the code and say something, this will be the result

Q: How do I use Google speech recognition in Python?

A: You can use Google speech recognition in Python with the speech_recognition library. This library provides a simple interface to different speech recognition engines and APIs, including Google Speech Recognition. Install it with pip install SpeechRecognition, then call the recognize_google() method of a Recognizer object to perform speech recognition using Google's service.

Q: How do I make Python perform automatic speech recognition?

A: To make Python perform automatic speech recognition, you can use the speech_recognition library together with the pyaudio library for capturing audio input from the microphone. Create a loop that continuously listens for audio input, performs speech recognition on the captured audio, and then takes appropriate actions based on the recognized speech.

Q: How do I use the Google TTS API in Python?

A: To use Google's text-to-speech (TTS) service in Python, you can use the gTTS library, which provides a simple interface to Google Translate's text-to-speech API and lets you convert text into speech. Install it with pip install gTTS, then create a gTTS object from your text and call its save() method to write the speech to an MP3 file.
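A minimal sketch, assuming gTTS's `gTTS(text, lang=...)` constructor and `save()` method. `text_to_mp3` is a hypothetical name, and the `tts_class` parameter only exists so another class with the same interface can be substituted. gTTS itself needs network access.

```python
def text_to_mp3(text: str, path: str, lang: str = "en", tts_class=None) -> str:
    """Synthesize text with gTTS and save it as an MP3 file; returns the path."""
    if tts_class is None:
        from gtts import gTTS as tts_class  # pip install gTTS
    tts_class(text, lang=lang).save(path)  # lang is a language code such as "en" or "fr"
    return path
```

For example, `text_to_mp3("Hello, world!", "hello.mp3")` would write hello.mp3 in the current directory.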

Q: Is Python good for speech recognition?

A: Yes, Python is well suited for speech recognition tasks. It is a simple language, and libraries such as speech_recognition, pyaudio, and gTTS make it easy to perform speech recognition, capture audio input, and generate speech output.

