- Published: 25 January 2021

Online education in the post-COVID era

- Barbara B. Lockee 1

Nature Electronics volume 4, pages 5–6 (2021)

- Science, technology and society

The coronavirus pandemic has forced students and educators across all levels of education to rapidly adapt to online learning. The impact of this — and the developments required to make it work — could permanently change how education is delivered.

The COVID-19 pandemic has forced the world to engage in the ubiquitous use of virtual learning. And while online and distance learning has been used before to maintain continuity in education, such as in the aftermath of earthquakes 1 , the scale of the current crisis is unprecedented. Speculation has now also begun about what the lasting effects of this will be and what education may look like in the post-COVID era. For some, an immediate retreat to the traditions of the physical classroom is required. But for others, the forced shift to online education is a moment of change and a time to reimagine how education could be delivered 2 .

Looking back

Online education has traditionally been viewed as an alternative pathway, one that is particularly well suited to adult learners seeking higher education opportunities. However, the emergence of the COVID-19 pandemic has required educators and students across all levels of education to adapt quickly to virtual courses. (The term ‘emergency remote teaching’ was coined in the early stages of the pandemic to describe the temporary nature of this transition 3 .) In some cases, instruction shifted online, then returned to the physical classroom, and then shifted back online due to further surges in the rate of infection. In other cases, instruction was offered using a combination of remote delivery and face-to-face: that is, students can attend online or in person (referred to as the HyFlex model 4 ). In either case, instructors just had to figure out how to make it work, considering the affordances and constraints of the specific learning environment to create learning experiences that were feasible and effective.

The use of varied delivery modes does, in fact, have a long history in education. Mechanical (and then later electronic) teaching machines have provided individualized learning programmes since the 1950s and the work of B. F. Skinner 5 , who proposed using technology to walk individual learners through carefully designed sequences of instruction with immediate feedback indicating the accuracy of their response. Skinner’s notions formed the first formalized representations of programmed learning, or ‘designed’ learning experiences. Then, in the 1960s, Fred Keller developed a personalized system of instruction 6 , in which students first read assigned course materials on their own, followed by one-on-one assessment sessions with a tutor, gaining permission to move ahead only after demonstrating mastery of the instructional material. Occasional class meetings were held to discuss concepts, answer questions and provide opportunities for social interaction. A personalized system of instruction was designed on the premise that initial engagement with content could be done independently, then discussed and applied in the social context of a classroom.

These predecessors to contemporary online education leveraged key principles of instructional design — the systematic process of applying psychological principles of human learning to the creation of effective instructional solutions — to consider which methods (and their corresponding learning environments) would effectively engage students to attain the targeted learning outcomes. In other words, they considered what choices about the planning and implementation of the learning experience can lead to student success. Such early educational innovations laid the groundwork for contemporary virtual learning, which itself incorporates a variety of instructional approaches and combinations of delivery modes.

Online learning and the pandemic

Fast forward to 2020, and various further educational innovations have occurred to make the universal adoption of remote learning a possibility. One key challenge is access. Here, extensive problems remain, including the lack of Internet connectivity in some locations, especially rural ones, and the competing needs among family members for the use of home technology. However, creative solutions have emerged to provide students and families with the facilities and resources needed to engage in and successfully complete coursework 7 . For example, school buses have been used to provide mobile hotspots, and class packets have been sent by mail and instructional presentations aired on local public broadcasting stations. The year 2020 has also seen increased availability and adoption of electronic resources and activities that can now be integrated into online learning experiences. Synchronous online conferencing systems, such as Zoom and Google Meet, have allowed experts from anywhere in the world to join online classrooms 8 and have allowed presentations to be recorded for individual learners to watch at a time most convenient for them. Furthermore, the importance of hands-on, experiential learning has led to innovations such as virtual field trips and virtual labs 9 . A capacity to serve learners of all ages has thus now been effectively established, and the next generation of online education can move from an enterprise that largely serves adult learners and higher education to one that increasingly serves younger learners, in primary and secondary education and from ages 5 to 18.

The COVID-19 pandemic is also likely to have a lasting effect on lesson design. The constraints of the pandemic provided an opportunity for educators to consider new strategies to teach targeted concepts. Though the rethinking of instructional approaches was forced and hurried, the experience has served as a rare chance to reconsider strategies that best facilitate learning within the affordances and constraints of the online context. In particular, greater variance in teaching and learning activities will continue to question the importance of ‘seat time’ as the standard on which educational credits are based 10 ; lengthy Zoom sessions are seldom instructionally necessary and are not aligned with the psychological principles of how humans learn. Interaction is important for learning, but forced interaction among students for the sake of interaction is neither motivating nor beneficial.

While the blurring of the lines between traditional and distance education has been noted for several decades 11 , the pandemic has quickly advanced the erasure of these boundaries. Less single mode, more multi-mode (and thus more educator choices) is becoming the norm due to enhanced infrastructure and developed skill sets that allow people to move across different delivery systems 12 . The well-established best practices of hybrid or blended teaching and learning 13 have served as a guide for new combinations of instructional delivery that have developed in response to the shift to virtual learning. The use of multiple delivery modes is likely to remain, and will be a feature employed with learners of all ages 14 , 15 . Future iterations of online education will no longer be bound to the traditions of single teaching modes, as educators can support pedagogical approaches from a menu of instructional delivery options, a mix that has been supported by previous generations of online educators 16 .

Also significant are the changes to how learning outcomes are determined in online settings. Many educators have altered the ways in which student achievement is measured, eliminating assignments and changing assessment strategies altogether 17 . Such alterations include determining learning through strategies that leverage the online delivery mode, such as interactive discussions, student-led teaching and the use of games to increase motivation and attention. Specific changes that are likely to continue include flexible or extended deadlines for assignment completion 18 , more student choice regarding measures of learning, and more authentic experiences that involve the meaningful application of newly learned skills and knowledge 19 , for example, team-based projects that involve multiple creative and social media tools in support of collaborative problem solving.

In response to the COVID-19 pandemic, technological and administrative systems for implementing online learning, and the infrastructure that supports its access and delivery, had to adapt quickly. While access remains a significant issue for many, extensive resources have been allocated and processes developed to connect learners with course activities and materials, to facilitate communication between instructors and students, and to manage the administration of online learning. Paths for greater access and opportunities to online education have now been forged, and there is a clear route for the next generation of adopters of online education.

Before the pandemic, the primary purpose of distance and online education was providing access to instruction for those otherwise unable to participate in a traditional, place-based academic programme. As its purpose has shifted to supporting continuity of instruction, its audience, as well as the wider learning ecosystem, has changed. It will be interesting to see which aspects of emergency remote teaching remain in the next generation of education, when the threat of COVID-19 is no longer a factor. But online education will undoubtedly find new audiences. And the flexibility and learning possibilities that have emerged from necessity are likely to shift the expectations of students and educators, diminishing further the line between classroom-based instruction and virtual learning.

References

1. Mackey, J., Gilmore, F., Dabner, N., Breeze, D. & Buckley, P. J. Online Learn. Teach. 8, 35–48 (2012).

2. Sands, T. & Shushok, F. The COVID-19 higher education shove. Educause Review https://go.nature.com/3o2vHbX (16 October 2020).

3. Hodges, C., Moore, S., Lockee, B., Trust, T. & Bond, M. A. The difference between emergency remote teaching and online learning. Educause Review https://go.nature.com/38084Lh (27 March 2020).

4. Beatty, B. J. (ed.) Hybrid-Flexible Course Design Ch. 1.4 https://go.nature.com/3o6Sjb2 (EdTech Books, 2019).

5. Skinner, B. F. Science 128, 969–977 (1958).

6. Keller, F. S. J. Appl. Behav. Anal. 1, 79–89 (1968).

7. Darling-Hammond, L. et al. Restarting and Reinventing School: Learning in the Time of COVID and Beyond (Learning Policy Institute, 2020).

8. Fulton, C. Information Learn. Sci. 121, 579–585 (2020).

9. Pennisi, E. Science 369, 239–240 (2020).

10. Silva, E. & White, T. Change The Magazine Higher Learn. 47, 68–72 (2015).

11. McIsaac, M. S. & Gunawardena, C. N. in Handbook of Research for Educational Communications and Technology (ed. Jonassen, D. H.) Ch. 13 (Simon & Schuster Macmillan, 1996).

12. Irvine, V. The landscape of merging modalities. Educause Review https://go.nature.com/2MjiBc9 (26 October 2020).

13. Stein, J. & Graham, C. Essentials for Blended Learning Ch. 1 (Routledge, 2020).

14. Maloy, R. W., Trust, T. & Edwards, S. A. Variety is the spice of remote learning. Medium https://go.nature.com/34Y1NxI (24 August 2020).

15. Lockee, B. J. Appl. Instructional Des. https://go.nature.com/3b0ddoC (2020).

16. Dunlap, J. & Lowenthal, P. Open Praxis 10, 79–89 (2018).

17. Johnson, N., Veletsianos, G. & Seaman, J. Online Learn. 24, 6–21 (2020).

18. Vaughan, N. D., Cleveland-Innes, M. & Garrison, D. R. Assessment in Teaching in Blended Learning Environments: Creating and Sustaining Communities of Inquiry (Athabasca Univ. Press, 2013).

19. Conrad, D. & Openo, J. Assessment Strategies for Online Learning: Engagement and Authenticity (Athabasca Univ. Press, 2018).

Author information

Authors and affiliations.

School of Education, Virginia Tech, Blacksburg, VA, USA

Barbara B. Lockee

Corresponding author

Correspondence to Barbara B. Lockee.

Ethics declarations

Competing interests.

The author declares no competing interests.

About this article

Cite this article.

Lockee, B.B. Online education in the post-COVID era. Nat Electron 4, 5–6 (2021). https://doi.org/10.1038/s41928-020-00534-0

Issue Date: January 2021

DOI: https://doi.org/10.1038/s41928-020-00534-0

The effects of online education on academic success: A meta-analysis study

Received 2020 Dec 6; Accepted 2021 Aug 30; Issue date 2022.

This article is made available via the PMC Open Access Subset for unrestricted research re-use and secondary analysis in any form or by any means with acknowledgement of the original source. These permissions are granted for the duration of the World Health Organization (WHO) declaration of COVID-19 as a global pandemic.

The purpose of this study is to analyze the effect of online education, which has been used extensively since the beginning of the pandemic, on student achievement. To this end, a meta-analysis was carried out of related studies, conducted in several countries between 2010 and 2021, that focus on the effect of online education on students’ academic achievement. The study also provides a source to help future studies compare the effect of online education on academic achievement before and after the pandemic. The meta-analysis comprises 27 studies conducted in the USA, Taiwan, Turkey, China, the Philippines, Ireland, and Georgia. All included studies are experimental, and the total sample size is 1772. The funnel plot, Duval and Tweedie’s Trim and Fill Analysis, Orwin’s Fail-Safe N Analysis, and Egger’s Regression Test were used to assess publication bias, which was found to be quite low. Hedges’ g was used to measure the effect size for the difference between means, calculated under the random effects model. The results show that the effect size of online education on academic achievement is at a medium level. The heterogeneity tests show that the effect size does not differ by class level, country, online education approach, or lecture moderators.

Keywords: Online education, Student achievement, Academic success, Meta-analysis

Introduction

Information and communication technologies have become a powerful force in transforming educational settings around the world. The pandemic has been an important factor in moving traditional physical classrooms toward information and communication technologies, and it has accelerated that transformation. The literature indicates that students are highly satisfied with learning environments connected to information and communication technologies. Interest in technology-based learning environments therefore needs to be maintained. Technology has clearly had a huge impact on young people's online lives, and this digital revolution can bring together the educational ambitions and interests of digitally addicted students. In essence, COVID-19 has provided an opportunity to embrace online learning, as education systems have to keep up with the rapid emergence of new technologies.

Information and communication technologies, which affect all spheres of life, are also actively used in education. With recent developments, using technology in education has become inevitable for personal and social reasons (Usta, 2011a). Online education is one example of the use of information and communication technologies that has followed from these developments. Online learning is a popular way of obtaining instruction (Demiralay et al., 2016; Pillay et al., 2007); Horton (2000) defines it as education performed through a web browser or an online application without requiring extra software or a learning source. Online learning is also described as using the internet to obtain learning sources during the learning process, to interact with the content, the teacher, and other learners, and to get support throughout the learning process (Ally, 2004). Its benefits include learning independently at any time and place (Vrasidas & McIsaac, 2000), convenience (Poole, 2000), flexibility (Chizmar & Walbert, 1999), self-regulation skills (Usta, 2011b), collaborative learning, and the opportunity to plan one's own learning process.

Even though online education was not as widespread in the past as it is now, the internet and computers have long been used in education as alternative learning tools, in step with advances in technology. The first distance education attempt in the world was the ‘Steno Courses’ announcement published in a Boston newspaper in 1728. In the nineteenth century, Sweden University started the “Correspondence Composition Courses” for women, and University Correspondence College was afterwards founded for correspondence courses in 1843 (Arat & Bakan, 2011). More recently, distance education has been delivered through computers, assisted by internet technologies, and it has since evolved into a mobile education practice driven by faster internet connections and the development of mobile devices.

With the emergence of the COVID-19 pandemic, face-to-face education almost came to a halt, and online education gained significant importance. The Microsoft management team reported 750 users involved in online education activities on March 10, just before the pandemic; by March 24, it reported that the number had risen to 138,698 users (OECD, 2020). This event supports the view that online education should be used widely rather than only as an alternative educational tool for times when students do not have the opportunity for face-to-face education (Geostat, 2019). The pandemic emerged as a sudden period of limited opportunities, during which face-to-face education stopped for a long time. The global spread of COVID-19 affected more than 850 million students around the world and caused the suspension of face-to-face education. Different countries proposed several solutions to maintain education during the pandemic. Schools had to change their curricula, and many countries supported online education practices soon after the pandemic began. In other words, traditional education gave way to online education practices. At least 96 countries have been motivated to access online libraries, TV broadcasts, instructions, sources, video lectures, and online channels (UNESCO, 2020). In this difficult period, educational institutions moved to online education with the help of large companies and platforms such as Microsoft, Google, Zoom, Skype, FaceTime, and Slack. As a result, online education has been discussed on the education agenda more intensively than ever before.

Although online education approaches were not used as comprehensively in the past as they are now, they have long served as an alternative learning approach, developing in parallel with technology, the internet, and computers. Online education approaches are often employed with the aim of promoting students' academic achievement. Academics in various countries have accordingly conducted many studies evaluating online education approaches and published the results. However, the accumulation of scientific data on online education approaches makes it difficult to keep, organize, and synthesize the findings. Studies in this area are being conducted at an increasing rate, making it difficult for scientists to be aware of all the research outside their expertise. Another problem is that online education studies are repetitive: studies often use slightly different methods, measures, and/or examples to avoid duplication. This approach makes it difficult to distinguish significant differences among the related results. In other words, if the results of the studies differ significantly, it may be difficult to say which source of variation explains the differences. One obvious solution is to systematically review the results of various studies and uncover the sources of variation. One method of performing such systematic syntheses is meta-analysis, a methodological and statistical approach to drawing conclusions from the literature. At this point, how effective online education applications are in increasing academic success is an important question. Has online education, which is likely to be encountered frequently as the pandemic continues, been successful in the last ten years? If so, how large was the impact? Did different variables affect this impact?

Academics across the globe have carried out studies evaluating online education platforms and published the results (Chiao et al., 2018). It is important to evaluate the results of the studies that have been published so far and of those that will be published in the future. The questions above, together with the question of what should be considered in future online education practices, motivated this study. We conducted a comprehensive meta-analysis that aims to provide a platform for discussing how to develop efficient online programs for educators and policy makers, by reviewing the related studies on online education, presenting the effect size, and revealing the effect of diverse variables on the general impact.

There have been many critical discussions and comprehensive studies on the differences between online and face-to-face learning; however, the focus of this paper is different in that it clarifies the magnitude of the effect of online education and the teaching process, and it identifies which factors should be controlled to help increase the effect size. The purpose is to support informed decisions in the implementation of online education.

This study determines the general impact of online education on academic achievement, providing a general overview of online education, which has been practiced and discussed intensively during the pandemic. The general impact is also analyzed with respect to different variables. In other words, the study makes it possible to evaluate the results in the related literature as a whole and to analyze them across several cultures, lectures, and class levels. With these points in mind, the study seeks to answer the following research questions:

What is the effect size of online education on academic achievement?

How do the effect sizes of online education on academic achievement change according to the moderator variable of the country?

How do the effect sizes of online education on academic achievement change according to the moderator variable of the class level?

How do the effect sizes of online education on academic achievement change according to the moderator variable of the lecture?

How do the effect sizes of online education on academic achievement change according to the moderator variable of the online education approaches?

This study aims at determining the effect size of online education, which has been highly used since the beginning of the pandemic, on students’ academic achievement in different courses by using a meta-analysis method. Meta-analysis is a synthesis method that enables gathering of several study results accurately and efficiently, and getting the total results in the end (Tsagris & Fragkos, 2018 ).

Selecting and coding the data (studies)

The literature for the meta-analysis was reviewed in July 2020, and a follow-up review was conducted in September 2020. The purpose of the follow-up review was to include studies that were published while this study was being conducted and that met the inclusion criteria. However, the follow-up review found no further studies to include.

To access studies for the meta-analysis, the Web of Science, ERIC, and SCOPUS databases were searched using the keywords ‘online learning and online education’. Not every database has a search engine that grants access to studies through keywords, and this obstacle was considered an important problem to overcome. The researcher therefore used a purpose-built platform: through the open access system of Cukurova University Library, detailed searches were run using EBSCO Information Services (EBSCO), which allows the whole collection of research to be searched through a single search box. Since the fundamental variables of this study are online education and online learning, the literature in these databases (Web of Science, ERIC, and SCOPUS) was systematically reviewed using those keywords. Within this scope, 225 articles were accessed and entered in the coding key list formed by the researcher. The coding key recorded the names of the researchers, the year, the database (Web of Science, ERIC, and SCOPUS), the sample group and size, the lectures in which academic achievement was tested, the country in which the study was conducted, and the class levels.

The following criteria were identified for including the 225 research studies, which were coded based on the theoretical basis of the meta-analysis study: (1) the studies should be published in refereed journals between the years 2010 and 2021, (2) the studies should be experimental studies that try to determine the effect of online education and online learning on academic achievement, (3) the values of the stated variables, or the statistics required to calculate these values, should be stated in the results of the studies, and (4) the sample group of the study should be at a primary education level. These criteria also served as exclusion criteria, in the sense that studies that did not meet them were not included in the present study.

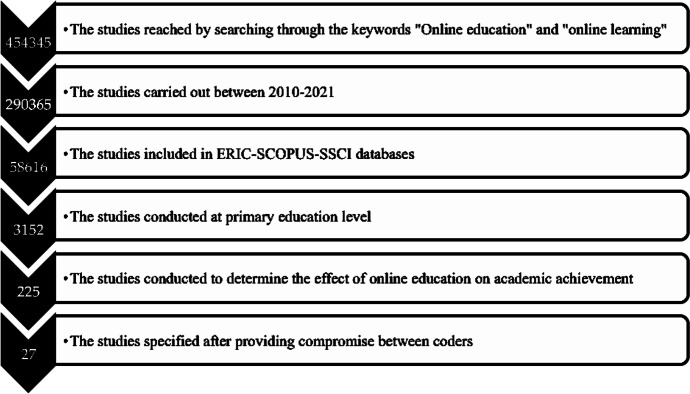

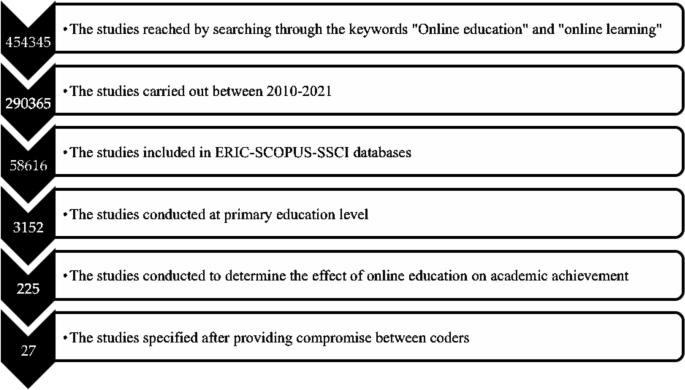

After the inclusion criteria were determined, a systematic review was conducted through EBSCO, following the year criterion of the study. Within this scope, 290,365 studies that analyze the effect of online education and online learning on academic achievement were accessed. The databases (Web of Science, ERIC, and SCOPUS) were then applied as a filter in line with the inclusion criteria, which reduced the number of studies to 58,616. Afterwards, the keyword ‘primary education’ was used as a filter, and the number of studies decreased to 3152. Lastly, the literature was filtered using the keyword ‘academic achievement’, and 225 studies were accessed. All the information on these 225 articles was entered in the coding key.

Coders need to review the related studies accurately and check the validity, safety, and accuracy of the studies (Stewart & Kamins, 2001). Within this scope, the studies identified on the basis of the variables used in this study were first reviewed by three researchers from the primary education field; the accessed studies were then combined and entered in the coding key by the researcher. All the studies in the coding key were analyzed against the inclusion criteria by all the researchers in meetings, and it was decided that 27 studies met the inclusion criteria (Atici & Polat, 2010; Carreon, 2018; Ceylan & Elitok Kesici, 2017; Chae & Shin, 2016; Chiang et al., 2014; Ercan, 2014; Ercan et al., 2016; Gwo-Jen et al., 2018; Hayes & Stewart, 2016; Hwang et al., 2012; Kert et al., 2017; Lai & Chen, 2010; Lai et al., 2015; Meyers et al., 2015; Ravenel et al., 2014; Sung et al., 2016; Wang & Chen, 2013; Yu, 2019; Yu & Chen, 2014; Yu & Pan, 2014; Yu et al., 2010; Zhong et al., 2017). The data from the studies meeting the inclusion criteria were independently entered in a second coding key by three researchers, and consensus meetings were arranged for further discussion. After the meetings, the researchers agreed that the data had been coded accurately and precisely. Once the effect sizes and heterogeneity of the study had been identified, moderator variables that could show differences between the effect sizes were determined. The data on these moderator variables were added to the coding key by three researchers, and a new consensus meeting was arranged. After that meeting, the researchers agreed that the moderator variables had been coded accurately and precisely.

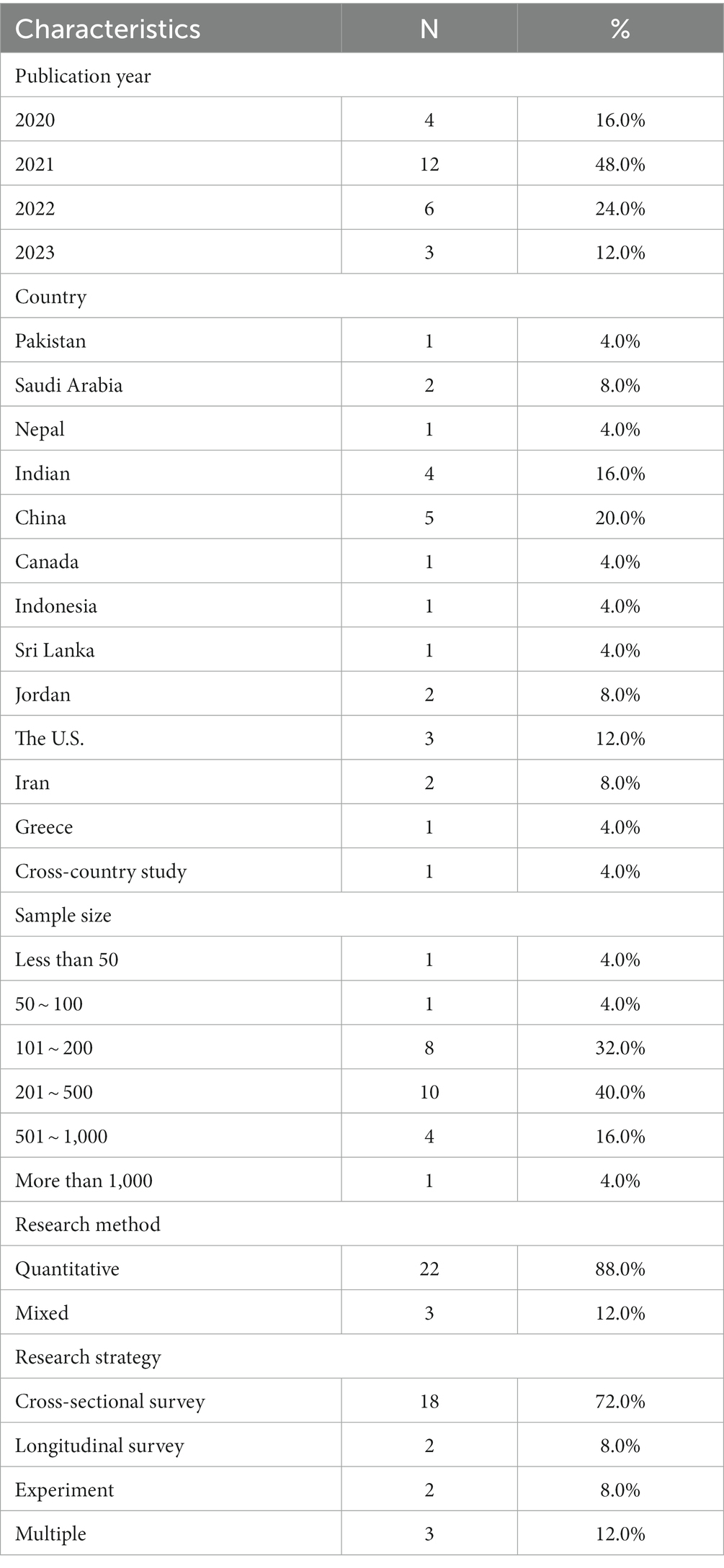

Study group

Twenty-seven studies are included in the meta-analysis, with a total sample size of 1772. The characteristics of the included studies are given in Table 1.

The characteristics of the studies included in the meta-analysis

Publication bias

Publication bias is the limited capability of the published studies on a research subject to represent all completed studies on the same subject (Card, 2011; Littell et al., 2008). Put differently, it is a relationship between the probability that a study on a subject is published and the effect size and significance that it produces. Publication bias may occur when researchers choose not to publish a study because it failed to produce the expected results, or when a study is not accepted by scientific journals and is consequently left out of the research synthesis (Makowski et al., 2019). A high probability of publication bias in a meta-analysis negatively affects the accuracy of the combined effect size (Pecoraro, 2018), causing the average effect size to be reported differently than it should be (Borenstein et al., 2009). For this reason, the possibility of publication bias in the included studies was tested before determining the effect sizes of the relationships between the stated variables. In this meta-analysis, it was examined using the funnel plot, Orwin’s Fail-Safe N analysis, Duval and Tweedie’s Trim and Fill analysis, and Egger’s Regression Test.
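Of the tests named above, Egger's regression is the easiest to illustrate in a few lines. The sketch below is a minimal version under stated assumptions: it regresses the standardized effect (effect divided by its standard error) on precision (the inverse of the standard error) by ordinary least squares and returns the intercept with its t statistic. The function name `egger_regression` and the example numbers are hypothetical and are not taken from the studies in this meta-analysis; the software the authors actually used may differ in detail.

```python
import math

def egger_regression(effects, std_errors):
    """Return (intercept, se_intercept, t_stat) for Egger's regression.

    The standardized effect (effect / SE) is regressed on precision (1 / SE).
    An intercept far from zero hints at funnel-plot asymmetry.
    """
    k = len(effects)
    if k < 3:
        raise ValueError("Egger's regression needs at least three studies")
    precision = [1.0 / se for se in std_errors]
    standardized = [g / se for g, se in zip(effects, std_errors)]
    x_bar = sum(precision) / k
    y_bar = sum(standardized) / k
    sxx = sum((x - x_bar) ** 2 for x in precision)
    if sxx == 0:
        raise ValueError("standard errors must not all be identical")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(precision, standardized))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance with k - 2 degrees of freedom.
    rss = sum((y - intercept - slope * x) ** 2
              for x, y in zip(precision, standardized))
    se_intercept = math.sqrt(rss / (k - 2) * (1.0 / k + x_bar ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return intercept, se_intercept, t_stat

# Hypothetical effect sizes and standard errors, for illustration only.
example_effects = [0.41, 0.4125, 0.525, 0.85]
example_ses = [0.1, 0.125, 0.25, 0.5]
intercept, se_intercept, t_stat = egger_regression(example_effects, example_ses)
print(f"intercept = {intercept:.3f}, t = {t_stat:.2f} on {len(example_effects) - 2} df")
```

The t statistic would be compared with a t distribution on k − 2 degrees of freedom; that step is omitted here to keep the sketch free of external dependencies.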

Selecting the model

After the probability of publication bias had been assessed, the statistical model used to calculate the effect sizes was selected. The two main approaches to effect size calculation, distinguished by how they treat between-study variance, are the fixed effects and random effects models (Pigott, 2012). The fixed effects model assumes that the combined studies are homogeneous in every characteristic apart from sample size, while the random effects model allows the parameters to vary between the studies (Cumming, 2012). The random effects model is based on the assumption that the effects estimated in different studies are not identical but follow a common distribution (Deeks et al., 2008). When calculating the average effect size under this model, it is therefore necessary to consider sources of variation beyond the sampling error of the combined studies, such as the characteristics of the participants and the duration, scope, and design of the study (Littell et al., 2008). In deciding on the model, the assumptions about the sample characteristics of the included studies and the inferences that the researcher aims to make should be taken into consideration. Because the sample characteristics of studies in the social sciences are affected by many parameters, the random effects model is more appropriate in this field. Besides, it is stated that inferences made with the random effects model extend beyond the studies included in the meta-analysis (Field, 2003; Field & Gillett, 2010). Therefore, using the random effects model also contributes to the generalization of research data. The criteria for statistical model selection indicate that the model should be chosen before the analysis is run, according to the nature of the meta-analysis (Borenstein et al., 2007; Littell et al., 2008).
Within this framework, it was decided to make use of the random effects model, considering that the students who are the samples of the studies included in the meta-analysis are from different countries and cultures, the sample characteristics of the studies differ, and the patterns and scopes of the studies vary as well.
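The practical difference between the two models can be shown with a small sketch. It pools study effects with inverse-variance weights under the fixed effects model and then under a random effects model using the DerSimonian-Laird estimate of between-study variance. The choice of DerSimonian-Laird is an assumption made for illustration, since the article does not name the estimator it used, and the function name `pool_effects` and the input numbers are hypothetical.

```python
import math

def pool_effects(effects, variances):
    """Pool effect sizes under fixed- and random-effects models.

    The random-effects estimate uses the DerSimonian-Laird estimator of the
    between-study variance tau^2 (an assumption made for this sketch).
    """
    k = len(effects)
    weights = [1.0 / v for v in variances]
    sum_w = sum(weights)
    fixed = sum(w * g for w, g in zip(weights, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(w * (g - fixed) ** 2 for w, g in zip(weights, effects))
    c = sum_w - sum(w ** 2 for w in weights) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Random-effects weights add tau^2 to each study's sampling variance.
    re_weights = [1.0 / (v + tau2) for v in variances]
    sum_re = sum(re_weights)
    random_mean = sum(w * g for w, g in zip(re_weights, effects)) / sum_re
    return {
        "fixed": fixed,
        "se_fixed": math.sqrt(1.0 / sum_w),
        "random": random_mean,
        "se_random": math.sqrt(1.0 / sum_re),
        "q": q,
        "tau2": tau2,
    }

# Hypothetical study effects and sampling variances, for illustration only.
result = pool_effects([0.10, 0.35, 0.60, 0.45], [0.02, 0.03, 0.05, 0.04])
print({name: round(value, 3) for name, value in result.items()})
```

When the estimated between-study variance is zero, the two pooled estimates coincide; as it grows, the random effects model weights studies more evenly and widens the standard error.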

Heterogeneity

Meta-analysis facilitates analyzing the research subject with different parameters by showing the level of diversity between the included studies. Accordingly, the present study evaluated whether the included studies are heterogeneously distributed. The heterogeneity of the combined studies was determined through the Q and I² tests. The Q test evaluates the probability that the differences between the observed results are due to chance (Deeks et al., 2008). A Q value exceeding the critical χ² value for the given degrees of freedom and significance level indicates heterogeneity of the combined effect sizes (Card, 2011). The I² test, which complements the Q test, shows the amount of heterogeneity in the effect sizes (Cleophas & Zwinderman, 2017). An I² value higher than 75% is interpreted as a high level of heterogeneity.
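For reference, the two statistics described above are commonly defined as follows. The notation is an assumption introduced here for illustration, since the article does not print the formulas: g_i is the effect size of study i, v_i its sampling variance, and k the number of studies, so that Q has k − 1 degrees of freedom.

```latex
w_i = \frac{1}{v_i}, \qquad
\bar{g} = \frac{\sum_{i=1}^{k} w_i \, g_i}{\sum_{i=1}^{k} w_i}, \qquad
Q = \sum_{i=1}^{k} w_i \left( g_i - \bar{g} \right)^2

I^2 = \max\!\left( 0,\; \frac{Q - (k - 1)}{Q} \right) \times 100\%
```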

When heterogeneity is encountered among the studies included in a meta-analysis, its causes can be analyzed by referring to the study characteristics. The study characteristics that may be related to the heterogeneity between the included studies can be examined through subgroup analysis or meta-regression analysis (Deeks et al., 2008). In determining the moderator variables, the present study considered whether the number of variables was sufficient, how the moderators relate to one another, and whether they could explain the differences between the results of the studies. Within this scope, it was predicted that heterogeneity in the effect of online education, which has been widely used since the beginning of the pandemic, on students’ academic achievement could be explained by the country, class level, and lecture moderator variables. Where the number of effect sizes within a subcategory of a variable was not sufficient for moderator analysis, some subgroups were evaluated and categorized together (e.g. the countries where the studies were conducted).
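A subgroup (moderator) analysis of the kind described above is often summarized with a between-groups Q statistic. The sketch below is one minimal way to compute it under a fixed effects assumption: the total Q is partitioned into the Q within each subgroup and the remainder between subgroups. The function names and the sample data are hypothetical, and the procedure used by the authors' software may differ.

```python
from collections import defaultdict

def q_statistic(effects, variances):
    """Cochran's Q around the inverse-variance weighted mean."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * g for w, g in zip(weights, effects)) / sum(weights)
    return sum(w * (g - pooled) ** 2 for w, g in zip(weights, effects))

def q_between(effects, variances, labels):
    """Return (Q_between, degrees of freedom) for a categorical moderator."""
    groups = defaultdict(lambda: ([], []))
    for g, v, label in zip(effects, variances, labels):
        groups[label][0].append(g)
        groups[label][1].append(v)
    q_total = q_statistic(effects, variances)
    q_within = sum(q_statistic(gs, vs) for gs, vs in groups.values())
    return q_total - q_within, len(groups) - 1

# Hypothetical effect sizes, variances and country labels, for illustration only.
demo_q, demo_df = q_between(
    [0.30, 0.45, 0.50, 0.25, 0.40],
    [0.02, 0.03, 0.04, 0.02, 0.05],
    ["A", "A", "B", "B", "C"],
)
print(f"Q_between = {demo_q:.3f} on {demo_df} df")
```

The resulting value would be compared with a χ² distribution on the returned degrees of freedom; a non-significant result means the moderator does not explain differences between effect sizes.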

Interpreting the effect sizes

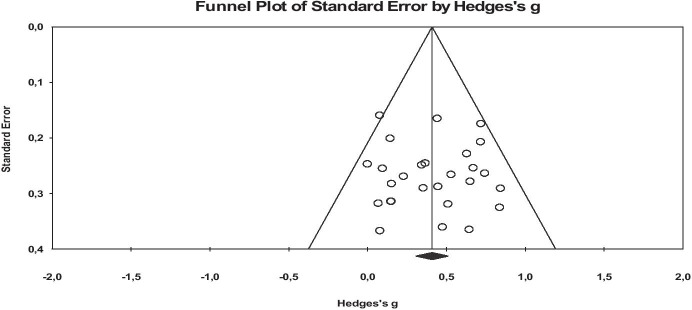

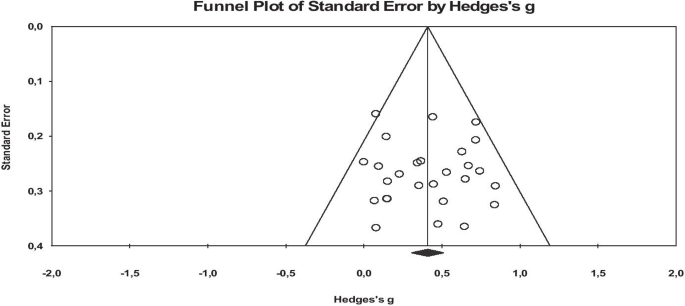

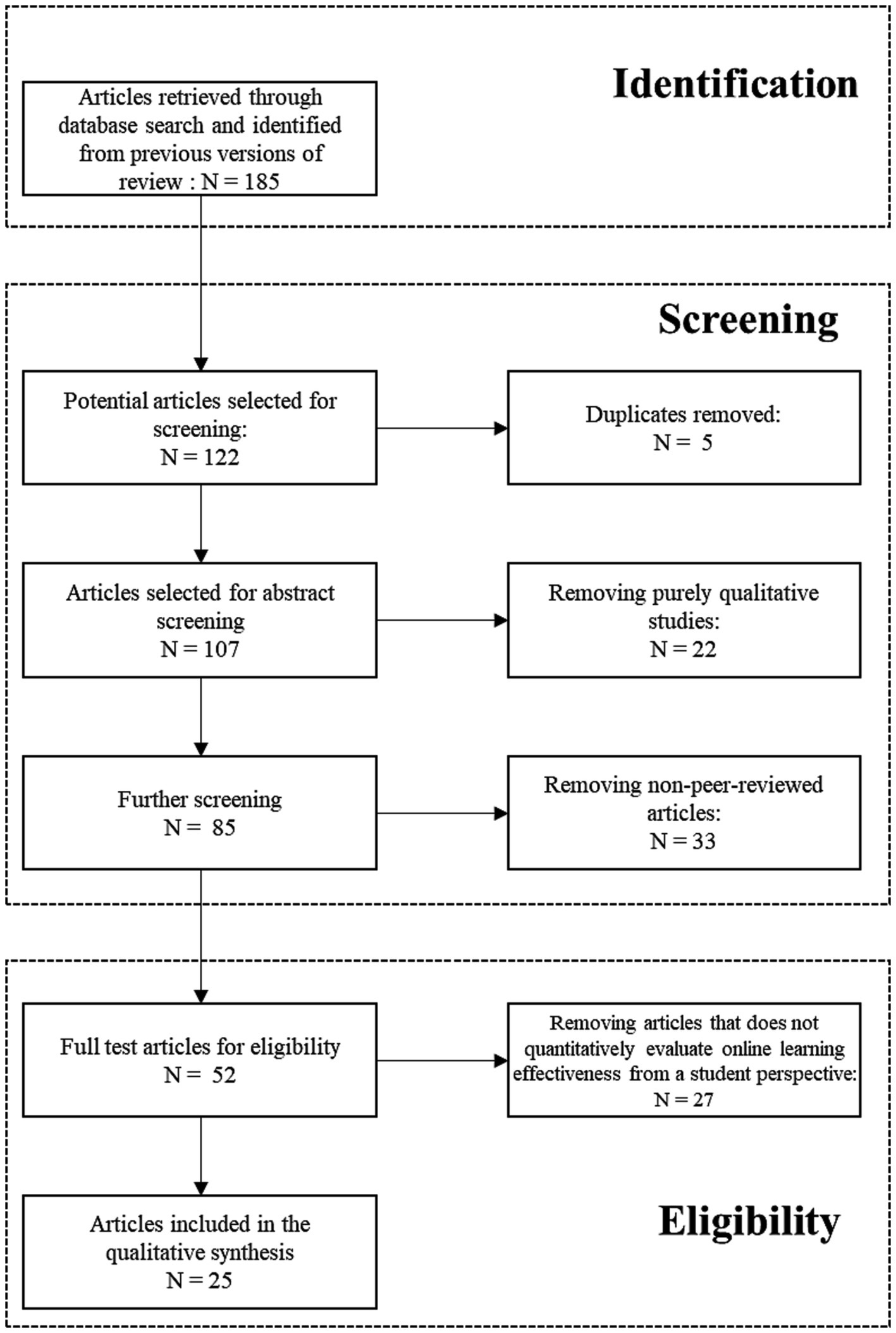

Effect size shows how strongly, and in which direction, the independent variable affects the dependent variable in each study included in the meta-analysis (Dinçer, 2014). In interpreting the effect sizes obtained from the meta-analysis, the classification of Cohen et al. (2007) was used. Whether the specified relationships differ according to the country, class level, and school subject variables of the studies was examined through the Q test, degrees of freedom, and p significance value (Figs. 1 and 2).
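Since the study reports its results as Hedges g, a short sketch of how that statistic is usually derived from two group summaries may help. It computes the standardized mean difference with a pooled standard deviation and applies the common small-sample correction factor J = 1 − 3 / (4·df − 1). The function name `hedges_g` and the example scores are hypothetical and do not come from any of the included studies.

```python
import math

def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Return (g, variance of g) for a treatment and a control group."""
    df = n_t + n_c - 2
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd           # Cohen's d
    j = 1.0 - 3.0 / (4.0 * df - 1.0)            # small-sample correction
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2.0 * (n_t + n_c))
    return j * d, j ** 2 * var_d

# Hypothetical post-test scores: online group vs. face-to-face group.
g, var_g = hedges_g(mean_t=80.0, sd_t=10.0, n_t=30, mean_c=75.0, sd_c=10.0, n_c=30)
print(f"g = {g:.3f}, SE = {math.sqrt(var_g):.3f}")
```

A positive g here means the first group scored higher; the variance returned alongside it is what the inverse-variance weights in a meta-analysis are built from.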

The flow chart of the scanning and selection process of the studies

Funnel plot graphics representing the effect size of the effects of online education on academic success

Findings and results

The purpose of this study is to determine the effect size of online education on academic achievement. Before the effect sizes were determined, the probability of publication bias in this meta-analysis was analyzed using the funnel plot, Orwin’s Fail-Safe N analysis, Duval and Tweedie’s Trim and Fill analysis, and Egger’s Regression Test.

When the funnel plots are examined, the studies included in the analysis are seen to be distributed symmetrically on both sides of the combined effect size axis and to cluster in the middle and lower sections. According to the plots, the probability of publication bias is low. However, since funnel plots are open to subjective interpretation, they were supported by additional analyses (Littell et al., 2008). Therefore, to provide further evidence, the probability of publication bias was also analyzed through Orwin’s Fail-Safe N analysis, Duval and Tweedie’s Trim and Fill analysis, and Egger’s Regression Test (Table 2).

Reliability tests results representing the probability of publication bias

* Represents the number of studies required for the Hedges g coefficient to reach a value outside the ±0.01 range

Table 2 presents the publication bias results obtained before calculating the effect size of online education on academic achievement. According to the table, the Orwin’s Fail-Safe N results show that it is not necessary to add new studies to the meta-analysis in order for Hedges g to reach a value outside the range of ±0.01. The Duval and Tweedie test shows that excluding the studies that negatively affect the symmetry of the funnel plots, or adding their exact symmetrical equivalents, does not significantly change the calculated effect size. The non-significant Egger test results indicate that there is no publication bias in the meta-analysis. Together, these results indicate the high internal validity of the effect sizes and their adequacy in representing the studies conducted on the relevant subject.
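For readers unfamiliar with Orwin's statistic, the sketch below shows the usual formula: the number of missing studies, each with an assumed average effect, that would be needed to pull the pooled effect down to a chosen criterion. The function name is hypothetical. The call uses the number of studies (27), the pooled effect (g = 0.409) and the 0.01 criterion mentioned in this article, and it assumes that the missing studies have a null effect; it is not intended to reproduce the values in Table 2.

```python
def orwin_fail_safe_n(k, mean_effect, criterion, missing_effect=0.0):
    """Orwin's fail-safe N.

    k              -- number of studies in the meta-analysis
    mean_effect    -- observed pooled effect size
    criterion      -- effect size regarded as trivially small
    missing_effect -- assumed mean effect of the unpublished studies
    """
    if criterion <= missing_effect:
        raise ValueError("criterion must exceed the assumed effect of missing studies")
    return k * (mean_effect - criterion) / (criterion - missing_effect)

# Values taken from the text; a null effect for missing studies is an assumption.
n_missing = orwin_fail_safe_n(k=27, mean_effect=0.409, criterion=0.01)
print(round(n_missing, 1))
```

A large result relative to the number of included studies is generally read as a sign that the pooled effect is robust to unpublished null findings.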

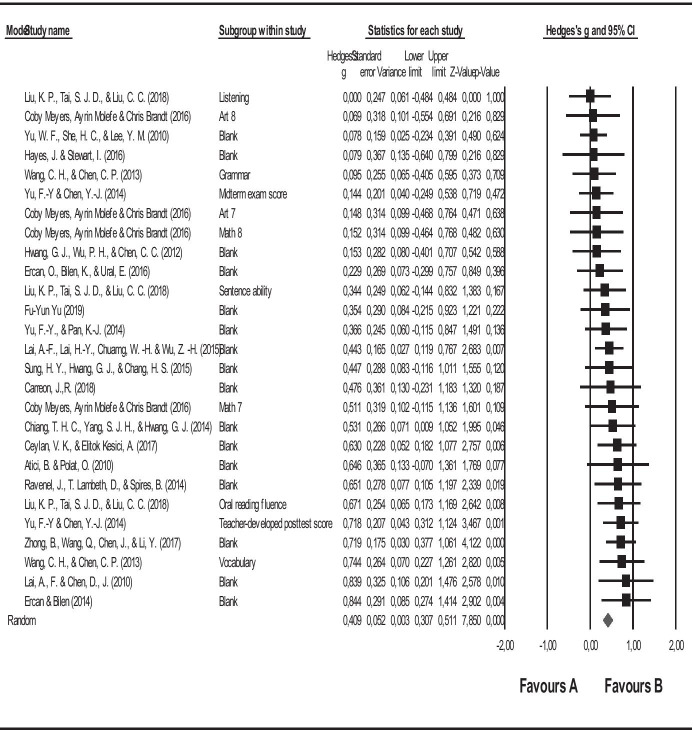

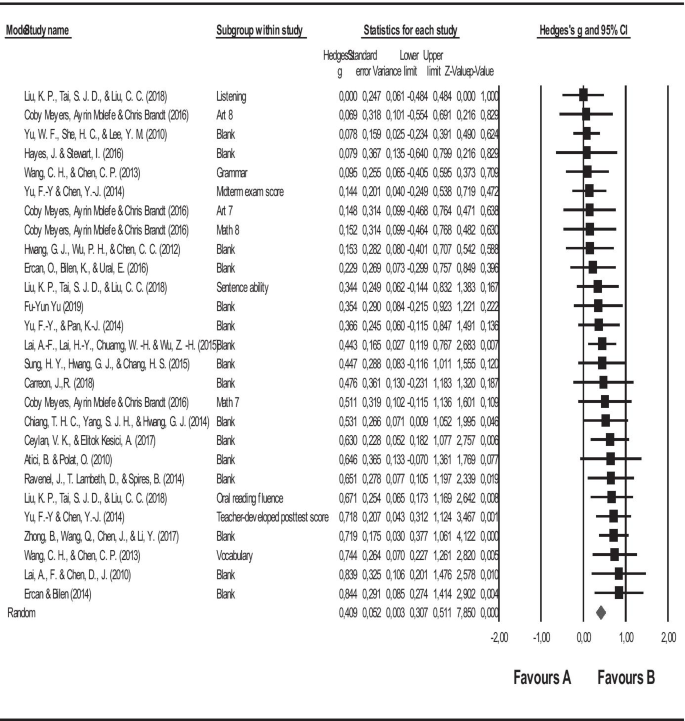

In this study, it was aimed to determine the effect size of online education on academic achievement after testing the publication bias. In line with the first purpose of the study, the forest graph regarding the effect size of online education on academic achievement is shown in Fig. 3 , and the statistics regarding the effect size are given in Table 3 .

Forest graph related to the effect size of online education on academic success

The findings related to the effect size of online education on academic success

n: the Number of Studies included in Meta-Analysis; Hedges g: average effect size

p: significance level of the effect size; S error : standard error; EB low – EB up : lower and upper limits of the effect size

The square symbols in the forest graph in Fig. 3 represent the effect sizes, the horizontal lines show their 95% confidence intervals, and the diamond symbol shows the overall effect size. When the forest graph is analyzed, the lower and upper limits of the combined effect sizes are seen to be generally close to each other, and the study weights are similar. This similarity in study weights indicates that the combined studies contribute similarly to the overall effect size.

Figure 3 shows that the study of Liu et al. (2018) has the lowest effect size and the study of Ercan and Bilen (2014) has the highest. The forest graph shows that all the combined studies and the overall effect are positive. Furthermore, the forest graph in Fig. 3 and the effect size statistics in Table 3 show that, across the 27 studies analyzed, the effect of online education on academic achievement is at a moderate level (g = 0.409).

After the analysis of the effect size, it was also examined whether the studies included in the analysis are distributed heterogeneously. The heterogeneity of the combined studies was determined through the Q and I² tests. As a result of the heterogeneity test, the Q statistic was calculated as 29.576. With 26 degrees of freedom at the 0.05 significance level (95% confidence), the critical value in the chi-square table is 38.885. The Q statistic (29.576) calculated in this study is lower than the critical value of 38.885. The I² value, which complements the Q statistic, is 12.100%. This value indicates that the true heterogeneity, that is, the share of total variability that can be attributed to variability between the studies, is 12%. In addition, the p value (0.285) is higher than 0.05. All these values [Q(26) = 29.579, p = 0.285; I² = 12.100] indicate that the effect sizes are homogeneously distributed and that the fixed effects model should be used to interpret them. However, some researchers argue that even when heterogeneity is low, the results should be evaluated with the random effects model (Borenstein et al., 2007). Therefore, this study gives information about both models. An attempt was made to explain the heterogeneity of the combined studies through the characteristics of the studies included in the analysis. In this context, the final purpose of the study is to determine the effect of the country, academic level, and year variables on the findings. Accordingly, the statistics comparing the stated relations according to the countries where the studies were conducted are given in Table 4.
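The reported heterogeneity figures can be checked with simple arithmetic. The sketch below plugs the Q value from the bracketed summary (29.579), the 26 degrees of freedom, and the critical value quoted in the text (38.885) into the standard I² formula. The critical value is copied from the text, not recomputed, and the helper name `i_squared` is hypothetical.

```python
def i_squared(q, df):
    """Percentage of total variation attributed to between-study heterogeneity."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0

Q_REPORTED = 29.579      # Q value from the bracketed summary in the text
DEGREES_OF_FREEDOM = 26  # 27 studies minus 1
CHI2_CRITICAL = 38.885   # critical chi-square value quoted in the text

i2 = i_squared(Q_REPORTED, DEGREES_OF_FREEDOM)
verdict = "heterogeneous" if Q_REPORTED > CHI2_CRITICAL else "homogeneous"
print(f"I^2 = {i2:.1f}% -> {verdict}")
```

Under these inputs the result rounds to the 12.1% reported in the text, and Q stays below the critical value, which is consistent with the conclusion of a homogeneous distribution.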

The dispersion of the studies according to the countries and the heterogeneity test results

As seen in Table 4, the effect of online education on academic achievement does not differ significantly according to the countries where the studies were conducted. Q test results indicate the heterogeneity of the relationships between the variables in terms of the countries where the studies were conducted. According to the table, the effect of online education on academic achievement was highest in other countries and lowest in the US. The statistics comparing the stated relations according to the class levels are given in Table 5.

The dispersion of the studies according to the class level and the heterogeneity test results

As seen in Table 5, the effect of online education on academic achievement does not differ according to the class level. However, the effect of online education on academic achievement is highest in the 4th class. The statistics comparing the stated relations according to the school subjects are given in Table 6.

The dispersion of the studies according to the school subjects and the heterogeneity test results

As seen in Table 6, the effect of online education on academic achievement does not differ according to the school subjects included in the studies. However, the effect of online education on academic achievement is highest in the ICT subject.

The effect size obtained in this study is based on findings from primary studies conducted in 7 different countries. In addition, these studies cover different approaches to online education (online learning environments, social networks, blended learning, etc.). In this respect, questions may be raised about the validity and generalizability of the results. However, the moderator analyses, whether for the country variable or for the approaches covered by online education, did not reveal significant differences in the effect sizes. Had significant differences in effect sizes occurred, comparisons across countries under the umbrella of online education would have raised doubts about generalizability. Moreover, no study was found in the literature that was conducted under the name of online education alone, without being based on a particular approach or containing a specific technique. For instance, one commonly used term is blended education, defined as an educational model in which online education is combined with traditional education methods (Colis & Moonen, 2001). Similarly, Rasmussen (2003) defines blended learning as “a distance education method that combines technology (high technology such as television, internet, or low technology such as voice e-mail, conferences) with traditional education and training.” Further, Kerres and Witt (2003) define blended learning as “combining face-to-face learning with technology-assisted learning.” As these definitions show, online education has a wide scope and includes many approaches.

As seen in Table 7 , the effect of online education on academic achievement does not differ according to online education approaches included in the studies. However, the effect of online education on academic achievement is the highest in Web Based Problem Solving Approach.

The dispersion of the studies according to the online education approaches and the heterogeneity test results

Conclusions and discussion

Considering the developments during the pandemic, it is thought that the diversity of online education applications, as an interdisciplinary and pragmatic field, will increase, and that learning content and processes will be enriched as new technologies are integrated into online education. Another prediction is that more flexible and accessible learning opportunities will be created in online education, and that lifelong learning will be strengthened in this way. As a result, it is predicted that in the near future online education, or digital learning as it is more recently called, will become the main ground of education instead of being an alternative to, or a support for, face-to-face learning. The lessons learned from the early online learning experience, which required rapid adaptation because of the COVID-19 pandemic, will help to develop this method all over the world, and in the near future online learning will become the main learning structure as new technologies and systems increase its functionality. From this point of view, there is a need to strengthen online education.

In this study, the effect of online learning on academic achievement is at a moderate level. To increase this effect, the implementation of online learning requires support from teachers to prepare learning materials, to design learning appropriately, and to utilize various digital media such as websites, software, and other tools that support the effectiveness of online learning (Rolisca & Achadiyah, 2014). According to research conducted by Rahayu et al. (2017), the use of various types of software increases the effectiveness and quality of online learning. Implementing online learning can affect students' ability to adapt to technological developments, in that it leads students to use various learning resources on the internet to access different types of information and helps them get used to inquiry learning and active learning (Hart et al., 2019; Prestiadi et al., 2019). In addition, there may be many reasons for the low level of effect in this study. The moderator variables examined in this study could serve as a guide for increasing the level of practical effect. However, the effect size did not differ significantly for any of the moderator variables. Different moderator analyses can be carried out in order to increase the impact of online education on academic success. If confounding variables that significantly change the effect level are detected, more precise recommendations for increasing this level can be made. Beyond the technical and financial problems, the level of impact will increase if other difficulties are eliminated, such as students' lack of interaction with the instructor, response time, and the lack of traditional classroom socialization.

In addition, social distancing related to the COVID-19 pandemic has made it extremely difficult for all stakeholders to get online, as they have to work under time and resource constraints. Adopting the online learning environment is not just a technical issue; it is a pedagogical and instructional challenge as well. Therefore, extensive preparation of teaching materials, curriculum, and assessment is vital in online education. Technology is the delivery tool and requires close collaboration between teaching, content, and technology teams (CoSN, 2020).

Online education applications have been used for many years. However, they have come to the fore during the pandemic. This necessity has brought with it a discussion about using online education instead of traditional education methods in the future. However, this research shows that online education applications are moderately effective. Using online education instead of face-to-face education will only be possible if the level of success increases. This may be made possible by the experience and knowledge gained during the pandemic. Therefore, meta-analyses of experimental studies conducted in the coming years will provide guidance. In this context, experimental studies using online education applications should be analyzed well. It would be useful to identify variables that can change the level of impact by examining different moderators. Moderator analyses are valuable in meta-analysis studies (for example, the role of moderators in Karl Pearson's typhoid vaccine studies). In this context, each analysis sheds light on future studies. In future meta-analyses of online education, it would be beneficial to go beyond the moderators determined in this study. In this way, the contribution of similar studies to the field will increase.

The purpose of this study is to determine the effect of online education on academic achievement. In line with this purpose, studies that analyze the effect of online education approaches on academic achievement were included in the meta-analysis. The total sample size of the studies included in the meta-analysis is 1772. While the included studies were conducted in the US, Taiwan, Turkey, China, Philippines, Ireland, and Georgia, studies carried out in Europe could not be reached. This may be because quantitative research methods from a positivist perspective are used more in countries with an American academic tradition. As a result of the study, the effect size of online education on academic achievement (g = 0.409) was found to be moderate. In the studies included in the present research, online education approaches were more effective than traditional ones. However, contrary to the present study, comparisons between online and traditional education in some studies show that face-to-face traditional learning is still considered effective compared to online learning (Ahmad et al., 2016; Hamdani & Priatna, 2020; Wei & Chou, 2020). Online education has advantages and disadvantages. One advantage of online learning over face-to-face learning in the classroom is the flexibility of learning time: it is not tied to a single schedule and can be shaped according to circumstances (Lai et al., 2019). Another advantage is the ease of collecting assignments from students, as this can be done without having to talk to the teacher. Despite this, online education has several weaknesses, such as students having difficulty understanding the material, teachers' inability to control students, and students still having difficulty interacting with teachers when the internet connection is interrupted (Swan, 2007).
According to Astuti et al. (2019), students still consider the face-to-face education method better than e-learning because it is easier to understand the material and easier to interact with teachers. The results of the study showed that the effect size (g = 0.409) of online education on academic achievement is at a medium level. Moreover, the results of the moderator analysis showed that the effect of online education on academic achievement does not differ in terms of the country, lecture, class level, and online education approach variables. A review of the literature shows that several meta-analyses on online education have been published (Bernard et al., 2004; Machtmes & Asher, 2000; Zhao et al., 2005). Typically, these meta-analyses also include studies of older generation technologies such as audio, video, or satellite transmission. One of the most comprehensive studies on online education was conducted by Bernard et al. (2004). In this study, 699 independent effect sizes from 232 studies published from 1985 to 2001 were analyzed, and face-to-face education was compared to online education with respect to success criteria and attitudes of various learners, from young children to adults. In this meta-analysis, an overall effect size close to zero was found for the students' achievement (g+ = 0.01).

In another meta-analysis study carried out by Zhao et al. ( 2005 ), 98 effect sizes were examined, including 51 studies on online education conducted between 1996 and 2002. According to the study of Bernard et al. ( 2004 ), this meta-analysis focuses on the activities done in online education lectures. As a result of the research, an overall effect size close to zero was found for online education utilizing more than one generation technology for students at different levels. However, the salient point of the meta-analysis study of Zhao et al. is that it takes the average of different types of results used in a study to calculate an overall effect size. This practice is problematic because the factors that develop one type of learner outcome (e.g. learner rehabilitation), particularly course characteristics and practices, may be quite different from those that develop another type of outcome (e.g. learner's achievement), and it may even cause damage to the latter outcome. While mixing the studies with different types of results, this implementation may obscure the relationship between practices and learning.

Some meta-analytical studies have focused on the effectiveness of the new generation of distance learning courses accessed through the internet for specific student populations. For instance, Sitzmann et al. (2006) reviewed 96 studies published from 1996 to 2005 that compared web-based education in job-related knowledge or skills with face-to-face education. The researchers found that web-based education was in general slightly more effective than face-to-face education, but that it was insufficient in terms of applicability ("knowing how to apply"). In addition, Sitzmann et al. (2006) revealed that internet-based education had a positive effect on theoretical knowledge in quasi-experimental studies, whereas face-to-face education was favored in experimental studies performed with random assignment. This moderator analysis emphasizes the need to pay attention to the designs of the studies included in a meta-analysis. The designs of the studies included in the present meta-analysis were not taken into account. Examining them can be offered as a suggestion for new studies.

Another meta-analysis study was conducted by Cavanaugh et al. ( 2004 ), in which they focused on online education. In this study on internet-based distance education programs for students under 12 years of age, the researchers combined 116 results from 14 studies published between 1999 and 2004 to calculate an overall effect that was not statistically different from zero. The moderator analysis carried out in this study showed that there was no significant factor affecting the students' success. This meta-analysis used multiple results of the same study, ignoring the fact that different results of the same student would not be independent from each other.

In conclusion, some meta-analytical studies analyzed the consequences of online education for a wide range of students (Bernard et al., 2004; Zhao et al., 2005), and the effect sizes were generally low in these studies. Furthermore, none of the large-scale meta-analyses considered the moderators, database quality standards, or class levels in the selection of the studies, while some of them referred only to the country and lecture moderators. Advances in internet-based learning tools, the pandemic, and the increasing popularity of online learning in different contexts have made a precise meta-analysis of students' learning outcomes through online learning necessary. Previous meta-analyses were typically based on studies involving a narrow range of confounding variables. In the present study, common but significant moderators such as class level and lectures during the pandemic were discussed. For instance, problems were experienced during the pandemic especially regarding the suitability of online education platforms for different class levels. It was found that there is a need to study and make suggestions on whether online education can meet the needs of teachers and students.

Besides, in the past the main forms of online education were watching the open lectures of well-known universities and the educational videos of institutions. During the pandemic period, by contrast, online education has mainly been classroom-based teaching carried out by teachers in their own schools, as an extension of regular school education. This meta-analysis will serve as a source for comparing the effect size of the online education forms of the past decade with what is done today and what will be done in the future.

Lastly, the heterogeneity test results of the meta-analysis study display that the effect size does not differ in terms of class level, country, online education approaches, and lecture moderators.


*Studies included in meta-analysis

- Ahmad, S., Sumardi, K., & Purnawan, P. (2016). Komparasi Peningkatan Hasil Belajar Antara Pembelajaran Menggunakan Sistem Pembelajaran Online Terpadu Dengan Pembelajaran Klasikal Pada Mata Kuliah Pneumatik Dan Hidrolik. Journal of Mechanical Engineering Education, 2(2), 286–292. 10.17509/jmee.v2i2.1491

- Ally, M. (2004). Foundations of educational theory for online learning. Theory and Practice of Online Learning, 2 , 15–44. Retrieved on the 11th of September, 2020 from https://eddl.tru.ca/wp-content/uploads/2018/12/01_Anderson_2008-Theory_and_Practice_of_Online_Learning.pdf

- Arat, T., & Bakan, Ö. (2011). Uzaktan eğitim ve uygulamaları. Selçuk Üniversitesi Sosyal Bilimler Meslek Yüksek Okulu Dergisi , 14 (1–2), 363–374. 10.29249/selcuksbmyd.540741

- Astuti, C. C., Sari, H. M. K., & Azizah, N. L. (2019). Perbandingan Efektifitas Proses Pembelajaran Menggunakan Metode E-Learning dan Konvensional. Proceedings of the ICECRS, 2(1), 35–40. 10.21070/picecrs.v2i1.2395

- *Atici, B., & Polat, O. C. (2010). Influence of the online learning environments and tools on the student achievement and opinions. Educational Research and Reviews, 5 (8), 455–464. Retrieved on the 11th of October, 2020 from https://academicjournals.org/journal/ERR/article-full-text-pdf/4C8DD044180.pdf

- Bernard, R. M., Abrami, P. C., Lou, Y., Borokhovski, E., Wade, A., Wozney, L., et al. (2004). How does distance education compare with classroom instruction? A meta-analysis of the empirical literature. Review of Educational Research, 74(3), 379–439. 10.3102/00346543074003379

- Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2009). Introduction to meta-analysis. Wiley.

- Borenstein, M., Hedges, L., & Rothstein, H. (2007). Meta-analysis: Fixed effect vs. random effects. UK: Wiley.

- Card, N. A. (2011). Applied meta-analysis for social science research: Methodology in the social sciences. Guilford.

- *Carreon, J. R. (2018). Facebook as integrated blended learning tool in technology and livelihood education exploratory. Retrieved on the 1st of October, 2020 from https://files.eric.ed.gov/fulltext/EJ1197714.pdf

- Cavanaugh, C., Gillan, K. J., Kromrey, J., Hess, M., & Blomeyer, R. (2004). The effects of distance education on K-12 student outcomes: A meta-analysis. Learning Point Associates/North Central Regional Educational Laboratory (NCREL) . Retrieved on the 11th of September, 2020 from https://files.eric.ed.gov/fulltext/ED489533.pdf

- *Ceylan, V. K., & Elitok Kesici, A. (2017). Effect of blended learning to academic achievement. Journal of Human Sciences, 14 (1), 308. 10.14687/jhs.v14i1.4141

- *Chae, S. E., & Shin, J. H. (2016). Tutoring styles that encourage learner satisfaction, academic engagement, and achievement in an online environment. Interactive Learning Environments, 24(6), 1371–1385. 10.1080/10494820.2015.1009472

- *Chiang, T. H. C., Yang, S. J. H., & Hwang, G. J. (2014). An augmented reality-based mobile learning system to improve students’ learning achievements and motivations in natural science inquiry activities. Educational Technology and Society, 17 (4), 352–365. Retrieved on the 11th of September, 2020 from https://www.researchgate.net/profile/Gwo_Jen_Hwang/publication/287529242_An_Augmented_Reality-based_Mobile_Learning_System_to_Improve_Students'_Learning_Achievements_and_Motivations_in_Natural_Science_Inquiry_Activities/links/57198c4808ae30c3f9f2c4ac.pdf

- Chiao HM, Chen YL, Huang WH. Examining the usability of an online virtual tour-guiding platform for cultural tourism education. Journal of Hospitality, Leisure, Sport & Tourism Education. 2018;23:29–38. doi: 10.1016/j.jhlste.2018.05.002.

- Chizmar, J. F., & Walbert, M. S. (1999). Web-based learning environments guided by principles of good teaching practice. Journal of Economic Education, 30 (3), 248–264. 10.2307/1183061

- Cleophas, T. J., & Zwinderman, A. H. (2017). Modern meta-analysis: Review and update of methodologies . Switzerland: Springer. 10.1007/978-3-319-55895-0

- Cohen, L., Manion, L., & Morrison, K. (2007). Observation. Research Methods in Education, 6 , 396–412. Retrieved on the 11th of September, 2020 from https://www.researchgate.net/profile/Nabil_Ashraf2/post/How_to_get_surface_potential_Vs_Voltage_curve_from_CV_and_GV_measurements_of_MOS_capacitor/attachment/5ac6033cb53d2f63c3c405b4/AS%3A612011817844736%401522926396219/download/Very+important_C-V+characterization+Lehigh+University+thesis.pdf

- Collis B, Moonen J. Flexible Learning in a Digital World: Experiences and Expectations. Open & Distance Learning Series. Stylus Publishing; 2001.

- CoSN. (2020). COVID-19 Response: Preparing to Take School Online. Retrieved on the 3rd of September, 2021 from https://www.cosn.org/sites/default/files/COVID-19%20Member%20Exclusive_0.pdf

- Cumming, G. (2012). Understanding new statistics: Effect sizes, confidence intervals, and meta-analysis. New York, USA: Routledge. 10.4324/9780203807002

- Deeks, J. J., Higgins, J. P. T., & Altman, D. G. (2008). Analysing data and undertaking meta-analyses. In J. P. T. Higgins & S. Green (Eds.), Cochrane handbook for systematic reviews of interventions (pp. 243–296). Sussex: John Wiley & Sons. 10.1002/9780470712184.ch9

- Demiralay, R., Bayır, E. A., & Gelibolu, M. F. (2016). Öğrencilerin bireysel yenilikçilik özellikleri ile çevrimiçi öğrenmeye hazır bulunuşlukları ilişkisinin incelenmesi. Eğitim ve Öğretim Araştırmaları Dergisi, 5 (1), 161–168. 10.23891/efdyyu.2017.10

- Dinçer, S. (2014). Eğitim bilimlerinde uygulamalı meta-analiz. Pegem Atıf İndeksi, 2014(1), 1–133. 10.14527/pegem.001

- *Durak, G., Cankaya, S., Yunkul, E., & Ozturk, G. (2017). The effects of a social learning network on students’ performances and attitudes. European Journal of Education Studies, 3 (3), 312–333. 10.5281/zenodo.292951

- *Ercan, O. (2014). Effect of web assisted education supported by six thinking hats on students’ academic achievement in science and technology classes. European Journal of Educational Research, 3 (1), 9–23. 10.12973/eu-jer.3.1.9

- Ercan O, Bilen K. Effect of web assisted education supported by six thinking hats on students’ academic achievement in science and technology classes. European Journal of Educational Research. 2014;3(1):9–23. doi: 10.12973/eu-jer.3.1.9.

- *Ercan, O., Bilen, K., & Ural, E. (2016). “Earth, sun and moon”: Computer assisted instruction in secondary school science - Achievement and attitudes. Issues in Educational Research, 26 (2), 206–224. 10.12973/eu-jer.3.1.9

- Field, A. P. (2003). The problems in using fixed-effects models of meta-analysis on real-world data. Understanding Statistics, 2 (2), 105–124. 10.1207/s15328031us0202_02

- Field, A. P., & Gillett, R. (2010). How to do a meta-analysis. British Journal of Mathematical and Statistical Psychology, 63 (3), 665–694. 10.1348/00071010x502733

- Geostat. (2019). ‘Share of households with internet access’, National statistics office of Georgia . Retrieved on the 2nd September 2020 from https://www.geostat.ge/en/modules/categories/106/information-and-communication-technologies-usage-in-households

- *Gwo-Jen, H., Nien-Ting, T., & Xiao-Ming, W. (2018). Creating interactive e-books through learning by design: The impacts of guided peer-feedback on students’ learning achievements and project outcomes in science courses. Journal of Educational Technology & Society, 21 (1), 25–36. Retrieved on the 2nd of October, 2020 from https://ae-uploads.uoregon.edu/ISTE/ISTE2019/PROGRAM_SESSION_MODEL/HANDOUTS/112172923/CreatingInteractiveeBooksthroughLearningbyDesignArticle2018.pdf

- Hamdani, A. R., & Priatna, A. (2020). Efektifitas implementasi pembelajaran daring (full online) dimasa pandemi Covid-19 pada jenjang Sekolah Dasar di Kabupaten Subang. Didaktik: Jurnal Ilmiah PGSD STKIP Subang, 6 (1), 1–9.

- Hart, C. M., Berger, D., Jacob, B., Loeb, S., & Hill, M. (2019). Online learning, offline outcomes: Online course taking and high school student performance. Aera Open, 5(1).

- *Hayes, J., & Stewart, I. (2016). Comparing the effects of derived relational training and computer coding on intellectual potential in school-age children. The British Journal of Educational Psychology, 86 (3), 397–411. 10.1111/bjep.12114

- Horton WK. Designing web-based training: How to teach anyone anything anywhere anytime. Wiley Publishing; 2000.

- *Hwang, G. J., Wu, P. H., & Chen, C. C. (2012). An online game approach for improving students’ learning performance in web-based problem-solving activities. Computers and Education, 59 (4), 1246–1256. 10.1016/j.compedu.2012.05.009

- *Kert, S. B., Köşkeroğlu Büyükimdat, M., Uzun, A., & Çayiroğlu, B. (2017). Comparing active game-playing scores and academic performances of elementary school students. Education 3–13, 45 (5), 532–542. 10.1080/03004279.2016.1140800

- *Lai, A. F., & Chen, D. J. (2010). Web-based two-tier diagnostic test and remedial learning experiment. International Journal of Distance Education Technologies, 8 (1), 31–53. 10.4018/jdet.2010010103

- *Lai, A. F., Lai, H. Y., Chuang W. H., & Wu, Z.H. (2015). Developing a mobile learning management system for outdoors nature science activities based on 5e learning cycle. Proceedings of the International Conference on e-Learning, ICEL. Proceedings of the International Association for Development of the Information Society (IADIS) International Conference on e-Learning (Las Palmas de Gran Canaria, Spain, July 21–24, 2015). Retrieved on the 14th November 2020 from https://files.eric.ed.gov/fulltext/ED562095.pdf

- Lai CH, Lin HW, Lin RM, Tho PD. Effect of peer interaction among online learning community on learning engagement and achievement. International Journal of Distance Education Technologies (IJDET). 2019;17(1):66–77. doi: 10.4018/IJDET.2019010105.

- Littell JH, Corcoran J, Pillai V. Systematic reviews and meta-analysis. Oxford University; 2008.

- *Liu, K. P., Tai, S. J. D., & Liu, C. C. (2018). Enhancing language learning through creation: the effect of digital storytelling on student learning motivation and performance in a school English course. Educational Technology Research and Development, 66 (4), 913–935. 10.1007/s11423-018-9592-z

- Machtmes, K., & Asher, J. W. (2000). A meta-analysis of the effectiveness of telecourses in distance education. American Journal of Distance Education, 14 (1), 27–46. 10.1080/08923640009527043

- Makowski, D., Piraux, F., & Brun, F. (2019). From experimental network to meta-analysis: Methods and applications with R for agronomic and environmental sciences. Dordrecht: Springer. 10.1007/978-94-024-1696-1

- *Meyers, C., Molefe, A., & Brandt, C. (2015). The Impact of the "Enhancing Missouri's Instructional Networked Teaching Strategies" (eMINTS) Program on Student Achievement, 21st-Century Skills, and Academic Engagement--Second-Year Results. Society for Research on Educational Effectiveness. Retrieved on the 14th of November, 2020 from https://files.eric.ed.gov/fulltext/ED562508.pdf

- OECD. (2020). ‘A framework to guide an education response to the COVID-19 Pandemic of 2020’. 10.26524/royal.37.6

- Pecoraro, V. (2018). Appraising evidence. In G. Biondi-Zoccai (Ed.), Diagnostic meta-analysis: A useful tool for clinical decision-making (pp. 99–114). Cham, Switzerland: Springer. 10.1007/978-3-319-78966-8_9

- Pigott T. Advances in meta-analysis. Springer; 2012.

- Pillay, H., Irving, K., & Tones, M. (2007). Validation of the diagnostic tool for assessing tertiary students’ readiness for online learning. Higher Education Research & Development, 26 (2), 217–234. 10.1080/07294360701310821

- Prestiadi, D., Zulkarnain, W., & Sumarsono, R. B. (2019). Visionary leadership in total quality management: efforts to improve the quality of education in the industrial revolution 4.0. In the 4th International Conference on Education and Management (COEMA 2019). Atlantis Press

- Poole, D. M. (2000). Student participation in a discussion-oriented online course: a case study. Journal of Research on Computing in Education, 33 (2), 162–177. 10.1080/08886504.2000.10782307

- Rahayu FS, Budiyanto D, Palyama D. Analisis penerimaan e-learning menggunakan technology acceptance model (TAM) (Studi Kasus: Universitas Atma Jaya Yogyakarta). Jurnal Terapan Teknologi Informasi. 2017;1(2):87–98. doi: 10.21460/jutei.2017.12.20.

- Rasmussen RC. The quantity and quality of human interaction in a synchronous blended learning environment. Brigham Young University Press; 2003.

- *Ravenel, J., Lambeth, D. T., & Spires, B. (2014). Effects of computer-based programs on mathematical achievement scores for fourth-grade students. i-manager’s Journal on School Educational Technology, 10 (1), 8–21. 10.26634/jsch.10.1.2830

- Rolisca, R. U. C., & Achadiyah, B. N. (2014). Pengembangan media evaluasi pembelajaran dalam bentuk online berbasis e-learning menggunakan software wondershare quiz creator dalam mata pelajaran akuntansi SMA Brawijaya Smart School (BSS). Jurnal Pendidikan Akuntansi Indonesia, 12(2).

- Sitzmann, T., Kraiger, K., Stewart, D., & Wisher, R. (2006). The comparative effectiveness of Web-based and classroom instruction: A meta-analysis. Personnel Psychology, 59 (3), 623–664. 10.1111/j.1744-6570.2006.00049.x

- Stewart DW, Kamins MA. Developing a coding scheme and coding study reports. In: Lipsey MW, Wilson DB, editors. Practical meta-analysis: Applied social research methods series. Sage; 2001. pp. 73–90.

- Swan K. Research on online learning. Journal of Asynchronous Learning Networks. 2007;11(1):55–59.

- *Sung, H. Y., Hwang, G. J., & Chang, Y. C. (2016). Development of a mobile learning system based on a collaborative problem-posing strategy. Interactive Learning Environments, 24 (3), 456–471. 10.1080/10494820.2013.867889

- Tsagris, M., & Fragkos, K. C. (2018). Meta-analyses of clinical trials versus diagnostic test accuracy studies. In G. Biondi-Zoccai (Ed.), Diagnostic meta-analysis: A useful tool for clinical decision-making (pp. 31–42). Cham, Switzerland: Springer. 10.1007/978-3-319-78966-8_4

- UNESCO. (2020, March 13). COVID-19 educational disruption and response. Retrieved on the 14th of November, 2020 from https://en.unesco.org/themes/education-emergencies/coronavirus-school-closures

- Usta E. The effect of web-based learning environments on attitudes of students regarding computer and internet. Procedia-Social and Behavioral Sciences. 2011;28:262–269. doi: 10.1016/j.sbspro.2011.11.051.

- Usta, E. (2011b). The examination of online self-regulated learning skills in web-based learning environments in terms of different variables. Turkish Online Journal of Educational Technology-TOJET, 10 (3), 278–286. Retrieved on the 14th November 2020 from https://files.eric.ed.gov/fulltext/EJ944994.pdf

- Vrasidas, C., & McIsaac, M. S. (2000). Principles of pedagogy and evaluation for web-based learning. Educational Media International, 37 (2), 105–111. 10.1080/095239800410405

- *Wang, C. H., & Chen, C. P. (2013). Effects of Facebook tutoring on learning English as a second language. Proceedings of the International Conference e-Learning 2013, (2009), 135–142. Retrieved on the 15th November 2020 from https://files.eric.ed.gov/fulltext/ED562299.pdf

- Wei HC, Chou C. Online learning performance and satisfaction: Do perceptions and readiness matter? Distance Education. 2020;41(1):48–69. doi: 10.1080/01587919.2020.1724768.

- *Yu, F. Y. (2019). The learning potential of online student-constructed tests with citing peer-generated questions. Interactive Learning Environments, 27 (2), 226–241. 10.1080/10494820.2018.1458040

- *Yu, F. Y., & Chen, Y. J. (2014). Effects of student-generated questions as the source of online drill-and-practice activities on learning. British Journal of Educational Technology, 45 (2), 316–329. 10.1111/bjet.12036

- *Yu, F. Y., & Pan, K. J. (2014). The effects of student question-generation with online prompts on learning. Educational Technology and Society, 17 (3), 267–279. Retrieved on the 15th November 2020 from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.565.643&rep=rep1&type=pdf

- *Yu, W. F., She, H. C., & Lee, Y. M. (2010). The effects of web-based/non-web-based problem-solving instruction and high/low achievement on students’ problem-solving ability and biology achievement. Innovations in Education and Teaching International, 47 (2), 187–199. 10.1080/14703291003718927

- Zhao, Y., Lei, J., Yan, B., Lai, C., & Tan, S. (2005). A practical analysis of research on the effectiveness of distance education. Teachers College Record, 107 (8). 10.1111/j.1467-9620.2005.00544.x

- *Zhong, B., Wang, Q., Chen, J., & Li, Y. (2017). Investigating the period of switching roles in pair programming in a primary school. Educational Technology and Society, 20 (3), 220–233. Retrieved on the 15th November 2020 from https://repository.nie.edu.sg/bitstream/10497/18946/1/ETS-20-3-220.pdf
